Guidance for Building Your Enterprise WAN on AWS User Guide

Summary: This Guidance demonstrates a four-phased approach to progressively migrate your enterprise wide area network (WAN) to AWS. Each phase includes an architecture diagram that allows you to envision the future state of your networking environment and the intermediate steps involved in the migration process.

Overview

The four-phased approach to progressively build your enterprise Wide Area Network (WAN) on AWS is outlined in this companion guide. For more background, benefits, and an overview, follow the Guidance for Building Your Enterprise WAN on AWS.

Phase 1

Use the AWS global network for backup connectivity between data centers.

Your company is migrating workloads to the cloud, but has requirements to maintain mission-critical workloads on-premises, such as financial risk management, automotive manufacturing, and healthcare medical imaging. These workloads span globally dispersed data centers and are accessed by users at branch offices around the world. The branches are interconnected with technologies like Multiprotocol Label Switching (MPLS). Your workloads require these data centers to be connected by diverse network paths to make them fault-tolerant in case the primary path fails. However, the average utilization on these backup paths is typically low (for example, under 25%), resulting in a high cost per byte. You are looking for a backup network that is equally reliable yet offers a consumption-based cost model.

For cloud migration, your data centers already use dedicated high-speed connections to AWS through AWS Direct Connect. In addition to serving as a cloud on-ramp, Direct Connect also allows connectivity between data centers through the SiteLink feature. SiteLink creates an on-demand, consumption-based network connecting all your data centers. By making appropriate routing changes to your on-premises network, you can route traffic to SiteLink when a primary link fails, which meets the fault tolerance requirement of your workloads. With SiteLink, your inter-data center traffic takes the shortest path across the AWS global network, creating a reliable low-latency backup network with a pay-as-you-grow cost model. This allows you to build confidence in using the AWS global network to route your production workloads between data centers.

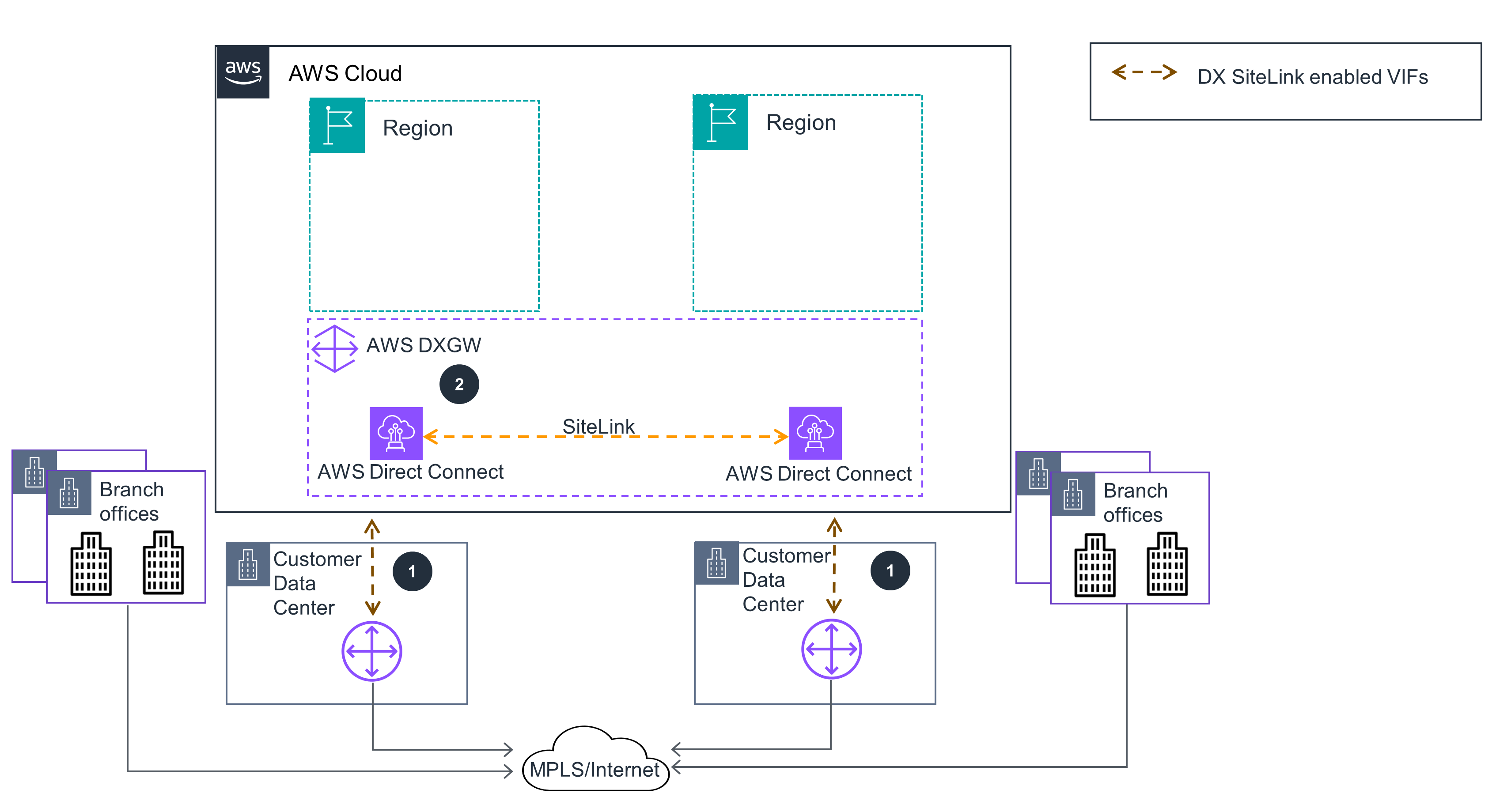

Back up your connectivity between data centers

Figure 1: Guidance deploys AWS Direct Connect SiteLink as a backup connection between on-premises data centers.

Create an AWS Direct Connect (DX) connection from each data center (DC) to the chosen DX Location.

Once physical connectivity is established, create a Virtual Interface (VIF) for each DX connection, with all VIFs terminating on the same DX Gateway (DXGW). This ensures that a Border Gateway Protocol (BGP) session is established between the DC router(s) and AWS. Turn on SiteLink on each VIF you created, then test the connectivity between data centers over SiteLink.
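The VIF step above can be sketched in Python. This is a minimal sketch under stated assumptions, not a definitive implementation: the connection IDs, gateway ID, VLANs, and ASNs are hypothetical placeholders, and `sitelink_vif_request` is an illustrative helper that only builds the parameters. The parameter names follow the Direct Connect `CreatePrivateVirtualInterface` API as exposed by boto3, and should be checked against the current API reference before use.

```python
def sitelink_vif_request(connection_id, dxgw_id, name, vlan, customer_asn):
    """Build the parameters for one private VIF with SiteLink turned on."""
    return {
        "connectionId": connection_id,
        "newPrivateVirtualInterface": {
            "virtualInterfaceName": name,
            "vlan": vlan,
            "asn": customer_asn,                # BGP ASN of the DC router
            "directConnectGatewayId": dxgw_id,  # same DXGW for every VIF
            "enableSiteLink": True,
        },
    }


# Hypothetical placeholder values; replace with your own resources.
DXGW_ID = "dxgw-EXAMPLE"
DATA_CENTERS = [
    {"connection_id": "dxcon-EXAMPLE1", "name": "dc1-sitelink", "vlan": 101, "asn": 65001},
    {"connection_id": "dxcon-EXAMPLE2", "name": "dc2-sitelink", "vlan": 102, "asn": 65002},
]

vif_requests = [
    sitelink_vif_request(dc["connection_id"], DXGW_ID, dc["name"], dc["vlan"], dc["asn"])
    for dc in DATA_CENTERS
]

# With AWS credentials configured, each request could be submitted like this:
#   import boto3
#   dx = boto3.client("directconnect")
#   for request in vif_requests:
#       dx.create_private_virtual_interface(**request)
```

Building the requests separately from sending them lets you review that every VIF points at the same DXGW and has SiteLink enabled before any resource is created.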

Phase 2

Use the AWS global network to connect your data centers.

In this phase, your objective is to achieve a consumption-based model for your primary on-premises network. In the previous phase, you used the SiteLink feature of AWS Direct Connect as a backup network path for your data centers. Once you have validated that SiteLink meets your backup requirements for fault tolerance, you are ready to verify that it can carry workloads as the primary network path. You can start by migrating less critical workloads (such as lower priority traffic) to the SiteLink network, which minimizes risk during the validation phase. You can achieve this by steering traffic to SiteLink using more specific routes configured in your routers on each end of the DX connection (that is, in the DX location and in your data center). After you successfully validate less critical traffic, you can migrate more critical workloads or higher priority traffic.
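Steering by more specific routes relies on longest-prefix matching: when two routes cover a destination, routers prefer the one with the longer prefix. The sketch below illustrates that selection rule using only the Python standard library. The prefixes and next-hop labels are hypothetical, and the function models the selection logic only, not an actual router configuration.

```python
import ipaddress


def best_route(routes, destination):
    """Return the (prefix, next_hop) with the longest prefix covering destination."""
    dest = ipaddress.ip_address(destination)
    matches = []
    for prefix, next_hop in routes:
        network = ipaddress.ip_network(prefix)
        if dest in network:
            matches.append((network, next_hop))
    if not matches:
        return None
    network, next_hop = max(matches, key=lambda match: match[0].prefixlen)
    return str(network), next_hop


# Hypothetical routing table for traffic toward a remote data center.
routes = [
    ("10.20.0.0/16", "mpls"),       # existing aggregate route over the primary path
    ("10.20.30.0/24", "sitelink"),  # more specific route for a less critical workload
]

low_priority = best_route(routes, "10.20.30.15")   # falls inside the /24
everything_else = best_route(routes, "10.20.40.15")  # only the /16 covers it
```

Here `low_priority` resolves to the SiteLink path while `everything_else` stays on MPLS, which is how a single more specific prefix can move one workload at a time.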

Additionally, to minimize impact to your workloads, you can achieve high resiliency and meet uptime Service Level Agreements (SLAs) by following the AWS Direct Connect design recommendations for high resiliency connections and the associated SLA commitments (up to 99.99%) based on the level of resiliency in your design.
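To put an uptime percentage in concrete terms, the following sketch converts it into the downtime it permits. It assumes the percentage is measured over a 30-day month, which is an assumption made for illustration; the measurement period and terms are defined in the AWS Direct Connect SLA itself.

```python
def allowed_downtime_minutes(sla_percent, days=30):
    """Minutes of downtime permitted by an uptime percentage over `days` days."""
    total_minutes = days * 24 * 60
    return total_minutes * (1 - sla_percent / 100)


# Compare two uptime levels over an assumed 30-day month.
for sla in (99.9, 99.99):
    print(f"{sla}% uptime allows about {allowed_downtime_minutes(sla):.2f} minutes of downtime")
```

Under this assumption, 99.99% leaves roughly 4.32 minutes per month, compared with roughly 43.2 minutes at 99.9%.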

While this phase moves you one step closer to migrating your on-premises network to AWS, your branch office connectivity may still stay on MPLS or internet connections and terminate in your data center. In the next phase, we will explain how to migrate your branch connectivity to AWS to minimize NAT loopback (also known as hairpinning) of traffic destined for the cloud.

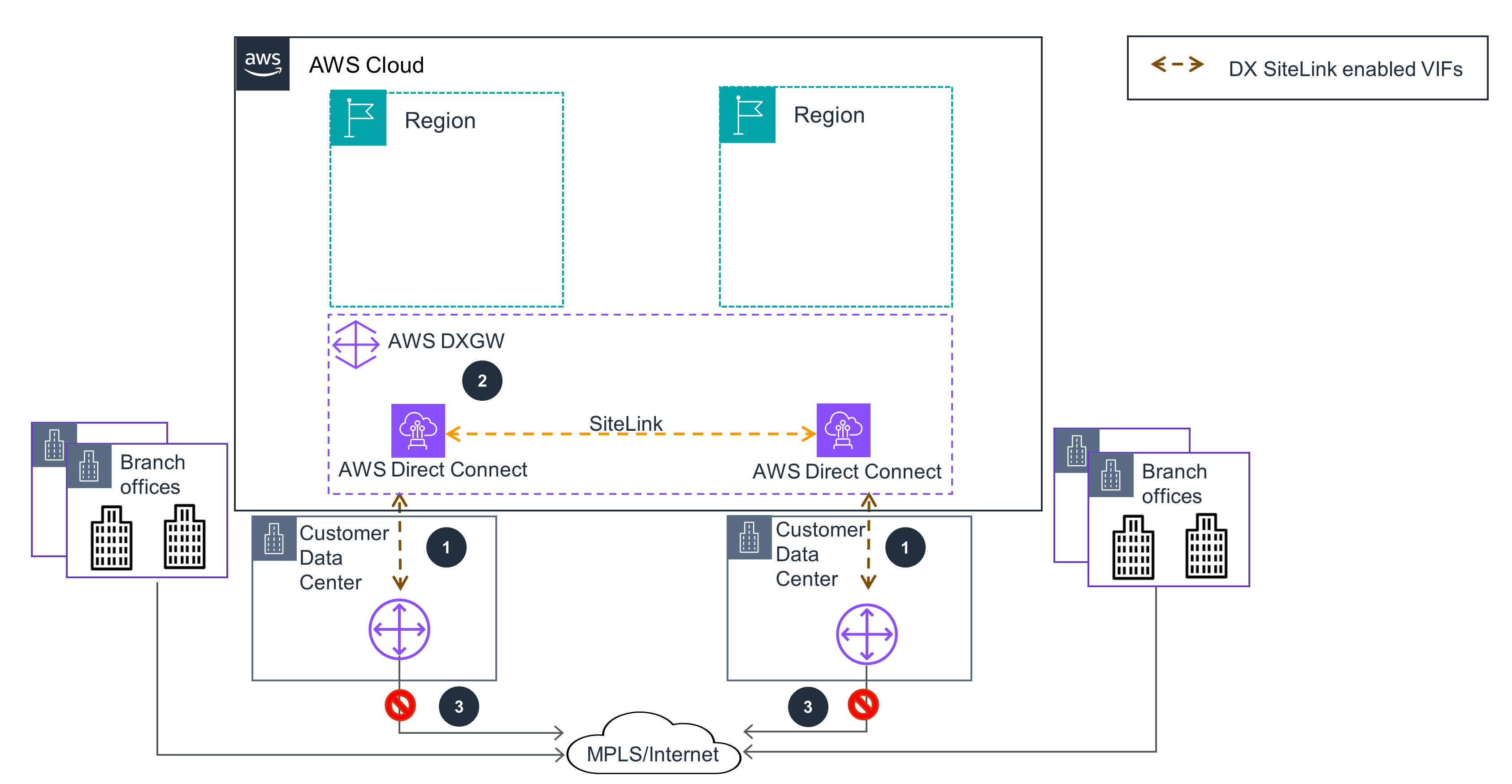

Connect your data centers

Figure 2: Guidance deploys AWS Direct Connect SiteLink as the primary connection between on-premises data centers

Create an AWS Direct Connect (DX) connection from each data center (DC) to the chosen DX Location.

Once physical connectivity is established, create a Virtual Interface (VIF) for each DX connection, with all VIFs terminating on the same DX Gateway (DXGW). This ensures that a Border Gateway Protocol (BGP) session is established between the DC router(s) and AWS. Turn on SiteLink on each VIF you created, then test the connectivity between data centers over SiteLink.

You eliminate the need for Multiprotocol Label Switching (MPLS) between the data centers, and reduce the connection capacity to your MPLS provider.

Phase 3

Use Cloud WAN to connect branch offices and segment your enterprise WAN

In the previous phases, we explained how to systematically migrate your data center interconnects to AWS. However, on-premises connectivity also requires branch office connectivity. Imagine your company having hundreds of branch offices globally, with each office requiring network resources to be isolated across business segments like sales, accounting, and corporate. As your network grows to meet business demands, you are looking not only to migrate these connections to AWS, but also have a centralized system that creates, manages, and monitors these connections and segments. In this phase, we show how you can achieve this using AWS Networking services, like Cloud WAN.

Cloud WAN provides a central dashboard for making connections between your branch offices and your Amazon Virtual Private Clouds (Amazon VPCs), building a global network with only a few clicks. You use network policies to automate network management and security tasks in one location. Cloud WAN generates a complete view of the network to help you monitor network health, security, and performance. In addition, it allows integration with third-party SD-WAN solutions, enabling you to extend your existing branch connectivity to AWS.

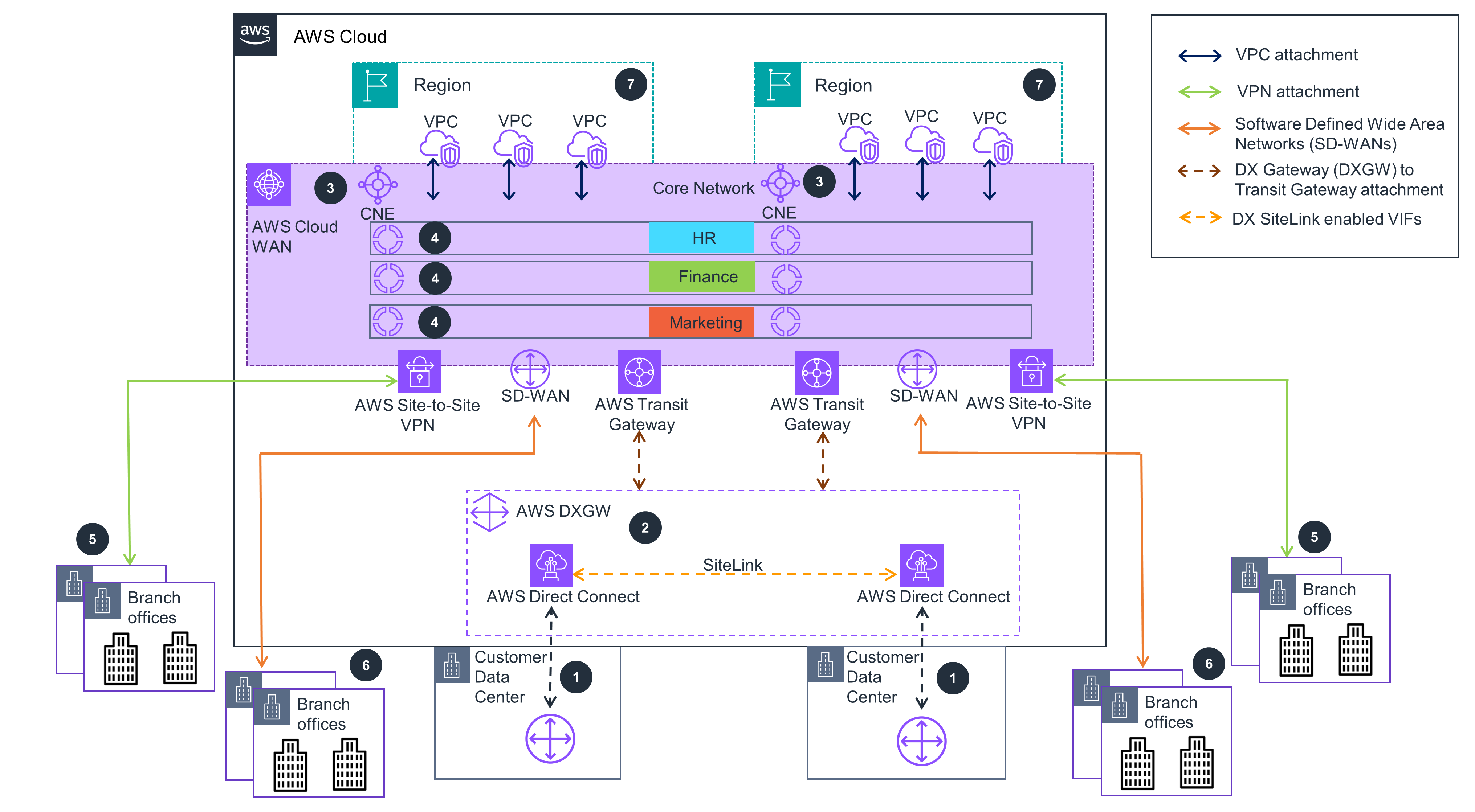

Connect branch offices and segment your enterprise WAN

Figure 3: Guidance deploys Cloud WAN, SiteLink, Transit Gateway, Site-to-Site VPN, and SD-WAN for connecting branch offices to AWS

Create an AWS Direct Connect (DX) connection from each data center (DC) to the chosen DX Location.

Once physical connectivity is established, create a Virtual Interface (VIF) for each DX connection, with all VIFs terminating on the same DX Gateway (DXGW). This ensures that a Border Gateway Protocol (BGP) session is established between the DC router(s) and AWS. Turn on SiteLink on each VIF you created, then test the connectivity between data centers over SiteLink.

Set up connectivity within your AWS environment using AWS Cloud WAN. In AWS Cloud WAN, start by defining your global network, which is a single, private network that acts as the high-level container for your network objects, and the core network, which is the part of your global network managed by AWS.

In the core network policy, specify the AWS Regions where the Core Network Edges (CNEs) will be deployed and the Autonomous System Number (ASN) range for the CNEs. Follow the Guidance for Automating Amazon VPC Routing in a Global Cloud WAN Deployment for details and sample code to deploy a Cloud WAN network.

Create a Site-to-Site VPN attachment from each branch office location that requires IPsec connectivity into the physically closest AWS Region.

Create Connect attachments (for attaching Software-Defined Wide Area Networks (SD-WANs)) from branch offices that require Generic Routing Encapsulation (GRE) connectivity to an SD-WAN appliance deployed in the closest AWS Region.

Create the network segments to ensure traffic segregation, and create attachment policies to ensure the attachments map to the correct segment. Create VPC, virtual private network (VPN), AWS Direct Connect, and Transit Gateway route table attachments to connect the respective resources to the core network created in Step 3.
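The policy-related steps above (Regions, ASN range, segments, and attachment policies) can be sketched as a core network policy document built in Python. This is a minimal sketch, not the policy used by this Guidance: the Regions, ASN range, and the `segment` tag key are hypothetical, the segment names are taken from the business segments mentioned earlier in this phase, and `build_core_network_policy` is an illustrative helper. The field names follow the Cloud WAN policy document format and should be verified against the current policy reference.

```python
import json


def build_core_network_policy(regions, asn_range, segments, tag_key="segment"):
    """Build a core network policy with one attachment rule per segment."""
    return {
        "version": "2021.12",
        "core-network-configuration": {
            "asn-ranges": [asn_range],
            "edge-locations": [{"location": region} for region in regions],
        },
        "segments": [{"name": name} for name in segments],
        "attachment-policies": [
            {
                "rule-number": 100 * (index + 1),
                "condition-logic": "or",
                # Match attachments tagged, for example, segment=sales.
                "conditions": [
                    {"type": "tag-value", "operator": "equals", "key": tag_key, "value": name}
                ],
                "action": {"association-method": "constant", "segment": name},
            }
            for index, name in enumerate(segments)
        ],
    }


# Hypothetical Regions and ASN range; segment names follow the example in the text.
policy = build_core_network_policy(
    regions=["us-east-1", "eu-west-1"],
    asn_range="64512-64555",
    segments=["sales", "accounting", "corporate"],
)
policy_json = json.dumps(policy, indent=2)
```

With rules like these, an attachment tagged with a segment name is associated with that segment automatically, so the mapping lives in the policy rather than in per-attachment configuration.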

Phase 4

Use the AWS global network to expand your enterprise WAN footprint

In the previous phases, we walked you through the stages of building an enterprise WAN on AWS to connect resources on-premises and in the cloud. With this network in place, you are empowered to meet changing traffic patterns and unpredictable bandwidth peaks. As your business continues to grow globally, you are now required to extend your cloud and on-premises presence to additional geographical locations in the absence of deterministic capacity and bandwidth requirements. This means quickly extending your enterprise WAN to additional cloud Regions, data centers and branch offices in new locations over connections that offer a flexible bandwidth usage model. Luckily, with your enterprise WAN running on AWS, you are positioned well to meet such demands.

In this final phase, we show how you can extend your WAN to additional cloud Regions and on-premises locations by using a combination of Direct Connect with SiteLink, and Cloud WAN. You can achieve this in a flexible, pay-as-you-grow manner without having to invest in capital expenditure for your WAN.

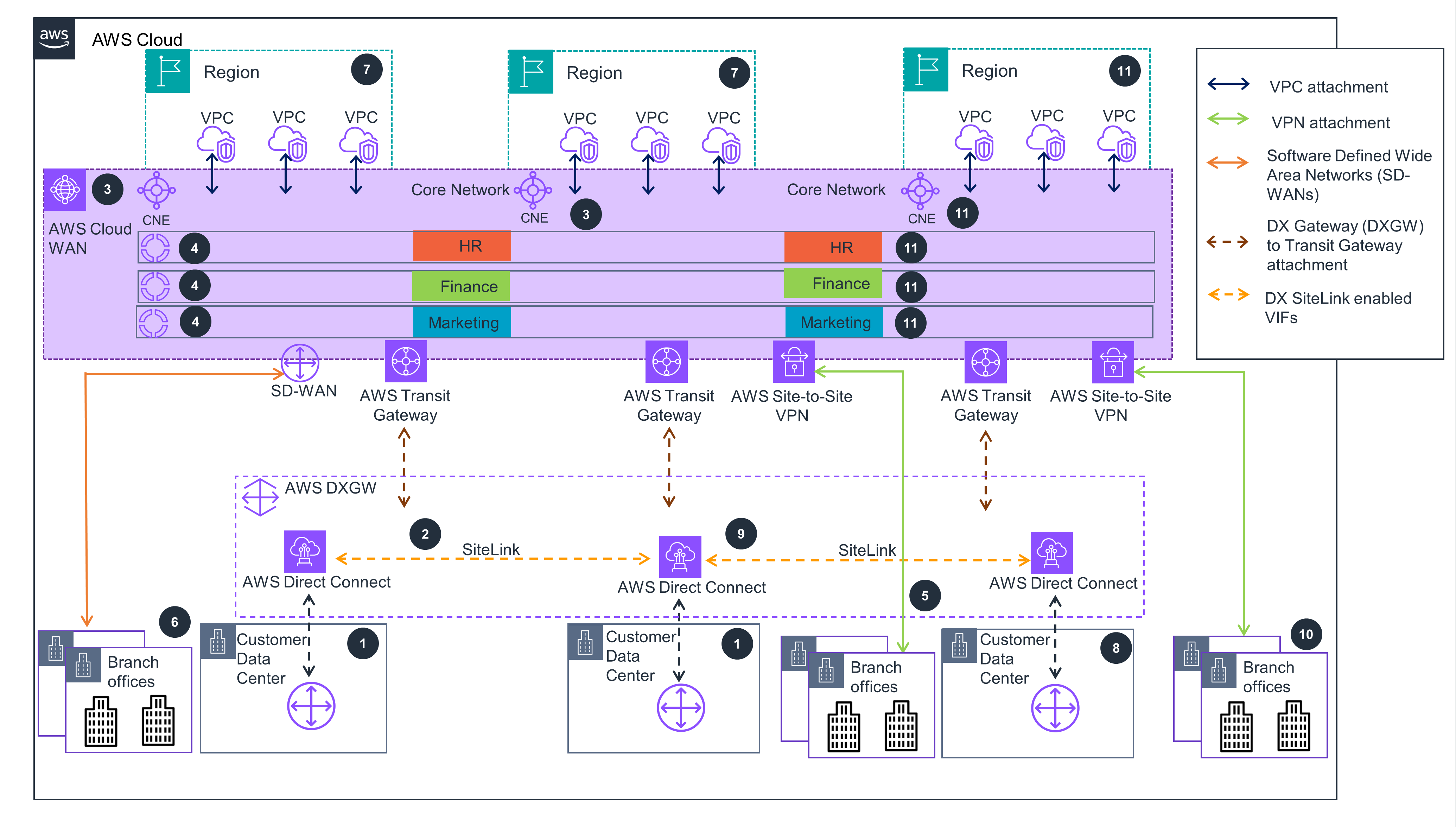

Expand your enterprise WAN footprint

Figure 4: Guidance deploys Cloud WAN, SiteLink, Transit Gateway, Site-to-Site VPN, and SD-WAN to connect new locations to your global enterprise WAN running on AWS

Create an AWS Direct Connect (DX) connection from each data center (DC) to the chosen DX Location.

Once physical connectivity is established, create a Virtual Interface (VIF) for each DX connection, with all VIFs terminating on the same DX Gateway (DXGW). This ensures that a Border Gateway Protocol (BGP) session is established between the DC router(s) and AWS. Turn on SiteLink on each VIF you created, then test the connectivity between data centers over SiteLink.

Set up connectivity within your AWS environment using AWS Cloud WAN. In AWS Cloud WAN, start by defining your global network, which is a single, private network that acts as the high-level container for your network objects, and the core network, which is the part of your global network managed by AWS.

In the core network policy, specify the AWS Regions where the Core Network Edges (CNEs) will be deployed and the Autonomous System Number (ASN) range for the CNEs. Follow the Guidance for Automating Amazon VPC Routing in a Global Cloud WAN Deployment for details and sample code to deploy a Cloud WAN network.

Create a Site-to-Site VPN attachment from each branch office location that requires IPsec connectivity into the physically closest AWS Region.

Create Connect attachments (for attaching Software-Defined Wide Area Networks (SD-WANs)) from branch offices that require Generic Routing Encapsulation (GRE) connectivity to an SD-WAN appliance deployed in the closest AWS Region.

Create the network segments to ensure traffic segregation, and create attachment policies to ensure the attachments map to the correct segment. Create VPC, virtual private network (VPN), AWS Direct Connect, and Transit Gateway route table attachments to connect the respective resources to the core network created in Step 3.

New data centers or remote sites can be added to the network by creating a DX connection to the chosen DX Location.

Once the physical DX connection is established, create a VIF terminating on the same DXGW as the VIFs created in Step 2. Ensure you enable SiteLink for the new VIF created over the new DX connection. This creates connectivity between the data centers connected through DX.

When adding a new branch office using Site-to-Site VPN or AWS Direct Connect attachments, create the necessary attachment in the closest AWS Region.

You can also extend your global network footprint by hosting workloads in newer AWS Regions closer to your end customers simply by updating your network policy to include the CNEs deployed in the respective AWS Region. Next, create the necessary attachments and attachment policies for the new Regions to associate the respective attachments to their associated network segments.
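Extending the core network to a new Region amounts to adding an edge location to the existing policy. The sketch below shows that change on a policy document held as a Python dictionary. It is a minimal sketch under stated assumptions: the starting policy, ASN range, and Region names are hypothetical, `add_edge_location` is an illustrative helper, and the field names follow the Cloud WAN policy document format and should be checked against the current policy reference.

```python
import copy


def add_edge_location(policy, region):
    """Return a copy of the policy with region added as an edge location."""
    updated = copy.deepcopy(policy)
    edges = updated["core-network-configuration"]["edge-locations"]
    # Leave the list unchanged if the Region is already part of the core network.
    if all(edge["location"] != region for edge in edges):
        edges.append({"location": region})
    return updated


# Hypothetical existing policy, reduced to the fields this sketch touches.
current_policy = {
    "version": "2021.12",
    "core-network-configuration": {
        "asn-ranges": ["64512-64555"],
        "edge-locations": [{"location": "us-east-1"}, {"location": "eu-west-1"}],
    },
    "segments": [{"name": "sales"}],
}

new_policy = add_edge_location(current_policy, "ap-southeast-1")
```

Returning a modified copy keeps the current policy intact, so the two documents can be compared before the new version is submitted and applied to the core network.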