Guidance for EKS Cell-Based Architecture for High Availability on Amazon EKS

Summary: This implementation guide provides an overview of the Guidance for EKS Cell-Based Architecture for High Availability on Amazon EKS, its reference architecture and components, considerations for planning the deployment, and configuration steps for deploying the Guidance to Amazon Web Services (AWS). This guide is intended for solution architects, business decision makers, DevOps engineers, data scientists, and cloud professionals who want to implement the Guidance in their environment.

Overview

The EKS Cell-Based Architecture for High Availability is a resilient deployment pattern that distributes Amazon EKS workloads across multiple isolated “cells,” with each cell confined to a single Availability Zone (AZ). This architecture enhances application availability by eliminating cross-AZ dependencies and providing isolation boundaries that prevent failures in one AZ from affecting others.

By deploying independent EKS clusters in each AZ and using intelligent traffic routing, this pattern creates a highly available system that can withstand AZ failures while maintaining application availability.

Features and Benefits

AZ Isolation: Each EKS cluster (cell) is deployed in a dedicated Availability Zone, eliminating cross-AZ dependencies and preventing cascading failures.

Independent Scaling: Each cell can scale independently based on its specific load and requirements.

Intelligent Traffic Distribution: Uses Route53 weighted routing to distribute traffic across cells, with automatic failover capabilities.

Reduced Blast Radius: Failures in one cell don’t impact others, limiting the scope of potential outages.

Optimized Latency: Eliminates cross-AZ traffic for most operations, reducing latency and AWS data transfer costs.

Improved Deployment Safety: Enables progressive rollouts across cells, reducing the risk of widespread deployment failures.

Cost Optimization: Reduces cross-AZ data transfer costs by keeping traffic within AZs whenever possible.

Consistent Infrastructure: Uses Terraform to ensure consistent configuration across all cells.

Use cases

Mission-Critical Applications: For applications that require extremely high availability and cannot tolerate even brief outages.

Microservice Architectures: Complex microservice deployments that benefit from clear isolation boundaries.

High-Traffic Applications: Consumer-facing applications that need to handle large traffic volumes with consistent performance and increased resilience.

Disaster Recovery Solutions: As part of a comprehensive disaster recovery strategy with multiple fallback options.

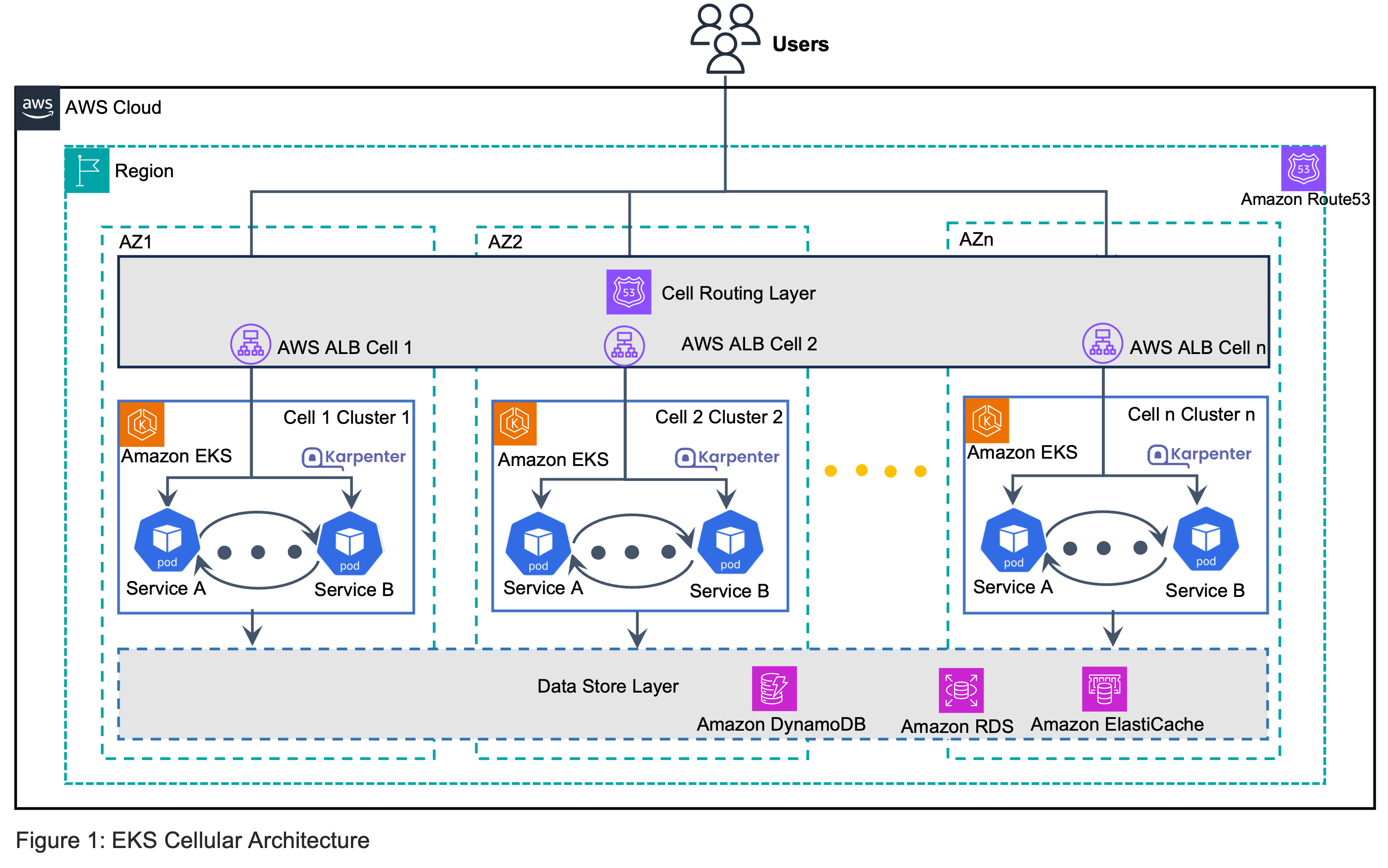

Architecture Overview

This section provides a reference implementation architecture diagram for the components deployed with this Guidance.

The cell-based EKS architecture consists of several key components working together to provide high availability:

VPC Infrastructure: A shared VPC with public and private subnets spanning three Availability Zones, providing the network foundation for the cells.

EKS Cells: Three independent EKS clusters (cell1, cell2, cell3), each deployed in a separate Availability Zone. Each cell includes:

- An EKS cluster with managed node groups

- Node instances confined to a single AZ

- AWS Load Balancer Controller for managing ingress traffic

- Karpenter for node auto-scaling

Application Deployment: Each cell hosts identical application deployments, ensuring consistent behavior across cells. The example uses simple NGINX containers, but this can be replaced with any containerized application.

Ingress Layer: Each cell has its own Application Load Balancer (ALB) created by the AWS Load Balancer Controller, with support for:

- HTTPS termination using ACM certificates

- Health checks to monitor application availability

- Cross-zone load balancing disabled to maintain AZ isolation

DNS-Based Traffic Distribution: Route53 provides intelligent traffic routing with:

- Weighted routing policy distributing traffic across all three cells (33/33/34% split)

- Individual DNS entries for direct access to specific cells

- Automatic health checks to detect cell failures

Security Components:

- IAM roles and policies for secure cluster operations

- Security groups controlling network access

- HTTPS enforcement with TLS 1.3 support

This architecture ensures that if any single Availability Zone fails, traffic automatically routes to the remaining healthy cells, maintaining application availability without manual intervention. The cell-based approach provides stronger isolation than traditional multi-AZ deployments, as each cell operates independently with its own control plane and data plane resources.
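The failover behavior described above can be illustrated with a little arithmetic. With weighted routing, a record's share of traffic is its weight divided by the sum of the weights of the records currently considered healthy. The following is a minimal sketch using the 33/33/34 weights from this guide; the function name `effective_share` is hypothetical and not part of the Guidance code.

```shell
#!/usr/bin/env bash
# Sketch: share of traffic one weighted record receives among the records
# that are currently healthy. effective_share is a hypothetical helper.
# Usage: effective_share WEIGHT HEALTHY_WEIGHT...
effective_share() {
  local weight=$1; shift          # weight of the record of interest
  local total=0 w
  for w in "$@"; do               # weights of all healthy records, including this one
    total=$((total + w))
  done
  if [ "$total" -eq 0 ]; then
    echo "no healthy records" >&2
    return 1
  fi
  LC_ALL=C awk -v w="$weight" -v t="$total" 'BEGIN { printf "%.1f\n", (w * 100) / t }'
}

echo "all cells healthy, cell1 share: $(effective_share 33 33 33 34)%"
echo "one 33-weight cell down, cell1 share: $(effective_share 33 33 34)%"
echo "one 33-weight cell down, cell3 share: $(effective_share 34 33 34)%"
```

When one of the 33-weight cells is removed from rotation, the remaining two cells each take roughly half of the traffic, so each cell should be sized with headroom for about 50% of total load rather than 33%.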

Architecture Diagram

The reference architecture for this Guidance and its deployment steps are shown below.

Figure 1: Guidance for EKS Cell-Based Architecture for High Availability - Reference Architecture

Architecture Steps

- Environment Configuration

- DevOps engineer defines environment-specific configuration in a Terraform variable file (terraform.tfvars)

- This configuration controls all aspects of the cell-based architecture deployment

- Parameters include AWS region, domain name, certificate ARN, and Route53 zone ID

- VPC Infrastructure Deployment

- A shared VPC is provisioned across three Availability Zones following AWS best practices

- Public subnets are configured for internet-facing load balancers

- Private subnets are configured for EKS nodes with proper tagging for Kubernetes integration

- NAT Gateway is deployed to enable outbound internet access for private resources

- EKS Cluster Provisioning

- Three independent EKS clusters (cells) are deployed, each confined to a single Availability Zone

- Each cluster uses EKS version 1.30 with managed node groups for initial capacity

- Initial managed node groups are configured with instance type m5.large in each cell’s specific AZ

- IAM roles and security groups are created with least-privilege permissions

- Cluster Add-ons Deployment

- Core EKS add-ons (CoreDNS, VPC CNI, kube-proxy) are deployed to each cluster

- AWS Load Balancer Controller is installed in each cell with cell-specific configuration

- Karpenter is deployed for dynamic node auto-scaling beyond the initial managed node groups

- Each add-on is configured with appropriate IAM roles and service accounts

- Application Deployment

- Identical application deployments are created in each cell using Kubernetes resources

- Node selectors ensure pods are scheduled only on nodes in the cell’s specific AZ

- Kubernetes Services are created to expose applications within each cluster

- Resource limits and health checks are configured for application reliability

- Ingress Configuration

- Application Load Balancers (ALBs) are provisioned for each cell using AWS Load Balancer Controller

- Ingress resources are configured with annotations to:

- Disable cross-zone load balancing to maintain AZ isolation

- Enable TLS termination using the provided ACM certificate

- Configure health checks and routing rules

- Each ALB is tagged with cell-specific identifiers for management

- DNS-Based Traffic Distribution

- Route53 records are created for direct access to each cell (cell1.example.com, cell2.example.com, cell3.example.com)

- Weighted routing policy is configured to distribute traffic across all three cells (33/33/34% split)

- Health checks are associated with each ALB to enable automatic failover

- DNS TTL values are optimized for quick failover response
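To make the weighted records in the DNS step more concrete, the sketch below prints the JSON change batch that the Route 53 `change-resource-record-sets` API accepts for one weighted alias record. This Guidance creates its records through Terraform, so this is only an illustration of the record's shape. The function name, the record name `app.example.com`, and the ALB DNS name and zone ID are all hypothetical placeholders.

```shell
#!/usr/bin/env bash
# Sketch: print a Route 53 change batch for one weighted alias record.
# weighted_alias_change is a hypothetical helper; all argument values are placeholders.
# Usage: weighted_alias_change CELL_ID WEIGHT RECORD_NAME ALB_DNS ALB_ZONE_ID
weighted_alias_change() {
  local cell_id=$1 weight=$2 record_name=$3 alb_dns=$4 alb_zone_id=$5
  cat <<EOF
{
  "Changes": [
    {
      "Action": "UPSERT",
      "ResourceRecordSet": {
        "Name": "${record_name}",
        "Type": "A",
        "SetIdentifier": "${cell_id}",
        "Weight": ${weight},
        "AliasTarget": {
          "HostedZoneId": "${alb_zone_id}",
          "DNSName": "${alb_dns}",
          "EvaluateTargetHealth": true
        }
      }
    }
  ]
}
EOF
}

# One record per cell shares the same Name and differs by SetIdentifier and Weight.
weighted_alias_change cell1 33 app.example.com \
  cell1-alb.example.elb.amazonaws.com ZEXAMPLEALBZONE
```

`SetIdentifier` distinguishes the three records that share one name, and `EvaluateTargetHealth` lets Route 53 skip a record whose alias target is unhealthy. Note that `HostedZoneId` inside `AliasTarget` is the load balancer's own hosted zone ID, not the ID of your domain's hosted zone.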

AWS Services and Components

| AWS Service | Role | Description |

|---|---|---|

| Amazon VPC | Network Foundation | Provides isolated network infrastructure with public and private subnets across three AZs, enabling secure communication between components |

| Amazon EKS | Container Orchestration | Manages three independent Kubernetes clusters (one per AZ), each serving as an isolated “cell” for application workloads |

| EC2 Managed Node Groups | Initial Compute Capacity | Provides the baseline compute capacity for each cell, hosting critical system pods (CoreDNS, AWS Load Balancer Controller) and initial application workloads; configured with m5.large instances in specific AZs |

| Karpenter | Dynamic Auto-scaling | Automatically provisions additional nodes based on workload demands, handling application scaling beyond the capacity of managed node groups; optimized for efficient resource utilization |

| AWS Load Balancer Controller | Traffic Management | Creates and manages Application Load Balancers based on Kubernetes Ingress resources in each cell; runs on managed node group instances |

| Application Load Balancer (ALB) | Load Distribution | Distributes incoming application traffic to Kubernetes services within each cell, with TLS termination and health checks |

| Amazon Route53 | DNS Management | Implements weighted routing (33/33/34%) across cells and provides direct access to individual cells via DNS; enables automatic failover during AZ outages |

| AWS Certificate Manager (ACM) | TLS Certificate Management | Provides and manages TLS certificates for secure HTTPS connections to applications via ALBs |

| AWS IAM | Access Control | Manages permissions for EKS clusters, add-ons, and service accounts using least-privilege principles |

| Amazon ECR | Container Registry | Stores container images used by applications and add-ons |

| AWS CloudWatch | Monitoring | Collects metrics, logs, and events from EKS clusters and related AWS resources |

| NAT Gateway | Outbound Connectivity | Enables private subnet resources to access the internet for updates and external services |

| Security Groups | Network Security | Controls inbound and outbound traffic to EKS nodes and other resources |

| EKS Add-ons | Cluster Extensions | Provides essential functionality including: CoreDNS for service discovery (runs on managed node groups), VPC CNI for pod networking (runs on all nodes), Kube-proxy for network routing (runs on all nodes) |

| Terraform | Infrastructure as Code | Automates the provisioning and configuration of all AWS resources in a repeatable manner |

Plan your deployment

Cost

The EKS Cell-Based Architecture provides high availability but requires careful cost management due to its multi-cluster design. Below are key cost factors and optimization strategies:

Primary Cost Components

| AWS Service | Dimensions | Cost per Month (USD) |

|---|---|---|

| EKS Clusters | $0.10/hour × 3 clusters × 720 hours | $216.00 |

| EC2 Instances | 6 × m5.large instances × 720 hours × $0.096/hour | $414.72 |

| Application Load Balancers | $0.0225/hour × 3 ALBs × 720 hours | $48.60 |

| ALB Data Processing | $0.008/LCU-hour (variable based on traffic) | $10-50+ |

| NAT Gateway - Hourly | $0.045/hour × 720 hours | $32.40 |

| NAT Gateway - Data Processing | $0.045/GB processed (variable) | $20-100+ |

| Route53 - Hosted Zone | $0.50/hosted zone | $0.50 |

| Route53 - DNS Queries | $0.40/million queries (first 1B queries per month) | $1-5 |

| EBS Storage | gp3 volumes for EKS nodes (~20GB per node) | $9.60 |

| CloudWatch Logs | $0.50/GB ingested + $0.03/GB stored | $5-20 |

| CloudWatch Metrics | Custom metrics beyond free tier | $2-10 |

Cost Summary

| Cost Type | Monthly Range (USD) |

|---|---|

| Fixed Costs | $721.82 |

| Variable Costs | $63.50 - $315+ |

| Total Estimated Range | $785 - $1,037+ |
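The fixed-cost figure can be reproduced from the hourly rates in the Primary Cost Components table. The sketch below assumes a 720-hour month throughout, and it assumes the EBS line is 6 nodes × 20 GB at $0.08 per GB-month for gp3, which is inferred from the $9.60 in the table rather than stated in the source. The function name is hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: recompute the fixed monthly cost with one consistent 720-hour month.
# fixed_monthly_cost is a hypothetical helper; rates are taken from the table above.
fixed_monthly_cost() {
  LC_ALL=C awk 'BEGIN {
    hours = 720
    eks = 0.10   * 3 * hours    # 3 EKS cluster control planes
    ec2 = 0.096  * 6 * hours    # 6 m5.large On-Demand instances
    alb = 0.0225 * 3 * hours    # 3 ALBs, hourly charge only
    nat = 0.045  * hours        # 1 NAT gateway, hourly charge only
    r53 = 0.50                  # 1 hosted zone
    ebs = 0.08 * 20 * 6         # assumed: $0.08/GB-month gp3, 20 GB x 6 nodes
    printf "%.2f\n", eks + ec2 + alb + nat + r53 + ebs
  }'
}

echo "Fixed monthly cost (USD): $(fixed_monthly_cost)"
```

Changing `hours`, the instance count, or the rates in one place makes it easy to re-estimate the baseline for a different Region or node group size. Variable costs (data processing, DNS queries, logs) depend on traffic and are not modeled here.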

Cost Optimization Considerations

- Leverage Karpenter efficiently: Configure appropriate NodePools to optimize instance selection

- Right-size your managed node groups: Start with smaller instance types and scale up if needed

- Use Spot Instances: Configure Karpenter to use Spot Instances for further cost optimization

- Monitor and analyze costs: Use AWS Cost Explorer to identify optimization opportunities

- Consider Savings Plans or Reserved Instances: For baseline capacity if workloads are stable

This architecture prioritizes high availability over cost optimization. Use the AWS Pricing Calculator to estimate costs for your specific deployment.

Security

When you build systems on AWS infrastructure, security responsibilities are shared between you and AWS. This shared responsibility model reduces your operational burden because AWS operates, manages, and controls the components including the control plane host operating system, the virtualization layer, and the physical security of the facilities in which the services operate. For more information about AWS security, visit AWS Cloud Security.

The EKS Cell-Based Architecture implements multiple layers of security to protect your applications and infrastructure. Here are the key security components and considerations:

Network Security

- VPC Isolation: Each deployment uses a dedicated VPC with proper subnet segmentation

- Private Subnets: EKS nodes run in private subnets with no direct internet access

- Security Groups: Restrictive security groups control traffic flow between components

- TLS Encryption: All external traffic is encrypted using TLS with certificates from AWS Certificate Manager

- HTTPS Support: HTTPS listeners are configured on all Application Load Balancers

Access Control

- IAM Integration: EKS clusters use AWS IAM for authentication and authorization

- RBAC: Kubernetes Role-Based Access Control restricts permissions within clusters

- Least Privilege: Service accounts use IAM roles with minimal required permissions

- EKS Access Entries: Properly configured for node access with minimal permissions

Kubernetes Security

- Pod Security: Node selectors ensure pods run only in their designated Cells / Availability Zones

- Resource Isolation: Resource limits prevent pods from consuming excessive resources

- Health Checks: Liveness probes detect and restart unhealthy containers

- Image Security: Amazon ECR stores container images in an encrypted repository and provides vulnerability scanning to identify security issues in your container images.

Additional Security Considerations

- Regularly update and patch EKS clusters, worker nodes, and container images.

- Implement network policies to control pod-to-pod communication within the cluster.

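- Use Pod Security Standards, enforced through Pod Security Admission, to apply security best practices to pods. Pod Security Policies were removed in Kubernetes 1.25 and are not available on current EKS versions.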

- Implement proper logging and auditing mechanisms for both AWS and Kubernetes resources.

- Regularly review IAM and Kubernetes RBAC permissions, and rotate credentials.

Supported AWS Regions

The core components of the Guidance for EKS Cell-Based Architecture are available in all AWS Regions where Amazon EKS is supported.

Quotas

NOTICE: Service quotas, also referred to as limits, are the maximum number of service resources or operations for your AWS account.

Quotas for AWS services in this Guidance

Ensure you have sufficient quota for each of the AWS services utilized in this guidance. For more details, refer to AWS service quotas.

If you need to view service quotas across all AWS services within the documentation, you can conveniently access this information in the Service endpoints and quotas page in the PDF.

For specific implementation quotas, consider the following key components and services used in this guidance:

- Amazon EKS: Ensure that your account has sufficient quotas for Amazon EKS clusters, node groups, and related resources.

- Amazon EC2: Verify your EC2 instance quotas, as EKS node groups rely on these.

- Amazon VPC: Check your VPC quotas, including subnets and Elastic IPs, to support the networking setup.

- Amazon EBS: Ensure your account has sufficient EBS volume quotas for persistent storage.

- IAM Roles: Verify that you have the necessary quota for IAM roles, as these are critical for securing your EKS clusters.

Key Quota Considerations for Cell-Based Architecture

| Service | Resource | Default Quota | Architecture Usage |

|---|---|---|---|

| Amazon EKS | Clusters per Region | 100 | 3 clusters per deployment |

| Amazon EKS | Nodes per Managed Node Group | 450 | Varies based on workload |

| Amazon EC2 | On-Demand Instances | Varies by type | At least 2 instances per cell |

| Elastic Load Balancing | Application Load Balancers | 50 per Region | 3 ALBs per deployment |

| Amazon VPC | VPCs per Region | 5 | 1 VPC per deployment |

| Route 53 | Records per Hosted Zone | 10,000 | 6 records per deployment |

| IAM | Roles per Account | 1,000 | Multiple roles per deployment |

To request a quota increase, use the Service Quotas console or contact AWS Support.
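Before deploying, it can help to compare each quota against what is already in use plus what this Guidance adds. The following is a minimal sketch of that comparison; `quota_headroom` is a hypothetical helper, and the in-use numbers in the example calls are made up for illustration.

```shell
#!/usr/bin/env bash
# Sketch: check whether a quota leaves room for the resources this Guidance adds.
# quota_headroom is a hypothetical helper.
# Usage: quota_headroom LIMIT IN_USE NEEDED
quota_headroom() {
  local limit=$1 in_use=$2 needed=$3
  local free=$((limit - in_use))
  if [ "$free" -ge "$needed" ]; then
    echo "ok (free: $free, needed: $needed)"
  else
    echo "insufficient (free: $free, needed: $needed)"
    return 1
  fi
}

# EKS clusters per Region: default quota 100, this Guidance needs 3.
# The in-use count could be obtained with, for example:
#   aws eks list-clusters --query 'length(clusters)' --output text
quota_headroom 100 12 3

# VPCs per Region: default quota 5, this Guidance needs 1.
quota_headroom 5 5 1 || echo "request a VPC quota increase before deploying"
```

The VPC quota is the one most likely to block a deployment in an account that already hosts several environments, because its default is low compared with the others in the table.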

Deploy the Guidance

Prerequisites

Before deploying this solution, you need:

- AWS CLI configured with appropriate permissions

- Terraform installed (version 1.0 or later)

- kubectl installed

- An existing Route53 hosted zone - You’ll need the zone ID

- An existing ACM certificate - You’ll need the certificate ARN that covers your domain and subdomains

Phase 1: Set Required Variables

Create a terraform.tfvars file to set the following variables when running Terraform:

# Required variables

route53_zone_id = "YOUR_ROUTE53_ZONE_ID" # Your route53 zone id

acm_certificate_arn = "YOUR_ACM_CERTIFICATE_ARN" # Your ACM certificate ARN. The certificate must be in the same region as the EKS cell infrastructure.

domain_name = "example.com" # Your domain name

region = "us-west-2" # Your AWS deployment region (e.g., us-west-2, us-east-1, eu-west-1). Use a region with at least 3 AZs, one per cell.
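A quick pre-flight check can catch a missing variable before `terraform apply` fails partway through. The sketch below looks for the four variables listed above in a tfvars file; the function name `missing_tfvars` is hypothetical, and the check is a simple line match, not a full HCL parser.

```shell
#!/usr/bin/env bash
# Sketch: list required variables that a tfvars file does not assign.
# missing_tfvars is a hypothetical helper; it only matches "<name> =" at line start.
missing_tfvars() {
  local file=$1 var
  for var in route53_zone_id acm_certificate_arn domain_name region; do
    grep -Eq "^[[:space:]]*${var}[[:space:]]*=" "$file" || echo "$var"
  done
}

# Usage: run from the directory that holds terraform.tfvars.
if [ -f terraform.tfvars ]; then
  missing=$(missing_tfvars terraform.tfvars)
  if [ -z "$missing" ]; then
    echo "all required variables are set"
  else
    echo "missing variables:"
    echo "$missing"
  fi
fi
```

The check does not validate values, so placeholders such as `YOUR_ROUTE53_ZONE_ID` still pass; replace them with real values before deploying.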

Phase 2: VPC Deployment (~ 5 minutes)

First, we’ll initialize Terraform and deploy the VPC infrastructure:

#Initialize Terraform

terraform init

# Deploy VPC

terraform apply -target="module.vpc" -auto-approve

Verification: Verify that the VPC has been created.

# Validate VPC creation

aws ec2 describe-vpcs --filters "Name=tag:Name,Values=*cell*" --query 'Vpcs[*].[VpcId,CidrBlock,State]' --output table

Expected Output:

| VpcId | CidrBlock | State |

|--------------|-----------------|----------|

| vpc-0abc123 | 10.0.0.0/16 | available|

Phase 3: EKS Cluster Deployment (~ 15 minutes)

Next, we’ll deploy the EKS clusters across three cells:

# Deploy EKS clusters

terraform apply -target="module.eks_cell1" -target="module.eks_cell2" -target="module.eks_cell3" -auto-approve

Verification: Confirm EKS clusters are created

for cluster in eks-cell-az1 eks-cell-az2 eks-cell-az3; do echo "Checking $cluster..."; aws eks describe-cluster --name $cluster --query 'cluster.[name,status,version,endpoint]' --output text; done

Expected Output:

Checking eks-cell-az1...

eks-cell-az1 ACTIVE 1.31 https://50CD38C9E00.gr7.us-east-1.eks.amazonaws.com

Checking eks-cell-az2...

eks-cell-az2 ACTIVE 1.31 https://D10B516C7C7.gr7.us-east-1.eks.amazonaws.com

Checking eks-cell-az3...

eks-cell-az3 ACTIVE 1.31 https://E1C27401FCD.gr7.us-east-1.eks.amazonaws.com
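Because each cell is meant to be confined to one Availability Zone, it is worth confirming that every node in a cluster reports the same zone. The sketch below reads the standard `topology.kubernetes.io/zone` node label. It assumes kubectl contexts named `eks-cell-az1`, `eks-cell-az2`, and `eks-cell-az3` already exist, matching the context names used by other verification commands in this guide; the function names are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: confirm that all nodes of one cell sit in a single Availability Zone.
# cell_zones and check_cell are hypothetical helpers.

# Print the distinct zones reported by the nodes of one kubectl context.
cell_zones() {
  kubectl --context "$1" get nodes \
    -o jsonpath='{range .items[*]}{.metadata.labels.topology\.kubernetes\.io/zone}{"\n"}{end}' \
    | sort -u
}

# Succeed only when exactly one distinct zone is reported.
check_cell() {
  local zones count
  zones=$(cell_zones "$1")
  count=$(printf '%s\n' "$zones" | grep -c . || true)
  if [ "$count" -eq 1 ]; then
    echo "$1: all nodes in $zones"
  else
    echo "$1: nodes span $count zones" >&2
    return 1
  fi
}

# Usage (requires the kubectl contexts to be configured):
#   for cell in az1 az2 az3; do check_cell "eks-cell-$cell"; done
```

Running the same check again after Karpenter has provisioned extra nodes helps confirm that dynamically added capacity stays in the cell's zone as well.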

Phase 4: Load Balancer Controller Setup (~ 5 minutes)

1. Now we’ll deploy the AWS Load Balancer Controller to manage traffic routing.

# Deploy AWS Load Balancer Controller IAM policy and roles

terraform apply -target="aws_iam_policy.lb_controller" -auto-approve

terraform apply -target="aws_iam_role.lb_controller_role_cell1" -target="aws_iam_role.lb_controller_role_cell2" -target="aws_iam_role.lb_controller_role_cell3" -auto-approve

terraform apply -target="aws_iam_role_policy_attachment.lb_controller_policy_attachment_cell1" -target="aws_iam_role_policy_attachment.lb_controller_policy_attachment_cell2" -target="aws_iam_role_policy_attachment.lb_controller_policy_attachment_cell3" -auto-approve

Verification: Verify the IAM roles and policy attachments created by the commands above.

#Verify IAM Roles

aws iam list-roles --query 'Roles[?contains(RoleName, `lb-controller`) && (contains(RoleName, `cell`) || contains(RoleName, `az1`) || contains(RoleName, `az2`) || contains(RoleName, `az3`))].{RoleName:RoleName,Arn:Arn}' --output table

Expected Output:

-------------------------------------------------------------------------------------------------------------------------------------------------

| ListRoles |

+-----------------------------------------------------------------------+-----------------------------------------------------------------------+

| Arn | RoleName |

+-----------------------------------------------------------------------+-----------------------------------------------------------------------+

| arn:aws:iam::112233445566:role/eks-cell-az1-lb-controller | eks-cell-az1-lb-controller |

| arn:aws:iam::112233445566:role/eks-cell-az2-lb-controller | eks-cell-az2-lb-controller |

| arn:aws:iam::112233445566:role/eks-cell-az3-lb-controller | eks-cell-az3-lb-controller |

+-----------------------------------------------------------------------+-----------------------------------------------------------------------+

#Verify IAM Policy Attachments

for cell in eks-cell-az1 eks-cell-az2 eks-cell-az3; do

echo "Checking policy attachments for $cell-lb-controller:"

aws iam list-attached-role-policies --role-name $cell-lb-controller --query 'AttachedPolicies[*].[PolicyName,PolicyArn]' --output table

done

Expected Output:

Checking policy attachments for eks-cell-az1-lb-controller:

-----------------------------------------------------------------------------------

| ListAttachedRolePolicies |

+----------------------+--------------------------------------------------------+

| PolicyName | PolicyArn |

+----------------------+--------------------------------------------------------+

| eks-cell-lb-controller| arn:aws:iam::112233445566:policy/eks-cell-lb-controller|

+----------------------+--------------------------------------------------------+

Checking policy attachments for eks-cell-az2-lb-controller:

-----------------------------------------------------------------------------------

| ListAttachedRolePolicies |

+----------------------+--------------------------------------------------------+

| PolicyName | PolicyArn |

+----------------------+--------------------------------------------------------+

| eks-cell-lb-controller| arn:aws:iam::112233445566:policy/eks-cell-lb-controller|

+----------------------+--------------------------------------------------------+

Checking policy attachments for eks-cell-az3-lb-controller:

-----------------------------------------------------------------------------------

| ListAttachedRolePolicies |

+----------------------+--------------------------------------------------------+

| PolicyName | PolicyArn |

+----------------------+--------------------------------------------------------+

| eks-cell-lb-controller| arn:aws:iam::112233445566:policy/eks-cell-lb-controller|

+----------------------+--------------------------------------------------------+

2. Deploy the EKS add-ons and EKS access entries.

# Deploy EKS addons including AWS Load Balancer Controller and Karpenter, plus EKS access entries. Access entries are critical for Karpenter nodes to authenticate and join the EKS clusters

terraform apply -target="module.eks_blueprints_addons_cell1" -target="module.eks_blueprints_addons_cell2" -target="module.eks_blueprints_addons_cell3" -target="aws_eks_access_entry.karpenter_node_access_entry_cell1" -target="aws_eks_access_entry.karpenter_node_access_entry_cell2" -target="aws_eks_access_entry.karpenter_node_access_entry_cell3" -auto-approve

Note: The EKS add-ons deployment command pulls images from the Amazon ECR Public repository, which requires authentication when manifests are accessed through the Docker Registry HTTP API. A “401 Unauthorized” response while installing add-ons indicates missing or invalid credentials for Amazon ECR Public. To resolve this error, generate an authentication token with the following command:

aws ecr-public get-login-password --region us-east-1 | docker login --username AWS --password-stdin public.ecr.aws

Verification: Verify the deployed EKS add-ons and access entries.

#Verify deployed EKS Addons

for cell in az1 az2 az3; do echo "=== Cell $cell Add-ons ==="; kubectl --context eks-cell-$cell get pods -n kube-system | grep -E "(aws-load-balancer-controller|karpenter|coredns|aws-node|kube-proxy)"; echo ""; done

Expected Output:

=== Cell az1 Add-ons ===

aws-load-balancer-controller-7df659c98d-l6spp 1/1 Running 0 26h

aws-load-balancer-controller-7df659c98d-sgr66 1/1 Running 0 26h

aws-node-dnnsl 2/2 Running 0 26h

aws-node-lvmxn 2/2 Running 0 26h

coredns-748f495494-cfwzv 1/1 Running 0 26h

coredns-748f495494-g96dp 1/1 Running 0 26h

kube-proxy-829nw 1/1 Running 0 26h

kube-proxy-l6sxr 1/1 Running 0 25h

=== Cell az2 Add-ons ===

aws-load-balancer-controller-6c5c868698-jm8fz 1/1 Running 0 26h

aws-load-balancer-controller-6c5c868698-xnnl8 1/1 Running 0 26h

aws-node-qd2t2 2/2 Running 0 26h

aws-node-xvqb8 2/2 Running 0 25h

coredns-748f495494-2hc49 1/1 Running 0 26h

coredns-748f495494-zfc7x 1/1 Running 0 26h

kube-proxy-gjbvg 1/1 Running 0 25h

kube-proxy-qj4c5 1/1 Running 0 26h

=== Cell az3 Add-ons ===

aws-load-balancer-controller-5f7f88776d-fpwxq 1/1 Running 0 26h

aws-load-balancer-controller-5f7f88776d-m24wf 1/1 Running 0 26h

aws-node-5bjjb 2/2 Running 0 26h

aws-node-qpqzq 2/2 Running 0 25h

coredns-748f495494-bdzrm 1/1 Running 0 26h

coredns-748f495494-lcd7b 1/1 Running 0 26h

kube-proxy-dcjsr 1/1 Running 0 25h

kube-proxy-n2tp4 1/1 Running 0 26h

#Verify EKS Access Entries

echo "Checking EKS clusters and access entries..."; for cell in az1 az2 az3; do echo "Cell $cell: $(aws eks describe-cluster --name eks-cell-$cell --query 'cluster.status' --output text 2>/dev/null || echo 'NOT FOUND') | Access Entries: $(aws eks list-access-entries --cluster-name eks-cell-$cell --query 'length(accessEntries)' --output text 2>/dev/null || echo '0')"; done

Expected Output:

Checking EKS clusters and access entries...

Cell az1: ACTIVE | Access Entries: 4

Cell az2: ACTIVE | Access Entries: 4

Cell az3: ACTIVE | Access Entries: 4

3. Deploy Karpenter NodePools and EC2NodeClasses for dynamic node provisioning in each cell.

# Deploy Karpenter EC2NodeClasses first (NodePools depend on these)

terraform apply -target="kubernetes_manifest.karpenter_ec2nodeclass_cell1" -target="kubernetes_manifest.karpenter_ec2nodeclass_cell2" -target="kubernetes_manifest.karpenter_ec2nodeclass_cell3" -auto-approve

# Then deploy Karpenter NodePools for all cells

terraform apply -target="kubernetes_manifest.karpenter_nodepool_cell1" -target="kubernetes_manifest.karpenter_nodepool_cell2" -target="kubernetes_manifest.karpenter_nodepool_cell3" -auto-approve

Verification: Check the Karpenter installation and resources.

#Verification Command for Karpenter EC2NodeClasses:

for cell in az1 az2 az3; do echo "=== Cell $cell EC2NodeClass ==="; kubectl --context eks-cell-$cell get ec2nodeclass -o wide; echo ""; done

#Verification Command for Karpenter NodePools:

for cell in az1 az2 az3; do echo "=== Cell $cell NodePool ==="; kubectl --context eks-cell-$cell get nodepool -o wide; echo ""; done

Expected Output for EC2NodeClasses:

=== Cell az1 EC2NodeClass ===

NAME READY AGE

eks-cell-az1-nodeclass True 5m

=== Cell az2 EC2NodeClass ===

NAME READY AGE

eks-cell-az2-nodeclass True 5m

=== Cell az3 EC2NodeClass ===

NAME READY AGE

eks-cell-az3-nodeclass True 5m

Expected Output for NodePools:

=== Cell az1 NodePool ===

NAME READY AGE

eks-cell-az1-nodepool True 3m

=== Cell az2 NodePool ===

NAME READY AGE

eks-cell-az2-nodepool True 3m

=== Cell az3 NodePool ===

NAME READY AGE

eks-cell-az3-nodepool True 3m

Phase 5: Application Deployment (~ 5 minutes)

Let’s set up the Kubernetes environment context and variables.

# Set up environment variables and restart AWS Load Balancer Controller pods

source setup-env.sh

source restart-lb-controller.sh

With our infrastructure in place, we’ll deploy sample applications to each cell.

# Deploy Kubernetes deployments, services and ingress resources

terraform apply -target="kubernetes_deployment.cell1_app" -target="kubernetes_deployment.cell2_app" -target="kubernetes_deployment.cell3_app" -auto-approve

terraform apply -target="kubernetes_service.cell1_service" -target="kubernetes_service.cell2_service" -target="kubernetes_service.cell3_service" -auto-approve

terraform apply -target="kubernetes_manifest.cell1_ingress" -target="kubernetes_manifest.cell2_ingress" -target="kubernetes_manifest.cell3_ingress" -auto-approve

# Wait for ALBs to be created by AWS Load Balancer Controller (this may take a few minutes)

echo "Waiting for ALBs to be created by AWS Load Balancer Controller..."

sleep 120

aws elbv2 describe-load-balancers --query 'LoadBalancers[*].[LoadBalancerName,DNSName]' --output table

# Test Karpenter node provisioning by scaling applications

kubectl scale deployment cell1-app --replicas=100 --context $CELL_1

kubectl scale deployment cell2-app --replicas=100 --context $CELL_2

kubectl scale deployment cell3-app --replicas=100 --context $CELL_3

# Wait for Karpenter to provision nodes (3-5 minutes)

echo "Waiting for Karpenter to provision nodes..."

sleep 180

# Check if Karpenter nodes were created

for CELL in $CELL_1 $CELL_2 $CELL_3; do

echo "Checking nodes in $CELL after scaling..."

kubectl get nodes -l node-type=karpenter --context $CELL

done

# Verify applications are scheduled on Karpenter nodes

for CELL in $CELL_1 $CELL_2 $CELL_3; do

echo "Checking pod placement in $CELL..."

kubectl get pods -o wide --context $CELL | grep -E "(cell[1-3]-app|NODE)"

done
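The fixed `sleep 120` above works in most cases, but ALB creation time varies. As an alternative, the sketch below polls the Ingress status until the load balancer hostname appears or a timeout is reached. The function name `wait_for_alb` and its default timeout and interval are hypothetical; the context and Ingress names follow those used elsewhere in this guide.

```shell
#!/usr/bin/env bash
# Sketch: poll an Ingress until its ALB hostname is published, with a timeout.
# wait_for_alb is a hypothetical helper.
# Usage: wait_for_alb CONTEXT INGRESS [TIMEOUT_SECONDS] [INTERVAL_SECONDS]
wait_for_alb() {
  local context=$1 ingress=$2 timeout=${3:-300} interval=${4:-10}
  local waited=0 host
  while [ "$waited" -lt "$timeout" ]; do
    # The hostname field stays empty until the controller has provisioned the ALB.
    host=$(kubectl --context "$context" get ingress "$ingress" \
      -o jsonpath='{.status.loadBalancer.ingress[0].hostname}' 2>/dev/null || true)
    if [ -n "$host" ]; then
      echo "$host"
      return 0
    fi
    sleep "$interval"
    waited=$((waited + interval))
  done
  echo "timed out waiting for $ingress in $context" >&2
  return 1
}

# Usage (requires the kubectl contexts to be configured):
#   wait_for_alb eks-cell-az1 cell1-ingress
#   wait_for_alb eks-cell-az2 cell2-ingress 600 15
```

A published hostname only means the ALB exists; its targets may still be registering, so an HTTP check against the application remains a useful follow-up.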

Verification: Application Deployments

1. Verify deployments across all cells

# Verify deployments across all cells

for cell in az1 az2 az3; do

echo "=== Cell $cell Application Deployment ==="

kubectl --context eks-cell-$cell get deployments -o wide

echo ""

done

Expected Output:

=== Cell az1 Application Deployment ===

NAME READY UP-TO-DATE AVAILABLE AGE CONTAINERS IMAGES SELECTOR

nginx-app 100/100 100 100 10m nginx nginx:latest app=nginx

=== Cell az2 Application Deployment ===

NAME READY UP-TO-DATE AVAILABLE AGE CONTAINERS IMAGES SELECTOR

nginx-app 60/100 100 60 10m nginx nginx:latest app=nginx

=== Cell az3 Application Deployment ===

NAME READY UP-TO-DATE AVAILABLE AGE CONTAINERS IMAGES SELECTOR

nginx-app 100/100 100 100 10m nginx nginx:latest app=nginx

2. Verify Kubernetes services across all cells

for cell in az1 az2 az3; do

echo "=== Cell $cell Service ==="

kubectl --context eks-cell-$cell get services -o wide

# Map az1->cell1, az2->cell2, az3->cell3

service_name="cell${cell#az}-service"

kubectl --context eks-cell-$cell describe service $service_name | grep -E "(Name:|Selector:|Endpoints:|Port:|NodePort:)"

echo ""

done

Expected Output:

=== Cell az1 Service ===

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE SELECTOR

cell1-service NodePort 172.20.150.142 <none> 80:31152/TCP 27h app=cell1-app

kubernetes ClusterIP 172.20.0.1 <none> 443/TCP 28h <none>

Name: cell1-service

Selector: app=cell1-app

Port: <unset> 80/TCP

NodePort: <unset> 31152/TCP

Endpoints: 10.0.1.100:80,10.0.1.101:80+...

=== Cell az2 Service ===

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE SELECTOR

cell2-service NodePort 172.20.150.4 <none> 80:30616/TCP 27h app=cell2-app

kubernetes ClusterIP 172.20.0.1 <none> 443/TCP 28h <none>

Name: cell2-service

Selector: app=cell2-app

Port: <unset> 80/TCP

NodePort: <unset> 30616/TCP

Endpoints: 10.0.2.100:80,10.0.2.101:80+...

=== Cell az3 Service ===

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE SELECTOR

cell3-service NodePort 172.20.79.220 <none> 80:30824/TCP 27h app=cell3-app

kubernetes ClusterIP 172.20.0.1 <none> 443/TCP 28h <none>

Name: cell3-service

Selector: app=cell3-app

Port: <unset> 80/TCP

NodePort: <unset> 30824/TCP

Endpoints: 10.0.3.100:80,10.0.3.101:80+...

3. Verify Ingress resource

# Verify ingress resources and ALB creation (trimmed output)

for cell in az1 az2 az3; do

echo "=== Cell $cell Ingress ==="

kubectl --context eks-cell-$cell get ingress -o wide

# Map az1->cell1, az2->cell2, az3->cell3 for ingress names

case $cell in

az1) ingress_name="cell1-ingress" ;;

az2) ingress_name="cell2-ingress" ;;

az3) ingress_name="cell3-ingress" ;;

esac

# Show only essential details

kubectl --context eks-cell-$cell describe ingress $ingress_name | grep -E "(Name:|Address:|Host.*Path.*Backends)" | head -3

echo ""

done

Expected Output:

=== Cell az1 Ingress ===

NAME CLASS HOSTS ADDRESS PORTS AGE

cell1-ingress <none> cell1.mycelltest.com eks-cell-az1-alb-1084734623.us-east-1.elb.amazonaws.com 80 27h

Rules:

Host Path Backends

---- ---- --------

cell1.mycelltest.com

/ cell1-service:80 (10.0.2.160:80,10.0.5.40:80,10.0.15.119:80 + 197 more...)

Annotations: alb.ingress.kubernetes.io/actions.ssl-redirect:

=== Cell az2 Ingress ===

NAME CLASS HOSTS ADDRESS PORTS AGE

cell2-ingress <none> cell2.mycelltest.com eks-cell-az2-alb-823479339.us-east-1.elb.amazonaws.com 80 27h

Rules:

Host Path Backends

---- ---- --------

cell2.mycelltest.com

/ cell2-service:80 (10.0.19.185:80,10.0.25.184:80,10.0.22.191:80 + 97 more...)

Annotations: alb.ingress.kubernetes.io/actions.ssl-redirect:

=== Cell az3 Ingress ===

NAME CLASS HOSTS ADDRESS PORTS AGE

cell3-ingress <none> cell3.mycelltest.com eks-cell-az3-alb-1319392746.us-east-1.elb.amazonaws.com 80 27h

Rules:

Host Path Backends

---- ---- --------

cell3.mycelltest.com

/ cell3-service:80 (10.0.35.244:80,10.0.40.115:80,10.0.47.244:80 + 147 more...)

Annotations: alb.ingress.kubernetes.io/actions.ssl-redirect:

4. Test Application through ALB

for cell in az1 az2 az3; do

case $cell in

az1) ingress_name="cell1-ingress"; host="cell1.mycelltest.com" ;;

az2) ingress_name="cell2-ingress"; host="cell2.mycelltest.com" ;;

az3) ingress_name="cell3-ingress"; host="cell3.mycelltest.com" ;;

esac

ALB_DNS=$(kubectl --context eks-cell-$cell get ingress $ingress_name -o jsonpath='{.status.loadBalancer.ingress[0].hostname}')

echo "Testing Cell $cell ALB with Host header: $ALB_DNS"

curl -s -H "Host: $host" -o /dev/null -w "HTTP Status: %{http_code}, Response Time: %{time_total}s\n" http://$ALB_DNS/ || echo "ALB not ready yet"

echo ""

done

Expected Output:

Testing Cell az1 ALB with Host header: eks-cell-az1-alb-1084734623.us-east-1.elb.amazonaws.com

HTTP Status: 200, Response Time: 0.009698s

Testing Cell az2 ALB with Host header: eks-cell-az2-alb-823479339.us-east-1.elb.amazonaws.com

HTTP Status: 200, Response Time: 0.005855s

Testing Cell az3 ALB with Host header: eks-cell-az3-alb-1319392746.us-east-1.elb.amazonaws.com

HTTP Status: 200, Response Time: 0.004943s
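
A newly created ALB can take a few minutes to start serving traffic, so a single curl may report a failure that resolves on its own. A minimal sketch of a retry wrapper around the same curl probe. The helper names, attempt count, and delay are assumptions for illustration:

```shell
# Hypothetical helper: run a probe command until it succeeds or attempts run out.
# $1 = max attempts, $2 = seconds to wait between attempts, rest = probe command
retry_until() {
  attempts=$1
  delay=$2
  shift 2
  n=1
  while [ "$n" -le "$attempts" ]; do
    "$@" && return 0
    # Sleep only when another attempt will follow.
    [ "$n" -lt "$attempts" ] && sleep "$delay"
    n=$((n + 1))
  done
  return 1
}

# Probe that succeeds only when the ALB answers 200 for the given Host header.
# $1 = ALB DNS name, $2 = Host header value
alb_returns_200() {
  status=$(curl -s -o /dev/null -w '%{http_code}' -H "Host: $2" "http://$1/")
  [ "$status" = "200" ]
}

# Example (not executed here): up to 30 attempts, 10 seconds apart, for az1
#   retry_until 30 10 alb_returns_200 "$ALB_DNS" "cell1.mycelltest.com" \
#     || echo "ALB still not serving traffic"
```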

Phase 6: Route53 Configuration (~ 2 minutes)

Finally, we’ll configure Route53 records in your existing hosted zone for DNS-based routing across our cells.

# Deploy Route53 records

terraform apply -target="data.aws_lb.cell1_alb" -target="data.aws_lb.cell2_alb" -target="data.aws_lb.cell3_alb" -auto-approve

terraform apply -target="aws_route53_record.cell1_alias" -target="aws_route53_record.cell2_alias" -target="aws_route53_record.cell3_alias" -auto-approve

terraform apply -target="aws_route53_record.main" -target="aws_route53_record.main_cell2" -target="aws_route53_record.main_cell3" -auto-approve

Verification: Verify Route53 records and application functionality

1. Verify Route53 Records

DOMAIN_NAME=$(grep '^domain_name' terraform.tfvars | sed 's/.*=\s*"\([^"]*\)".*/\1/')

HOSTED_ZONE_ID=$(grep '^route53_zone_id' terraform.tfvars | sed 's/.*=\s*"\([^"]*\)".*/\1/')

echo "Domain Name: $DOMAIN_NAME"

echo "Hosted Zone ID: $HOSTED_ZONE_ID"

aws route53 list-resource-record-sets --hosted-zone-id $HOSTED_ZONE_ID \

--query "ResourceRecordSets[?contains(Name,'$DOMAIN_NAME')]" \

--output text

Expected Output:

mycelltest.com. cell1 A 33

ALIASTARGET eks-cell-az1-alb-1084734623.us-east-1.elb.amazonaws.com. True Z35SXDOTRQ7X12

mycelltest.com. cell2 A 33

ALIASTARGET eks-cell-az2-alb-823479339.us-east-1.elb.amazonaws.com. True Z35SXDOTRQ7X12

mycelltest.com. cell3 A 34

ALIASTARGET eks-cell-az3-alb-1319392746.us-east-1.elb.amazonaws.com. True Z35SXDOTRQ7X12

mycelltest.com. 172800 NS

RESOURCERECORDS ns-1695.awsdns-19.co.uk.

RESOURCERECORDS ns-1434.awsdns-51.org.

RESOURCERECORDS ns-296.awsdns-37.com.

RESOURCERECORDS ns-520.awsdns-01.net.

mycelltest.com. 900 SOA

RESOURCERECORDS ns-1695.awsdns-19.co.uk. awsdns-hostmaster.amazon.com. 1 7200 900 1209600 86400

cell1.mycelltest.com. A

ALIASTARGET eks-cell-az1-alb-1084734623.us-east-1.elb.amazonaws.com. True Z35SXDOTRQ7X12

cell2.mycelltest.com. A

ALIASTARGET eks-cell-az2-alb-823479339.us-east-1.elb.amazonaws.com. True Z35SXDOTRQ7X12

cell3.mycelltest.com. A

ALIASTARGET eks-cell-az3-alb-1319392746.us-east-1.elb.amazonaws.com. True Z35SXDOTRQ7X12
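
The weighted records above carry weights of 33, 33, and 34. Route53 sends each weighted record a share of traffic equal to its weight divided by the sum of all weights in the group. A minimal sketch that turns "identifier weight" pairs into those shares. The helper name is hypothetical, and the commented query assumes the set identifiers are cell1, cell2, and cell3 as in the output above:

```shell
# Hypothetical helper: read "<set-identifier> <weight>" lines on stdin and
# print each record's share of traffic (weight / sum of weights).
weight_shares() {
  awk '{ id[NR] = $1; w[NR] = $2; total += $2 }
       END {
         if (total == 0) { print "no weighted records found"; exit 1 }
         for (i = 1; i <= NR; i++)
           printf "%s weight=%d share=%.1f%%\n", id[i], w[i], 100 * w[i] / total
       }'
}

# Example with a live hosted zone (not executed here):
#   aws route53 list-resource-record-sets --hosted-zone-id "$HOSTED_ZONE_ID" \
#     --query "ResourceRecordSets[?Weight].[SetIdentifier, Weight]" \
#     --output text | weight_shares
```

Printing the shares makes it easy to confirm that no cell was left with a weight of 0 after a failover drill.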

2. Verify Application with Route53 records

DOMAIN_NAME=$(awk -F'=' '/^domain_name/ {gsub(/[" ]/, "", $2); gsub(/#.*/, "", $2); print $2}' terraform.tfvars)

echo "Using domain: $DOMAIN_NAME"

echo ""

for cell in az1 az2 az3; do

case $cell in

az1) ingress_name="cell1-ingress"; host="cell1.$DOMAIN_NAME" ;;

az2) ingress_name="cell2-ingress"; host="cell2.$DOMAIN_NAME" ;;

az3) ingress_name="cell3-ingress"; host="cell3.$DOMAIN_NAME" ;;

esac

ALB_DNS=$(kubectl --context eks-cell-$cell get ingress $ingress_name -o jsonpath='{.status.loadBalancer.ingress[0].hostname}')

echo "=== Testing Cell $cell ==="

echo "ALB Direct: $ALB_DNS"

echo "Route53 DNS: $host"

# Test ALB directly with Host header

echo -n "ALB with Host header: "

curl -s -H "Host: $host" -o /dev/null -w "HTTP Status: %{http_code}, Response Time: %{time_total}s\n" http://$ALB_DNS/ || echo "ALB not ready yet"

# Test Route53 DNS name directly

echo -n "Route53 DNS: "

curl -s -o /dev/null -w "HTTP Status: %{http_code}, Response Time: %{time_total}s\n" http://$host/ || echo "DNS not ready yet"

echo ""

done

Expected Output:

Using domain: mycelltest.com

=== Testing Cell az1 ===

ALB Direct: eks-cell-az1-alb-1084734623.us-east-1.elb.amazonaws.com

Route53 DNS: cell1.mycelltest.com

ALB with Host header: HTTP Status: 200, Response Time: 0.234s

Route53 DNS: HTTP Status: 200, Response Time: 0.187s

=== Testing Cell az2 ===

ALB Direct: eks-cell-az2-alb-823479339.us-east-1.elb.amazonaws.com

Route53 DNS: cell2.mycelltest.com

ALB with Host header: HTTP Status: 200, Response Time: 0.198s

Route53 DNS: HTTP Status: 200, Response Time: 0.156s

=== Testing Cell az3 ===

ALB Direct: eks-cell-az3-alb-1319392746.us-east-1.elb.amazonaws.com

Route53 DNS: cell3.mycelltest.com

ALB with Host header: HTTP Status: 200, Response Time: 0.201s

Route53 DNS: HTTP Status: 200, Response Time: 0.143s

Scale back down to original replicas

# Scale back down to original replicas

kubectl scale deployment cell1-app --replicas=2 --context $CELL_1

kubectl scale deployment cell2-app --replicas=2 --context $CELL_2

kubectl scale deployment cell3-app --replicas=2 --context $CELL_3
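
The three scale commands can also be written as a loop. A minimal sketch, assuming the kubectl contexts are named eks-cell-az1 through eks-cell-az3 (as in the verification commands) rather than held in $CELL_1 through $CELL_3. The helper names and the DRY_RUN switch are hypothetical:

```shell
# Hypothetical wrapper: print the command when DRY_RUN=1, otherwise run it.
run() {
  if [ "${DRY_RUN:-0}" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}

# Scale the sample deployment in every cell to the requested replica count.
# $1 = replicas. Assumes contexts eks-cell-azN and deployments cellN-app.
scale_cells() {
  for cell in az1 az2 az3; do
    run kubectl --context "eks-cell-$cell" scale deployment \
      "cell${cell#az}-app" --replicas="$1"
  done
}

# Example: preview the commands first, then run them for real.
#   DRY_RUN=1; scale_cells 2
#   DRY_RUN=0; scale_cells 2
```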

Uninstall the Guidance

The uninstall process removes all components of the cell-based architecture in the correct order to handle resource dependencies. This includes:

- 3 EKS Clusters: eks-cell-az1, eks-cell-az2, eks-cell-az3

- Kubernetes Resources: Applications, services, and ingress configurations

- Application Load Balancers: ALBs created by AWS Load Balancer Controller

- Route53 DNS Records: Cell routing and weighted distribution records

- IAM Resources: Service roles and policies for controllers and access

- VPC Infrastructure: Networking components, subnets, and security groups

- CloudWatch Resources: Log groups and monitoring data

Verify Current Deployment

Run the verification script to understand what resources currently exist:

# Run the resource verification script from the source directory

./verify-resources-before-cleanup.sh

This will show you all existing resources including EKS clusters, ALBs, Route53 records, IAM roles, and VPC components.

Run Cleanup with Confirmation Prompts

# Run the cleanup script from the source directory

./cleanup-infrastructure.sh

The script includes safety checks and will prompt for confirmation before proceeding with the irreversible deletion process.

Monitor the Cleanup Process

The cleanup script proceeds through several stages:

- Network Connectivity Check - Verifies AWS CLI configuration and connectivity

- Kubeconfig Updates - Updates kubectl contexts for all three cells

- Kubernetes Resources Cleanup - Removes ingress, services, and deployments

- Terraform State Cleanup - Removes problematic resources from state

- Terraform Resource Destruction - Destroys resources in dependency order:

  - Kubernetes resources

  - Route53 records

  - EKS blueprint addons

  - IAM roles and policies

  - EKS clusters

  - VPC and remaining resources
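
The key property of the destruction stage is ordering: each group of resources is removed only after the groups that depend on it are gone, and a failure stops the run. A minimal sketch of that control flow. It is not the actual cleanup-infrastructure.sh; the stage functions are placeholders invented for illustration:

```shell
# Hypothetical sketch: run cleanup stages strictly in order and stop at the
# first failure, so later stages never run against half-removed dependencies.
run_stages() {
  for stage in "$@"; do
    echo "==> $stage"
    if ! "$stage"; then
      echo "stage failed: $stage" >&2
      return 1
    fi
  done
}

# Placeholder stages; a real script would call kubectl or terraform here.
destroy_kubernetes_resources() { echo "removing ingress, services, deployments"; }
destroy_route53_records()      { echo "removing Route53 records"; }
destroy_eks_clusters()         { echo "removing EKS clusters"; }

# Example:
#   run_stages destroy_kubernetes_resources destroy_route53_records \
#     destroy_eks_clusters
```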

Important Notes

Data Backup

- No persistent data is stored in the default cell-based architecture

- If you’ve added persistent volumes or databases, back them up before uninstalling

- Application logs in CloudWatch will be deleted during cleanup

DNS Considerations

- The Route53 hosted zone is NOT deleted; only the A records for the cells are removed

- Domain ownership remains with you

- DNS propagation may take time to reflect changes

Cost Implications

- Uninstall stops all recurring charges for EKS, EC2, ALB, and other resources

- Route53 hosted zone charges continue

- CloudWatch log storage charges stop after log groups are deleted

Recovery

- Uninstall is irreversible - you cannot recover the exact same infrastructure

- Re-deployment is possible using the same Terraform configuration

- New resource identifiers will be generated (new ALB DNS names, etc.)

Troubleshooting

IAM Role Trust Relationship Issues

Symptoms:

WebIdentityErr: failed to retrieve credentials

AccessDenied: Not authorized to perform sts:AssumeRoleWithWebIdentity

Root Cause: IAM role trust relationship is not properly configured for OIDC provider.

Verification:

# Check trust relationship

aws iam get-role --role-name eks-cell-az1-lb-controller --query 'Role.AssumeRolePolicyDocument' --output json

# Verify OIDC provider exists

OIDC_PROVIDER=$(aws eks describe-cluster --name eks-cell-az1 --query "cluster.identity.oidc.issuer" --output text | sed 's|https://||')

aws iam list-open-id-connect-providers | grep $OIDC_PROVIDER

Solution: The trust relationship should include the correct OIDC provider and service account:

{

"Version": "2012-10-17",

"Statement": [

{

"Effect": "Allow",

"Principal": {

"Federated": "arn:aws:iam::ACCOUNT_ID:oidc-provider/OIDC_PROVIDER"

},

"Action": "sts:AssumeRoleWithWebIdentity",

"Condition": {

"StringEquals": {

"OIDC_PROVIDER:sub": "system:serviceaccount:kube-system:aws-load-balancer-controller",

"OIDC_PROVIDER:aud": "sts.amazonaws.com"

}

}

}

]

}
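
The ACCOUNT_ID and OIDC_PROVIDER placeholders in the policy above must be replaced with real values, and the provider must appear without the https:// prefix. A minimal sketch that renders the document from those two inputs. The helper name and the trust.json file name are hypothetical, and the commented commands assume the role name eks-cell-az1-lb-controller used in the verification step:

```shell
# Hypothetical helper: print the trust policy for the controller role.
# $1 = AWS account ID, $2 = OIDC issuer URL as returned by describe-cluster
render_trust_policy() {
  account_id=$1
  oidc_provider=${2#https://}   # IAM expects the issuer without the scheme
  cat <<EOF
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Principal": {
        "Federated": "arn:aws:iam::${account_id}:oidc-provider/${oidc_provider}"
      },
      "Action": "sts:AssumeRoleWithWebIdentity",
      "Condition": {
        "StringEquals": {
          "${oidc_provider}:sub": "system:serviceaccount:kube-system:aws-load-balancer-controller",
          "${oidc_provider}:aud": "sts.amazonaws.com"
        }
      }
    }
  ]
}
EOF
}

# Example (not executed here):
#   issuer=$(aws eks describe-cluster --name eks-cell-az1 \
#            --query "cluster.identity.oidc.issuer" --output text)
#   account=$(aws sts get-caller-identity --query Account --output text)
#   render_trust_policy "$account" "$issuer" > trust.json
#   aws iam update-assume-role-policy --role-name eks-cell-az1-lb-controller \
#     --policy-document file://trust.json
```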

Karpenter Nodes Not Joining Cluster

Symptoms:

Karpenter nodes in pending state or not appearing

Verification:

# Check Karpenter access entries

for cell in az1 az2 az3; do

echo "=== Cell $cell Access Entries ==="

aws eks list-access-entries --cluster-name eks-cell-$cell --region us-east-1

echo "Total entries: $(aws eks list-access-entries --cluster-name eks-cell-$cell --region us-east-1 --query 'length(accessEntries)' --output text)"

done

Solution: Verify Karpenter node access entries exist with correct ARNs:

# Expected ARN pattern: arn:aws:iam::ACCOUNT:role/karpenter-eks-cell-az1

# Each cell should have 4 access entries including Karpenter
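
Counting entries shows that something is missing but not what. A minimal sketch that checks for the Karpenter role specifically, assuming the ARN pattern given above. The helper name is hypothetical:

```shell
# Hypothetical helper: succeed if the expected Karpenter role ARN is among
# the access entries supplied on stdin (one ARN per line).
# $1 = cell (az1..az3). Assumes the role name karpenter-eks-cell-azN.
has_karpenter_entry() {
  grep -Eq ":role/karpenter-eks-cell-$1\$"
}

# Example with a live cluster (not executed here):
#   aws eks list-access-entries --cluster-name eks-cell-az1 --region us-east-1 \
#     --query 'accessEntries[]' --output text | tr '\t' '\n' \
#     | has_karpenter_entry az1 || echo "Karpenter access entry missing in az1"
```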

Application Pods Not Scheduling

Symptoms:

Warning FailedScheduling pod/cell1-app-xxx 0/2 nodes are available

Verification:

# Check pod status and node placement

for cell in az1 az2 az3; do

case $cell in

az1) app_label="app=cell1-app" ;;

az2) app_label="app=cell2-app" ;;

az3) app_label="app=cell3-app" ;;

esac

echo "=== Cell $cell Application Pods ==="

kubectl --context eks-cell-$cell get pods -l $app_label -o wide

done

Common Solutions:

- Verify node selectors match available nodes in the correct AZ

- Check resource requests vs available node capacity

- Ensure managed node groups are running in each cell
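
For the first item, each cell's nodes should all report the same zone in the standard topology.kubernetes.io/zone label. A minimal sketch that lists any node outside the expected zone. The helper name is hypothetical, and the zone name us-east-1a for cell az1 is an assumption that should be replaced with the AZ your cell actually uses:

```shell
# Hypothetical helper: print nodes whose zone differs from the expected AZ.
# stdin: "<node-name> <zone>" per line; $1 = expected zone (e.g. us-east-1a)
# A node with no zone label is also reported.
nodes_outside_zone() {
  awk -v zone="$1" '$2 != zone { print $1 }'
}

# Example with a live cluster (not executed here):
#   kubectl --context eks-cell-az1 get nodes \
#     -o jsonpath='{range .items[*]}{.metadata.name}{" "}{.metadata.labels.topology\.kubernetes\.io/zone}{"\n"}{end}' \
#     | nodes_outside_zone us-east-1a
```

Empty output means every node in the cell sits in the expected AZ, so a zone-based node selector is not the reason pods fail to schedule.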

AWS Load Balancer Controller Not Running

Symptoms:

No resources found in kube-system namespace.

Verification:

# Check load balancer controller status

for cell in az1 az2 az3; do

echo "=== Cell $cell Load Balancer Controller ==="

kubectl --context eks-cell-$cell get pods -n kube-system -l app.kubernetes.io/name=aws-load-balancer-controller

done

Common Causes & Solutions:

a) IAM Role Issues:

# Verify IAM roles exist

for cell in az1 az2 az3; do

echo "Checking IAM role for $cell:"

aws iam get-role --role-name eks-cell-$cell-lb-controller --query 'Role.RoleName' --output text 2>/dev/null || echo 'NOT FOUND'

done

b) Policy Attachment Issues:

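# List customer managed policies for the controller (confirms the policy exists)

aws iam list-policies --scope Local --query 'Policies[?contains(PolicyName, `lb-controller`) || contains(PolicyName, `load-balancer`)].{PolicyName:PolicyName,Arn:Arn}' --output table

# Confirm the policy is attached to the controller role (repeat for az2 and az3)

aws iam list-attached-role-policies --role-name eks-cell-az1-lb-controller --output table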

Service Endpoints Not Found

Symptoms:

Error from server (NotFound): services "nginx-service" not found

Root Cause: Service names don’t match the expected naming pattern.

Verification:

# Check actual service names

for cell in az1 az2 az3; do

echo "=== Cell $cell Services ==="

kubectl --context eks-cell-$cell get services -o wide

done

Solution: Use the correct service naming pattern:

# Correct service verification command

for cell in az1 az2 az3; do

case $cell in

az1) service_name="cell1-service" ;;

az2) service_name="cell2-service" ;;

az3) service_name="cell3-service" ;;

esac

echo "=== Cell $cell Service ==="

kubectl --context eks-cell-$cell get services -o wide

kubectl --context eks-cell-$cell describe service $service_name | grep -E "(Name:|Selector:|Endpoints:|Port:|NodePort:)"

done

ALBs Not Being Created After Ingress Deployment

Symptoms:

kubectl get ingress shows ADDRESS field empty after several minutes

Root Cause: AWS Load Balancer Controller cannot create ALBs due to configuration or permission issues.

Verification:

# Check AWS Load Balancer Controller logs

for cell in az1 az2 az3; do

echo "=== Cell $cell Controller Logs ==="

kubectl --context eks-cell-$cell logs -n kube-system -l app.kubernetes.io/name=aws-load-balancer-controller --tail=20

done
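
The last 20 log lines are often mostly informational. A minimal sketch of a filter that keeps only lines that look like failures, assuming the controller writes JSON log lines with a "level" field. The helper name and the list of error strings are assumptions, not an exhaustive set:

```shell
# Hypothetical filter: keep only controller log lines that look like failures.
# Assumes JSON log lines with a "level" field; also matches common AWS
# authorization error strings. Prints nothing when no line matches.
controller_errors() {
  grep -E '"level":"error"|AccessDenied|UnauthorizedOperation' || true
}

# Example with a live cluster (not executed here):
#   kubectl --context eks-cell-az1 logs -n kube-system \
#     -l app.kubernetes.io/name=aws-load-balancer-controller --tail=200 \
#     | controller_errors
```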

Common Solutions:

a) Check Service Account Annotations:

# Verify service account has correct IAM role annotation

for cell in az1 az2 az3; do

echo "=== Cell $cell Service Account ==="

kubectl --context eks-cell-$cell get serviceaccount aws-load-balancer-controller -n kube-system -o yaml | grep -A 2 annotations

done

b) Verify Ingress Annotations:

# Check ingress has required annotations

kubectl --context eks-cell-az1 get ingress cell1-ingress -o yaml | grep -A 10 annotations

c) Check Backend Pods Exist:

# Ensure pods are running that match service selector

for cell in az1 az2 az3; do

case $cell in

az1) app_label="app=cell1-app" ;;

az2) app_label="app=cell2-app" ;;

az3) app_label="app=cell3-app" ;;

esac

echo "=== Cell $cell Backend Pods ==="

kubectl --context eks-cell-$cell get pods -l $app_label

done

Application Load Balancer Returns 404 Errors

Symptoms:

Testing Cell az1 ALB: eks-cell-az1-alb-1084734623.us-east-1.elb.amazonaws.com

HTTP Status: 404, Response Time: 0.005606s

Root Cause: The ALB was created, but the request was sent without the Host header that the ingress rules match on, so no routing rule applies to it.

Solution: Test with proper host headers instead of direct ALB access:

# Extract domain from terraform.tfvars

DOMAIN_NAME=$(awk -F'=' '/^domain_name/ {gsub(/[" ]/, "", $2); gsub(/#.*/, "", $2); print $2}' terraform.tfvars)

# Test with host headers

for cell in az1 az2 az3; do

case $cell in

az1) ingress_name="cell1-ingress"; host="cell1.$DOMAIN_NAME" ;;

az2) ingress_name="cell2-ingress"; host="cell2.$DOMAIN_NAME" ;;

az3) ingress_name="cell3-ingress"; host="cell3.$DOMAIN_NAME" ;;

esac

ALB_DNS=$(kubectl --context eks-cell-$cell get ingress $ingress_name -o jsonpath='{.status.loadBalancer.ingress[0].hostname}')

echo "Testing Cell $cell with Host header:"

curl -s -H "Host: $host" -o /dev/null -w "HTTP Status: %{http_code}\n" http://$ALB_DNS/

done

Notices

Customers are responsible for making their own independent assessment of the information in this Guidance. This Guidance: (a) is for informational purposes only, (b) represents AWS current product offerings and practices, which are subject to change without notice, and (c) does not create any commitments or assurances from AWS and its affiliates, suppliers or licensors. AWS products or services are provided “as is” without warranties, representations, or conditions of any kind, whether express or implied. AWS responsibilities and liabilities to its customers are controlled by AWS agreements, and this Guidance is not part of, nor does it modify, any agreement between AWS and its customers.

Authors

- Raj Bagwe, Senior Solutions Architect, AWS

- Ashok Srirama, Specialist Principal Solutions Architect, AWS

- Daniel Zilberman, Senior Solutions Architect, AWS Tech Solutions