Guidance for Building SaaS applications on Amazon EKS using GitOps

Summary: This Implementation Guide provides an overview of the Guidance for Building SaaS applications on Amazon EKS using GitOps, its reference architecture and components, considerations for planning the deployment, and configuration steps for deploying the guidance to Amazon Web Services (AWS). This guide is intended for solution architects, business decision makers, DevOps engineers, data scientists, and cloud professionals who want to implement Guidance for Building SaaS applications on Amazon EKS using GitOps in their AWS environment.

Overview

This implementation guide provides an overview of the Guidance for Building SaaS applications on Amazon Elastic Kubernetes Service (EKS) using GitOps methodology, its reference architecture and components, considerations for planning the deployment, and configuration steps for deploying the guidance to Amazon Web Services (AWS).

SaaS (Software as a Service) applications provide users with access to software over the internet, eliminating the need for local installation and maintenance. On AWS, SaaS applications can scale resources up or down quickly, benefit from robust security controls, and run with high availability, which minimizes downtime and keeps the service continuously available to users.

Amazon EKS provides native Kubernetes features that enable per-tenant compute isolation through multiple mechanisms, including namespaces, network policies, RBAC, and resource quotas, supporting multi-tenant isolation and efficient resource management. This setup is important for SaaS tiers because it lets resource allocation match the varying demands of different tiers. SaaS tiers are the different levels of service or pricing plans offered by SaaS companies. These tiers typically include varying features, user support, and pricing, allowing customers to choose the package that best fits their needs.

The GitOps approach simplifies SaaS operations by using Git repositories as the single source of truth for managing multiple EKS clusters across different customers and environments. It applies consistent configurations automatically, reduces manual errors, and provides an audit trail, while enabling rapid tenant scaling and fast rollbacks through Git history. Amazon EKS complements this strategy with managed Kubernetes infrastructure that reduces cluster management overhead and integrates with AWS services for monitoring, security, and compliance. This lets SaaS teams focus on product development rather than infrastructure operations, while giving each customer the same reliable, secure experience from development to production.

Features and benefits

The Guidance for Building SaaS applications on Amazon EKS using GitOps provides the following features:

GitOps can significantly benefit SaaS providers by improving deployment speed and reliability, which is crucial for SaaS applications that require frequent updates. GitOps also enhances security and compliance by providing a clear audit trail of changes that shows who made each change and when. This consistency ensures that all environments are configured similarly, reducing errors and improving overall system stability. In this guidance, you will learn how GitOps simplifies SaaS tier onboarding, identity management, and data partitioning, allowing SaaS providers to focus on delivering features quickly and responding to market changes.

Building SaaS applications on Amazon EKS involves several tools that work together to streamline infrastructure management and application deployment. Terraform is used for Infrastructure as Code (IaC), allowing you to define and manage AWS resources like EKS clusters in a declarative way. This ensures consistent and reproducible infrastructure setups, which is crucial for SaaS providers needing to manage multiple environments efficiently. Flux can handle dynamic configurations and automation, ensuring that applications are always up-to-date and consistent across environments. It supports Helm, a package manager which simplifies the deployment of Kubernetes resources by using pre-defined charts, reducing the complexity of managing multiple YAML files.

Flux uses Git repositories as the single source of truth for defining the desired state of Kubernetes resources and applications. It provides a CLI (flux) for managing application state, and it automatically synchronizes changes with the actual state of the EKS cluster through pull-based reconciliation. Argo Workflows provides the orchestration layer that coordinates complex, multi-step operations across multiple EKS clusters, which is essential for SaaS platforms that need to manage hundreds or thousands of tenant environments consistently and reliably. This level of automation and consistency is vital for SaaS providers aiming to deliver high-quality services across various tiers while maintaining operational efficiency.

Use Cases

Here are the top five use cases where SaaS application providers can benefit from using GitOps on Amazon EKS:

Multi-Tenant Management: GitOps helps manage multiple tenants efficiently by ensuring consistent configurations across different environments. This is particularly useful for SaaS providers offering tiered services, as it simplifies the deployment and management of tenant-specific resources.

Automated Deployment and Scaling: GitOps automates the deployment and scaling of applications, allowing SaaS providers to quickly adapt to changing demands across different tiers. This ensures that resources are optimized for each tier, enhancing cost efficiency and performance.

Security and Compliance: GitOps provides a secure and auditable way to manage infrastructure and applications, which is crucial for SaaS providers offering different tiers with varying security requirements. It helps maintain compliance by tracking changes and ensuring that configurations align with security policies.

Consistent Configuration Management: By using GitOps, SaaS providers can ensure that configurations for different tiers are consistent and up-to-date. This reduces errors and improves overall system reliability, which is essential for maintaining high-quality service across tiers.

Efficient Resource Utilization: GitOps helps optimize resource usage by automating the deployment of resources based on defined configurations. This ensures that each tier uses resources efficiently, reducing waste and improving cost management for SaaS providers.

Architecture overview

This repository is organized to facilitate a hands-on learning experience, structured as follows:

- gitops: Contains GitOps configurations and templates for setting up the application plane, clusters, control plane, and infrastructure necessary for the SaaS architecture.

- helpers: Includes CloudFormation templates that set up the required AWS resources, such as the VSCode server instance needed for setup.

- helm-charts: Houses Helm chart definitions for deploying tenant-specific resources within the Kubernetes cluster and shared services resources.

- tenant-microservices: Contains the source code and Dockerfiles for the sample microservices used in the workshop (consumer, payments, producer).

- terraform: Features Terraform modules and scripts for provisioning the AWS infrastructure and Kubernetes resources. Detailed setup instructions are provided within this folder’s README.md.

- workflow-scripts: Provides scripts to automate the workflow for tenant onboarding and application deployment within the GitOps framework.

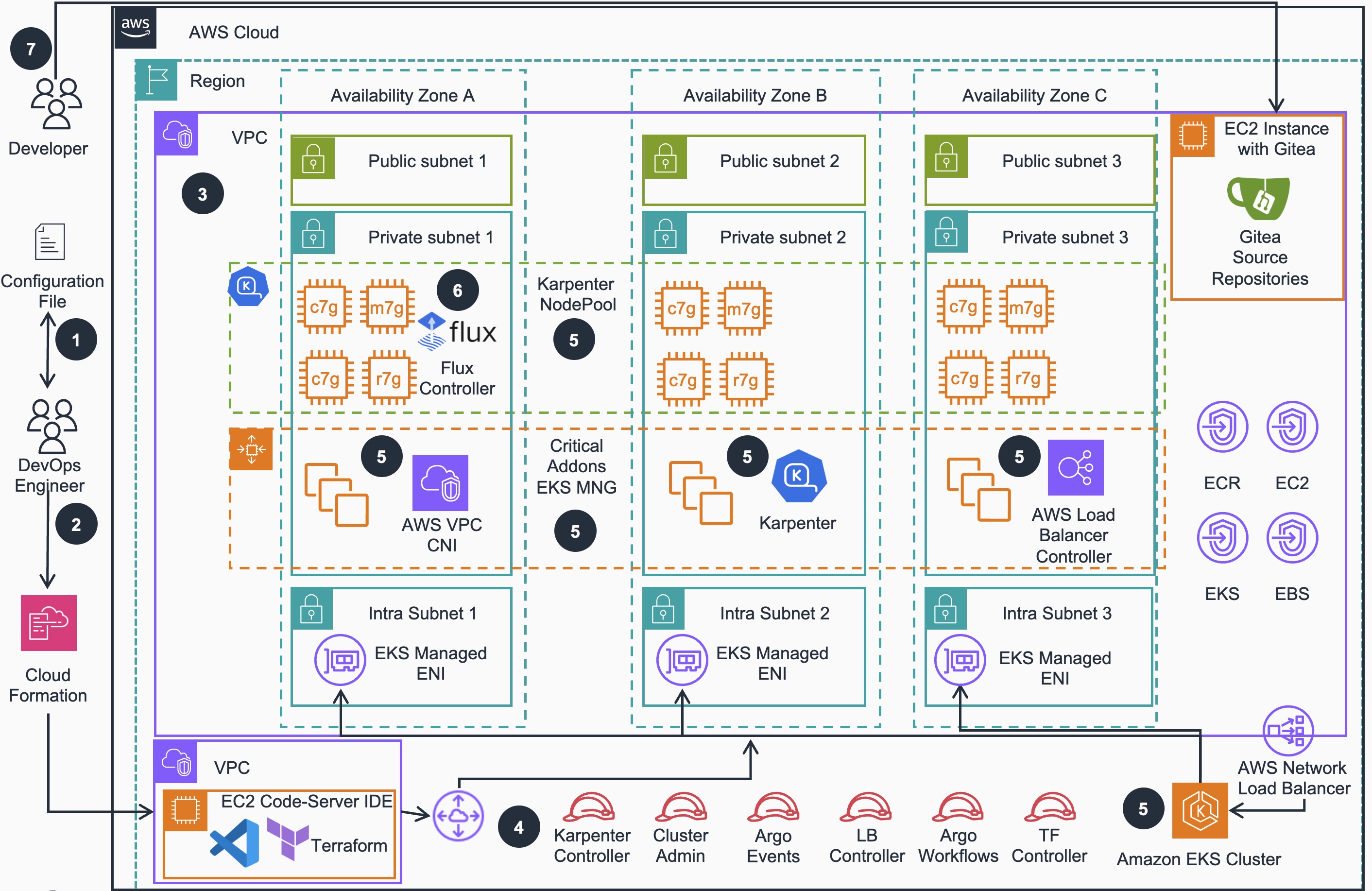

Architecture diagram

Figure 1: Reference Architecture of an Amazon Elastic Kubernetes Service (EKS) cluster

Architecture steps

DevOps engineer defines a per-environment Terraform variable file that controls all of the environment-specific configuration. All IaC configurations use this file throughout the deployment process to create the different EKS environments.

DevOps engineer applies the environment configuration using AWS CloudFormation, which deploys an Amazon EC2 instance with a VSCode IDE that is used to apply Terraform.

An Amazon Virtual Private Cloud (VPC) is provisioned and configured based on the specified configuration. Following reliability best practices, three Availability Zones (AZs) are configured, with corresponding VPC endpoints that provide access to resources deployed in the private VPC and in other VPCs connected by VPC peering.

User-facing AWS Identity and Access Management (IAM) roles (Cluster Admin, Karpenter Controller, Argo Workflow, Argo Events, LB Controller, TF Controller) are created for various access levels to EKS cluster resources, as recommended in Kubernetes security best practices.

An Amazon Elastic Kubernetes Service (EKS) cluster is provisioned with a managed node group that runs critical cluster add-ons (CoreDNS, AWS Load Balancer Controller, and Karpenter) on its compute nodes. Karpenter manages compute capacity for the other add-ons and for the business applications that users deploy, prioritizing Amazon EC2 Spot Instances for the best price-performance.

Other selected EKS add-ons are deployed based on the configurations defined in the per-environment Terraform variable file (see step 1 above).

Developers can access Gitea source code repositories running on Amazon EC2 to update microservice source code.

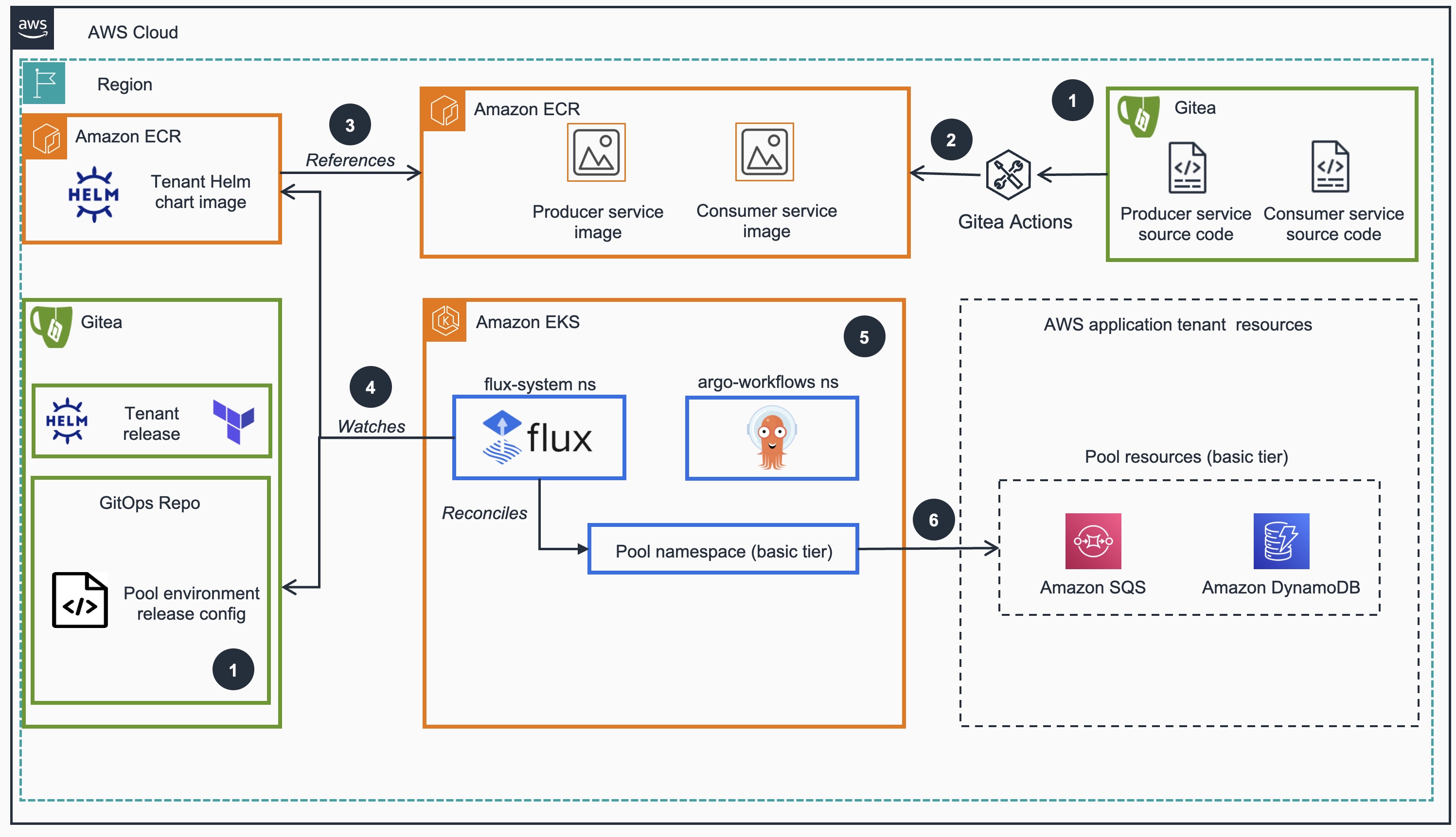

Below is the flow diagram of the tenant applications, illustrating how they interact with each other and with external AWS services such as Amazon SQS and Amazon DynamoDB.

Figure 2: Reference Architecture of GitOps driven workflow on Amazon EKS cluster using FluxV2 for provisioning tenant resources

Architecture steps

Gitea source code repositories hold the GitOps releases, the tenant definitions, and the microservice application code.

Gitea Actions builds the Producer and Consumer container images and pushes them to Amazon Elastic Container Registry (ECR).

Amazon ECR also stores the tenant template Helm chart, which references the images built for the services.

Flux watches environment definition on git and ECR to deploy changes to the EKS cluster, so that the cluster deployments match the expected state declared in Git source repo and the correct version of Helm chart is deployed in the cluster.

Argo Workflows is used for templating and automating variable replacement during onboarding, offboarding, and deployment processes. Argo Workflows automates these steps by committing the changes to the Git repository, which then triggers the rest of the GitOps pipeline.

Basic tier application tenants share AWS resources (Amazon SQS, Amazon DynamoDB). Basic tier tenants are served by the same microservice instances and infrastructure resources. This approach optimizes resource usage and reduces costs by sharing the infrastructure among multiple tenants.
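Flux can consume a Helm chart stored in Amazon ECR as an OCI artifact through a HelmRepository source. The following is a minimal sketch of such a source, not the manifest shipped with this guidance. The account ID, Region, and repository path in the URL are placeholders, and provider: aws assumes the Flux source controller has IAM permissions to pull from ECR. The name helm-tenant-chart matches the sourceRef used by the tenant HelmRelease template in Section 1.

```shell
# Sketch: write a Flux HelmRepository that points at an OCI registry in Amazon ECR.
# The account ID, Region and repository path are placeholders, not values from this guidance.
OUT_DIR="${OUT_DIR:-$(mktemp -d)}"
ECR_REGISTRY="123456789012.dkr.ecr.us-east-1.amazonaws.com"   # placeholder registry

cat << EOF > "$OUT_DIR/helm-tenant-chart-source.yaml"
apiVersion: source.toolkit.fluxcd.io/v1beta2
kind: HelmRepository
metadata:
  name: helm-tenant-chart
  namespace: flux-system
spec:
  type: oci                    # the chart is stored as an OCI artifact
  provider: aws                # authenticate to ECR with the controller's AWS identity
  interval: 1m0s
  url: oci://$ECR_REGISTRY/helm-charts   # placeholder repository path
EOF
```

With an OCI source like this, a HelmRelease that asks for chart helm-tenant-chart resolves it under the repository path in the URL, and the version constraint in the release (for example "0.0.x") selects the newest matching chart tag.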

AWS services in this Guidance

| AWS Service | Role | Description |
|---|---|---|
| Amazon Elastic Kubernetes Service (EKS) | Core service | Manages the Kubernetes control plane and worker nodes for container orchestration. |
| Amazon Elastic Compute Cloud (EC2) | Core service | Provides the compute instances for EKS worker nodes and runs containerized applications. |
| Amazon Virtual Private Cloud (VPC) | Core service | Creates an isolated network environment with public and private subnets across multiple Availability Zones. |
| Amazon Elastic Container Registry (ECR) | Supporting service | Stores and manages Docker container images for EKS deployments. |
| Elastic Load Balancing (ELB) | Supporting service | Distributes incoming traffic across multiple targets in the EKS cluster. |
| AWS Identity and Access Management (IAM) | Supporting service | Manages access to AWS services and resources securely, including EKS cluster access. |
| AWS Certificate Manager (ACM) | Security service | Manages SSL/TLS certificates for secure communication within the cluster. |
| Amazon CloudWatch | Monitoring service | Collects and tracks metrics, logs, and events from EKS and other AWS resources provisioned in the guidance. |
| AWS Systems Manager | Management service | Provides operational insights and takes action on AWS resources. |
| AWS Key Management Service (KMS) | Security service | Manages encryption keys for securing data in EKS and other AWS services. |
| AWS CodeBuild | CI/CD service | Compiles source code, runs tests, and produces software packages ready for deployment. |
| AWS CodePipeline | CI/CD service | Automates the build, test, and deployment phases of the release process. |
| Amazon Managed Grafana (AMG) | Observability service | Provides a fully managed service for metrics visualization and monitoring. |
| Amazon Managed Service for Prometheus (AMP) | Observability service | Offers managed Prometheus-compatible monitoring for container metrics. |

Plan your deployment

Cost

You are responsible for the cost of the AWS services deployed while running this guidance. As of September 2025, the cost for running this guidance with the default settings in the US East (N. Virginia) us-east-1 AWS Region is approximately $329.25/month.

We recommend creating a budget through AWS Budgets to help manage costs. Prices are subject to change. For full details, refer to the pricing webpage for each AWS service used in this Guidance.

Sample cost table

The following table provides a sample cost breakdown for deploying this guidance with the default parameters in the US East (Ohio) Region for one month. This estimate is based on the AWS Pricing Calculator output for the full deployment as per the guidance.

| AWS service | Dimensions | Cost [USD/month] |
|---|---|---|
| Amazon EKS | 1 cluster | 73.00 |
| Amazon VPC | Public IP addresses, NAT gateway | 70.20 |
| Amazon EC2 | 2 m6g.large instances | 57.81 |
| Amazon EC2 | 1 t3.large instance (VSCode server) | 64.74 |
| Amazon EC2 | 1 t2.micro instance (Gitea) | 10.47 |
| Amazon ECR | Image storage and data transfer | 25.00 |
| Amazon CloudWatch | Metrics | 3.00 |
| AWS KMS | Keys and requests | 7.00 |
| Application Load Balancer | 1 ALB | 18.03 |
| TOTAL | | 329.25 |
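As a quick check, the line items in the sample table add up to the stated monthly total. The sketch below sums one USD amount per line with awk. You can replace the values with your own AWS Pricing Calculator figures to re-total a modified configuration. monthly_total is a hypothetical helper name.

```shell
# Sum monthly line-item costs read from stdin (one USD amount per line).
monthly_total() {
  awk '{ sum += $1 } END { printf "%.2f\n", sum }'
}

# Line items from the sample cost table (USD/month).
printf '%s\n' 73.00 70.20 57.81 64.74 10.47 25.00 3.00 7.00 18.03 | monthly_total
```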

For a more accurate estimate based on your specific configuration and usage patterns, we recommend using the AWS Pricing Calculator.

Security

When you build systems on AWS infrastructure, security responsibilities are shared between you and AWS. This shared responsibility model reduces your operational burden because AWS operates, manages, and controls the components including the host operating system, the virtualization layer, and the physical security of the facilities in which the services operate. For more information about AWS security, visit AWS Cloud Security.

This guidance implements several security best practices and AWS services to enhance the security posture of your EKS Cluster. Here are the key security components and considerations:

Identity and Access Management (IAM)

- IAM Roles: The architecture uses predefined IAM roles (Cluster Admin, Admin, Edit, Read) to manage access to the EKS cluster resources. This follows the principle of least privilege, ensuring users and services have only the permissions necessary to perform their tasks.

- EKS Managed Node Groups: These use IAM roles with specific permissions required for nodes to join the cluster and for pods to access AWS services.

Network Security

- Amazon VPC: The EKS cluster is deployed within a custom VPC with public and private subnets across multiple Availability Zones, providing network isolation.

- Security groups: Although not explicitly shown in the diagram, security groups are typically used to control inbound and outbound traffic to EC2 instances and other resources within the VPC.

- NAT Gateways: Deployed in public subnets to allow outbound internet access for resources in private subnets while preventing inbound access from the internet.

Data Protection

- Amazon EBS Encryption: EBS volumes used by EC2 instances are typically encrypted to protect data at rest.

- AWS Key Management Service (KMS): Used for managing encryption keys for various services, including EBS volume encryption.

Kubernetes-specific Security

- Kubernetes RBAC: Role-Based Access Control is implemented within the EKS cluster to manage fine-grained access to Kubernetes resources.

- AWS Certificate Manager: Integrated to manage SSL/TLS certificates for secure communication within the cluster.

Monitoring and Logging

- Amazon CloudWatch: Used for monitoring and logging of AWS resources and applications running on the EKS cluster.

- Amazon Managed Grafana and Prometheus: Provide additional monitoring and observability capabilities, helping to detect and respond to security events.

Container Security

- Amazon ECR: Stores container images in a secure, encrypted repository. It includes vulnerability scanning to identify security issues in your container images.

Secrets Management

- AWS Secrets Manager: While not explicitly shown in the diagram, it’s commonly used to securely store and manage sensitive information such as database credentials, API keys, and other secrets used by applications running on EKS.

Additional Security Considerations

- Regularly update and patch EKS clusters, worker nodes, and container images.

- Implement network policies to control pod-to-pod communication within the cluster.

- Use Pod Security Standards, enforced by the built-in Pod Security Admission controller, to apply security best practices to pods (PodSecurityPolicy was removed in Kubernetes 1.25).

- Implement proper logging and auditing mechanisms for both AWS and Kubernetes resources.

- Regularly review and rotate IAM and Kubernetes RBAC permissions.
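To illustrate the network policy recommendation, the following sketch writes a Kubernetes NetworkPolicy. The policy admits traffic into a tenant namespace only from pods in the same namespace and from a load balancer subnet, so pods in other tenant namespaces are not allowed in. The tenant name and the subnet CIDR are hypothetical values, and this is not a manifest from the guidance repository. The policy is only enforced if a network policy engine is enabled on the cluster, such as the network policy support in the Amazon VPC CNI.

```shell
# Sketch only: TENANT_ID and LB_SUBNET_CIDR are hypothetical values; adjust them
# to your environment before applying the manifest.
TENANT_ID="${TENANT_ID:-tenant-t1d6c}"
LB_SUBNET_CIDR="${LB_SUBNET_CIDR:-10.0.0.0/24}"   # hypothetical public subnet used by the ALB
OUT_DIR="${OUT_DIR:-$(mktemp -d)}"

cat << EOF > "$OUT_DIR/$TENANT_ID-netpol.yaml"
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: restrict-tenant-ingress
  namespace: $TENANT_ID
spec:
  podSelector: {}              # select every pod in the tenant namespace
  policyTypes:
    - Ingress                  # egress (for example to SQS and DynamoDB) is not restricted
  ingress:
    - from:
        - podSelector: {}      # pods in the same namespace
        - ipBlock:
            cidr: $LB_SUBNET_CIDR   # load balancer traffic; add one entry per ALB subnet
EOF

# Apply with: kubectl apply -f "$OUT_DIR/$TENANT_ID-netpol.yaml"
```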

Supported AWS Regions

The core components of this guidance are available in all AWS Regions where Amazon EKS is supported.

The observability components of this guidance use Amazon Managed Service for Prometheus (AMP) and Amazon Managed Grafana (AMG). These services are available in the following regions:

| Region Name | Region Code |
|---|---|
| US East (N. Virginia) | us-east-1 |
| US East (Ohio) | us-east-2 |
| US West (Oregon) | us-west-2 |
| Asia Pacific (Mumbai) | ap-south-1 |
| Asia Pacific (Seoul) | ap-northeast-2 |
| Asia Pacific (Singapore) | ap-southeast-1 |
| Asia Pacific (Sydney) | ap-southeast-2 |
| Asia Pacific (Tokyo) | ap-northeast-1 |
| Europe (Frankfurt) | eu-central-1 |
| Europe (Ireland) | eu-west-1 |
| Europe (London) | eu-west-2 |
| Europe (Stockholm) | eu-north-1 |
| South America (São Paulo) | sa-east-1 |

The core components of this guidance can be deployed in any AWS Region where Amazon EKS is available. This includes all commercial AWS Regions except for the Greater China and the AWS GovCloud (US) Regions.

For the most current availability of AWS services by Region, refer to the AWS Services by Region List.

If you deploy this guidance into a region where AMP and/or AMG are not available, you can disable the OSS observability tooling during deployment. This allows you to use the core components of the guidance without built-in observability features.

Service Quotas

Service quotas, also referred to as limits, are the maximum number of service resources or operations for your AWS account.

Quotas for AWS services in this Guidance

Make sure you have sufficient quota for each of the services implemented in this solution. For more information, see AWS service quotas.

To view the service quotas for all AWS services in the documentation without switching pages, view the information in the Service endpoints and quotas page in the PDF instead.

For specific implementation quotas, consider the following key components and services used in this guidance:

- Amazon EKS: Ensure that your account has sufficient quotas for Amazon EKS clusters, node groups, and related resources.

- Amazon EC2: Verify your EC2 instance quotas, as EKS node groups rely on these.

- Amazon VPC: Check your VPC quotas, including subnets and Elastic IPs, to support the networking setup.

- Amazon EBS: Ensure your account has sufficient EBS volume quotas for persistent storage.

- IAM Roles: Verify that you have the necessary quota for IAM roles, as these are critical for securing your EKS clusters.

- AWS Systems Manager: Review the quota for Systems Manager resources, which are used for operational insights and management.

- AWS Secrets Manager: If you’re using Secrets Manager for storing sensitive information, ensure your quota is adequate.

Deploy the guidance

Prerequisites

AWS Account and Permissions: Ensure you have an active AWS account with appropriate permissions to create and manage AWS resources like Amazon EKS, EC2, IAM, and VPC.

All required tools (AWS CLI, Terraform, Git, kubectl, Helm, and Flux CLI) are pre-installed in the VSCode server instance that will be deployed as part of the setup process.

Deployment Process Overview

Before you launch the guidance, review the architecture, cost, security, and other considerations discussed in this guide.

Follow the step-by-step instructions in this section to configure and deploy the guidance into your account.

- Deploy the VSCode Server Instance:
  - Navigate to the AWS CloudFormation console in your AWS account.
  - Click “Create stack” and select “With new resources (standard)”.
  - Choose “Upload a template file” and upload the helpers/vs-code-ec2.yaml file from this repository.
  - Click “Next” and provide a stack name (e.g., “eks-saas-gitops-vscode”).
  - Configure any required parameters and click “Next”.
  - Select the checkbox “I acknowledge that AWS CloudFormation might create IAM resources with custom names.”
  - Click “Next”, review the configuration, and click “Submit” to create the stack. Then wait for the CloudFormation stack to complete deployment.

Time to deploy: Approximately 30 minutes
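If you prefer the AWS CLI to the console, the same stack can be created with aws cloudformation deploy. This is a sketch that assumes the template's parameters can keep their defaults (pass --parameter-overrides otherwise). CAPABILITY_NAMED_IAM corresponds to the console acknowledgment for IAM resources with custom names. The function names are hypothetical helpers, not part of the repository.

```shell
# Hypothetical helper functions; they need the AWS CLI and credentials when called.
STACK_NAME="${STACK_NAME:-eks-saas-gitops-vscode}"

# Create or update the VSCode server stack from the repository template.
# Run from the repository root; the command waits until the stack operation finishes.
deploy_vscode_stack() {
  aws cloudformation deploy \
    --template-file helpers/vs-code-ec2.yaml \
    --stack-name "$STACK_NAME" \
    --capabilities CAPABILITY_NAMED_IAM
}

# Print the stack outputs (for example VSCodeURL and VsCodePassword) as a table.
show_vscode_outputs() {
  aws cloudformation describe-stacks \
    --stack-name "$STACK_NAME" \
    --query 'Stacks[0].Outputs[].[OutputKey,OutputValue]' \
    --output table
}
```

Usage: run deploy_vscode_stack, then show_vscode_outputs once the deployment has finished.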

- Access the VSCode Server Instance:
  - The Terraform infrastructure is deployed automatically as part of the VSCode server instance setup, and the instance has all required tools pre-installed (AWS CLI, Terraform, Git, kubectl, Helm, and Flux CLI).
  - Once the CloudFormation stack deployment is complete, go to the “Outputs” tab.
  - Find the VsCodePassword output, click the link, and copy the password under “Value”.
  - Find the VSCodeURL output value and click the link. This opens the VSCode web interface in your browser.
  - Enter the password copied from VsCodePassword.
  - The repository connected to Flux and your Amazon EKS cluster is automatically cloned into the VSCode workspace at /home/ec2-user/environment/gitops-gitea-repo. The sample microservices and Helm charts are available in their respective directories.

Before continuing, it helps to understand the SaaS tier strategy followed in this guidance.

SaaS applications use various deployment models: silo, hybrid, and pool. Each model offers a different level of customization and isolation, and has different requirements for provisioning resources during tenant onboarding. These deployment models map to the level of service and customization that customers receive in different SaaS tiers:

The Basic Tier is designed for tenants that share resources, which typically results in better cost efficiency. However, before provisioning Basic Tier tenants, a pool environment must be created to accommodate them.

The Premium Tier is designed for tenants that require dedicated resources. The template (premium_tenant_template.yaml) is used to create Helm releases for tenants under the tenants/premium folder.

The Advanced Tier accommodates the requirements of a new customer group. It combines dedicated and shared resources: the producer is shared, while the consumer is dedicated. The idea is to take advantage of each model (Silo/Dedicated and Pool/Shared) based on the usage pattern of those customers.

Figure 3: Reference Architecture - different SaaS Tiers configured for guidance
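The tiers differ mainly in which microservices get a dedicated (silo) deployment. The sketch below encodes that mapping as a shell function, using the enable_producer and enable_consumer flag names from the onboarding workflow. The Advanced values come from the tier template in Section 1. The Basic (fully pooled) and Premium (fully dedicated) values are inferred from the tier descriptions above. tier_flags is a hypothetical helper.

```shell
# Map a SaaS tier to producer/consumer deployment flags.
# "true" means a dedicated (silo) deployment; "false" means the tenant uses the pool.
tier_flags() {
  case "$1" in
    basic)    echo "enable_producer=false enable_consumer=false" ;;
    advanced) echo "enable_producer=false enable_consumer=true" ;;
    premium)  echo "enable_producer=true enable_consumer=true" ;;
    *)        echo "unknown tier: $1" >&2; return 1 ;;
  esac
}

tier_flags advanced   # prints: enable_producer=false enable_consumer=true
```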

This guidance includes sample applications that serve the tenants:

Producer: Responsible for receiving API calls, producing messages, and sending them to an SQS queue.

Consumer: Responsible for retrieving messages from the SQS queue and persisting them in a specific DynamoDB table.

Other applications and infrastructure involved:

- Flux controller is the central component of the solution. Flux watches the environment definition in Git and ECR and deploys changes to the cluster, so that the cluster deployments match the expected state declared in Git and the correct version of the Helm chart is deployed in the cluster.

- Tofu Controller plays a critical role in this architecture by integrating Terraform modules through a Custom Resource Definition called the Terraform CRD.

- Helm charts package, deploy, and manage Kubernetes applications, helping to define, install, and manage Kubernetes resources efficiently.

Below is the architecture diagram showing how the OpenTofu Controller works with FluxV2 to provision AWS managed resources.

Figure 4: OpenTofu Controller works with FluxV2 on Amazon EKS cluster to provision AWS managed resources through Terraform

Architecture steps

Flux continuously watches Git repositories for changes. In this case, it monitors the repository containing the Terraform CRD and the Terraform module.

When a Terraform CRD is created in the cluster (defined in the Git repository), Flux detects this new resource and starts the reconciliation process.

The TF Controller is responsible for monitoring the Terraform CRD within the flux-system namespace. When it detects a new or updated Terraform CRD, it initiates the necessary actions.

The TF Controller launches a tf-runner pod. This pod pulls the specified Terraform module from the Git repository and executes it, managing the infrastructure as defined in the CRD.

The tf-runner pod provisions the required resources, such as Amazon SQS queues and Amazon DynamoDB tables, based on the Terraform module's definitions.

The state and plan of the Terraform execution are stored as Kubernetes secrets (e.g., tfstate and tfplan). This ensures that the state is preserved and can be accessed by subsequent Terraform operations.
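For reference, a Terraform custom resource reconciled by the Tofu Controller looks roughly like the sketch below, written in the same heredoc style used elsewhere in this guide. It uses the controller's infra.contrib.fluxcd.io/v1alpha2 API. The module path, variable name, and output secret name are hypothetical and are not taken from this repository.

```shell
# Sketch only: the module path, variable name and output secret name are hypothetical.
TENANT_ID="${TENANT_ID:-tenant-t1d6c}"
OUT_DIR="${OUT_DIR:-$(mktemp -d)}"

cat << EOF > "$OUT_DIR/$TENANT_ID-infra.yaml"
apiVersion: infra.contrib.fluxcd.io/v1alpha2
kind: Terraform
metadata:
  name: $TENANT_ID-infra
  namespace: flux-system
spec:
  interval: 1m
  approvePlan: auto                      # apply plans without manual approval
  path: ./terraform/modules/tenant-apps  # hypothetical module path in the Git source
  sourceRef:
    kind: GitRepository
    name: flux-system
  vars:
    - name: tenant_id                    # hypothetical module input variable
      value: $TENANT_ID
  writeOutputsToSecret:
    name: $TENANT_ID-infra-outputs       # module outputs are written to this secret
EOF
```

Once such a resource has been reconciled, you can look for the state and plan secrets with kubectl -n flux-system get secrets | grep -E 'tfstate|tfplan'.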

SECTION 1: Defining SaaS Tier Strategy

Our tiering strategy is implemented with Helm release templates, which are structured to reflect the distinct service levels of each tenant. In this section, you add a new tier called Advanced. It mixes dedicated and shared resources: the producer is shared with other tenants, while each tenant gets a dedicated consumer.

Figure 5: Advanced Tenant Architecture

Deployment Steps

All steps below are assumed to be run on the VSCode instance provisioned above. This instance is typically named eks-saas-gitops-Instance. You can connect to it from the AWS Console (EC2 > Instances) using the AWS Systems Manager Session Manager connection option, or through its browser URL. In both cases, run the commands below as ec2-user, which you can ensure with the following command:

```
$ sudo su - ec2-user
Last login: Thu Sep 25 04:03:02 UTC 2025 on pts/2
[ec2-user@ip-10-0-1-186 ~]$ pwd
/home/ec2-user
```

Step 1.1

Create the new Helm release template under the tier-templates folder.

```shell
export REPO_PATH="/home/ec2-user/environment"

cat << EOF > "$REPO_PATH/gitops-gitea-repo/application-plane/production/tier-templates/advanced_tenant_template.yaml"
apiVersion: v1
kind: Namespace
metadata:
  name: {TENANT_ID}
---
apiVersion: helm.toolkit.fluxcd.io/v2beta1
kind: HelmRelease
metadata:
  name: {TENANT_ID}-advanced
  namespace: flux-system
spec:
  releaseName: {TENANT_ID}-advanced
  targetNamespace: {TENANT_ID} # Deploying into the tenant-specific namespace
  interval: 1m0s
  chart:
    spec:
      chart: helm-tenant-chart
      version: "{RELEASE_VERSION}.x"
      sourceRef:
        kind: HelmRepository
        name: helm-tenant-chart
  values:
    tenantId: {TENANT_ID}
    apps:
      producer:
        envId: pool-1
        enabled: false # Pool deployment -- advanced tier shares resources with other tenants
        ingress:
          enabled: true
      consumer:
        enabled: true # Silo deployment -- advanced tier has a dedicated deployment for each tenant
        ingress:
          enabled: true
EOF
```

Step 1.2

Next, create a folder for Advanced Tier tenants and render the template into a Helm release for a new Advanced Tier tenant. Later steps explore the resources created by Flux and the Tofu Controller.

```shell
# Folder that holds the Advanced Tier tenant releases
mkdir -p $REPO_PATH/gitops-gitea-repo/application-plane/production/tenants/advanced

export TENANT_ID=tenant-t1d6c
export RELEASE_VERSION=0.0

# Copy the tier template and replace its placeholders with the tenant values
cd $REPO_PATH/gitops-gitea-repo/application-plane/production/
cp tier-templates/advanced_tenant_template.yaml tenants/advanced/$TENANT_ID.yaml
sed -i "s|{TENANT_ID}|$TENANT_ID|g" "tenants/advanced/$TENANT_ID.yaml"
sed -i "s|{RELEASE_VERSION}|$RELEASE_VERSION|g" "tenants/advanced/$TENANT_ID.yaml"
```
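Steps 1.1 and 1.2 together amount to rendering a tier template for one tenant. The sketch below wraps that in a reusable function that also fails if any {PLACEHOLDER} token is left unreplaced. render_tenant is a hypothetical helper, and it assumes the tier-templates and tenants folder layout used in this section.

```shell
# Render tier-templates/<tier>_tenant_template.yaml into tenants/<tier>/<tenant_id>.yaml.
# Usage (from application-plane/production): render_tenant <tier> <tenant_id> <release_version>
render_tenant() {
  local tier="$1" tenant_id="$2" release_version="$3"
  local template="tier-templates/${tier}_tenant_template.yaml"
  local target="tenants/${tier}/${tenant_id}.yaml"

  if [ ! -f "$template" ]; then
    echo "missing template: $template" >&2
    return 1
  fi
  mkdir -p "tenants/${tier}"
  sed -e "s|{TENANT_ID}|${tenant_id}|g" \
      -e "s|{RELEASE_VERSION}|${release_version}|g" \
      "$template" > "$target"

  # Fail if any {UPPER_CASE} placeholder was left unreplaced in the rendered file.
  if grep -qE '\{[A-Z_]+\}' "$target"; then
    echo "unreplaced placeholder in $target" >&2
    return 1
  fi
}
```

Usage: render_tenant advanced tenant-t1d6c 0.0 produces the same file as the commands above.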

Step 1.3

To deploy the HelmRelease using GitOps, create a kustomization.yaml file in the same folder that references the new tenant's Helm release.

```shell
cat << EOF > tenants/advanced/kustomization.yaml
apiVersion: kustomize.config.k8s.io/v1beta1
kind: Kustomization
resources:
  - $TENANT_ID.yaml
EOF
```
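The heredoc for kustomization.yaml overwrites the file, so it lists only the tenant you just added. When a tier folder holds several tenants, you can instead regenerate the file from every tenant manifest in the folder. This is a sketch, and regenerate_kustomization is a hypothetical helper.

```shell
# Rebuild <dir>/kustomization.yaml so that it lists every tenant manifest in <dir>.
regenerate_kustomization() {
  local dir="$1" f name
  {
    echo "apiVersion: kustomize.config.k8s.io/v1beta1"
    echo "kind: Kustomization"
    echo "resources:"
    for f in "$dir"/*.yaml; do
      [ -e "$f" ] || continue                          # the folder has no manifests yet
      name="$(basename "$f")"
      [ "$name" = "kustomization.yaml" ] && continue   # never list the file itself
      echo "  - $name"
    done
  } > "$dir/kustomization.yaml.tmp"
  mv "$dir/kustomization.yaml.tmp" "$dir/kustomization.yaml"
}
```

Usage: regenerate_kustomization tenants/advanced, run from application-plane/production after adding or removing a tenant file.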

Step 1.4

That’s all! Now commit the changes and push them to Git. To speed up the process, force a Flux reconciliation:

```shell
git pull origin main
git add .
git commit -am "Adding tenant-t1d6c with Advanced Tier"
git push origin main
flux reconcile source git flux-system
```

Step 1.5

Finally, check the Kubernetes namespace of the new tenant tenant-t1d6c for the consumer deployment. Because the consumer is dedicated, you should also see an SQS queue and a DynamoDB table for the new tenant:

```shell
kubectl get namespaces
kubectl get deployment -n tenant-t1d6c
aws dynamodb list-tables | grep tenant-t1d6c
aws sqs list-queues | grep tenant-t1d6c
```

If you don’t see the DynamoDB table and the SQS queue (or their entries in the corresponding sections of the AWS Console), the OpenTofu Controller is likely still creating those resources. Wait a few seconds and try again.
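Instead of re-running the checks by hand, you can poll until they succeed. The sketch below is a generic retry helper. retry_until is a hypothetical function, and the commented usage line assumes the same AWS CLI query used in the previous step.

```shell
# Run a command until it succeeds, up to <attempts> times, sleeping <delay> seconds between tries.
# Usage: retry_until <attempts> <delay> <command> [args...]
retry_until() {
  local attempts="$1" delay="$2" i=1
  shift 2
  while ! "$@"; do
    if [ "$i" -ge "$attempts" ]; then
      echo "gave up after $attempts attempts: $*" >&2
      return 1
    fi
    i=$((i + 1))
    sleep "$delay"
  done
}

# Example (requires AWS credentials): wait up to about 5 minutes for the tenant's table.
# retry_until 30 10 sh -c 'aws dynamodb list-tables | grep -q tenant-t1d6c'
```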

Then make a request to the application to confirm that it is running correctly:

```shell
APP_LB=http://$(kubectl get ingress -n tenant-t1d6c -o json | jq -r '.items[0].status.loadBalancer.ingress[0].hostname')
curl -s -H "tenantID: tenant-t1d6c" "$APP_LB/producer" | jq
curl -s -H "tenantID: tenant-t1d6c" "$APP_LB/consumer" | jq
```

Observe that the producer microservice runs in the shared environment pool-1, while the consumer has its dedicated environment tenant-t1d6c.

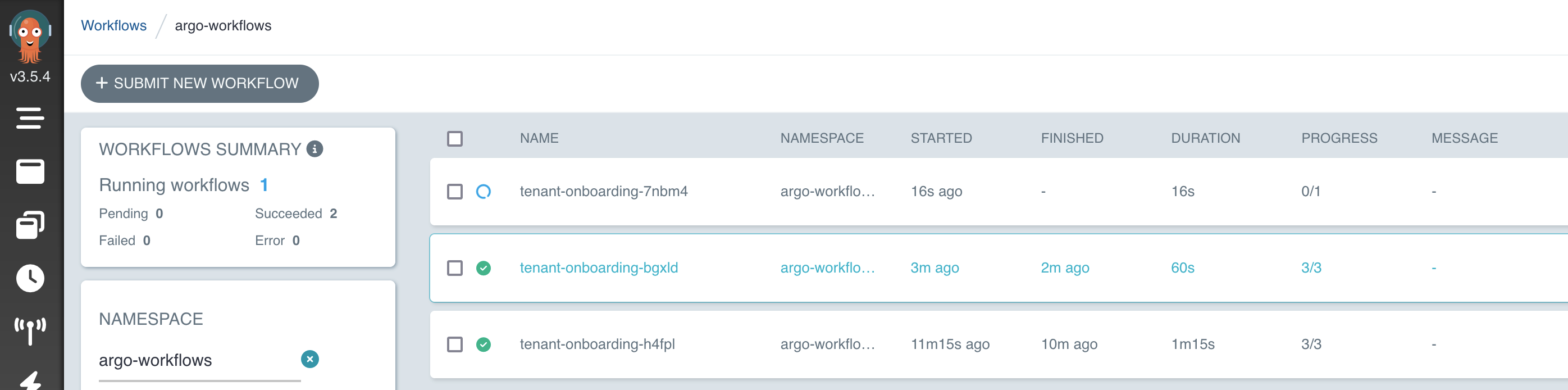

SECTION 2: Automating Tenant Onboarding

Argo Workflows is used in this setup for templating and automating variable replacement during tenant onboarding, offboarding, and deployment processes. Argo Workflows automates these steps by committing the changes to the Git repository, which then triggers the rest of the GitOps pipeline.

Step 2.1

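Let’s automate the onboarding process for an Advanced tier tenant. Send an onboarding request for tenant-3 to the onboarding SQS queue, then list the workflows: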

```shell
aws sqs send-message \
  --queue-url $ARGO_WORKFLOWS_ONBOARDING_QUEUE_SQS_URL \
  --message-body '{
    "tenant_id": "tenant-3",
    "tenant_tier": "advanced",
    "release_version": "0.0"
  }'

kubectl -n argo-workflows get workflow
```
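Hand-writing the JSON body becomes error-prone when you onboard many tenants. The sketch below builds the message body with printf after checking the tier name. onboarding_message is a hypothetical helper, and the accepted tier names (basic, advanced, premium) are assumed from the tiers described in this guide. It does not escape JSON, so it assumes tenant IDs and versions contain no quotes or backslashes.

```shell
# Build the onboarding message body used in the previous step.
# Usage: onboarding_message <tenant_id> <tier> <release_version>
onboarding_message() {
  case "$2" in
    basic|advanced|premium) ;;
    *) echo "unsupported tier: $2" >&2; return 1 ;;
  esac
  printf '{"tenant_id": "%s", "tenant_tier": "%s", "release_version": "%s"}\n' "$1" "$2" "$3"
}

# Example: send the same request as in the previous step.
# aws sqs send-message --queue-url "$ARGO_WORKFLOWS_ONBOARDING_QUEUE_SQS_URL" \
#   --message-body "$(onboarding_message tenant-3 advanced 0.0)"
```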

Step 2.2

Open the Argo Workflows Web UI to see the workflow created for the new tenant onboarding:

```shell
kubectl -n argo-workflows get workflow
ARGO_WORKFLOW_URL=$(kubectl -n argo-workflows get service/argo-workflows-server -o json | jq -r '.status.loadBalancer.ingress[0].hostname')
echo http://$ARGO_WORKFLOW_URL:2746/workflows
```

Figure 6: Argo Workflows User Interface - onboarding workflow

Step 2.3

Verify the GitOps changes in Gitea. Once the workflow completes execution, check the files committed to the Gitea repository for tenant-3:

```shell
# Get Gitea IPs from the configuration
export GITEA_PRIVATE_IP=$(kubectl get configmap saas-infra-outputs -n flux-system -o jsonpath='{.data.gitea_url}')
export GITEA_PUBLIC_IP=$(kubectl get configmap saas-infra-outputs -n flux-system -o jsonpath='{.data.gitea_public_url}')
export GITEA_PORT="3000"

# Get Gitea admin password from Systems Manager Parameter Store
export GITEA_ADMIN_PASSWORD=$(aws ssm get-parameter --name "/eks-saas-gitops/gitea-admin-password" --with-decryption --query 'Parameter.Value' --output text)

# Display access information for web browser login
echo "=== Gitea Web Interface Access ==="
echo "Public URL (for browser access): $GITEA_PUBLIC_IP"
echo "Username: admin"
echo "Password: $GITEA_ADMIN_PASSWORD"
echo "=================================="
echo ""
echo "Use the PUBLIC URL above to access Gitea from your web browser."
```

Open the Public URL in your browser to view the Gitea web interface and log in using the credentials displayed above.

Figure 7: Added Tenant for different tiers in Git repository

Notice that the workflow provisions the new tenant in the Advanced tier. Here, enable_producer is set to false and enable_consumer is set to true. As a result, tenant-3 mixes pooled and siloed resources: it shares the pooled producer and gets a dedicated consumer.
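The following bash sketch makes the tier flags concrete. It maps enable_producer and enable_consumer to the namespace that serves each microservice for a tenant. It is an illustration only and assumes a single shared pool namespace named pool-1, as in this sample. The placement function is hypothetical; the real behavior comes from the tenant Helm chart and the values committed to Git.

```shell
# Hypothetical illustration (not part of the guidance): show which namespace
# serves each microservice for a tenant, given its tier flags.
# Assumes a single shared pool namespace called pool-1, as in this sample.
placement() {
  local tenant=$1 enable_producer=$2 enable_consumer=$3 svc flag
  for svc in producer consumer; do
    if [[ $svc == producer ]]; then
      flag=$enable_producer
    else
      flag=$enable_consumer
    fi
    if [[ $flag == true ]]; then
      echo "$svc: $tenant"   # siloed: deployed in the tenant's own namespace
    else
      echo "$svc: pool-1"    # pooled: served by the shared deployment
    fi
  done
}

# Example (tenant-3, Advanced tier):
# placement tenant-3 false true
```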

Step 2.4

Check the deployed resources. To speed up the process, you can force Flux reconciliation:

cd $REPO_PATH/gitops-gitea-repo

git pull origin main

flux reconcile source git flux-system

flux get helmreleases

For tenant-3, you should see only the consumer microservice, because its producer runs in the shared pool.

flux get sources chart

# Advanced Tenant

kubectl -n tenant-3 get deployment

Step 2.5

Let’s get the application load balancer endpoint. In this sample, all tenants share the same Application Load Balancer (ALB). The tenantID header routes each request to the right tenant environment.

# Export Application Endpoint

export APP_LB=http://$(kubectl get ingress -n tenant-1 -o json | jq -r .items[0].status.loadBalancer.ingress[0].hostname)

curl -s -H "tenantID: tenant-3" $APP_LB/producer | jq

curl -s -H "tenantID: tenant-3" $APP_LB/consumer | jq

Notice that tenant-3 (Advanced) uses the producer microservice in the pool-1 namespace and the consumer microservice in the tenant-3 namespace.
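Every tenant goes through the same load balancer, so on the client side the only per-tenant input is the tenantID header. The small bash wrapper below makes that explicit. It is a hypothetical helper and is not part of the guidance.

```shell
# Hypothetical helper (not part of the guidance): call one microservice through
# the shared load balancer on behalf of a tenant. Only the tenantID header
# changes between tenants; the ALB uses it to pick the tenant's backend.
call_service() {
  local app_lb=$1 tenant_id=$2 service=$3
  curl -s -H "tenantID: ${tenant_id}" "${app_lb}/${service}"
}

# Example: query the producer for two tenants in turn.
# for t in tenant-1 tenant-3; do call_service "$APP_LB" "$t" producer | jq; done
```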

Step 2.6

To validate the infrastructure, we’ll send a POST request to the producer and check if the consumer persists the item in the DynamoDB database.

curl --location --request POST "$APP_LB/producer" \
  --header 'tenantID: tenant-3' \
  --header 'tier: advanced'

TABLE_NAME=$(aws dynamodb list-tables --region $AWS_REGION --query "TableNames[?contains(@, 'tenant-3')]" --output text)

aws dynamodb scan --table-name $TABLE_NAME --region $AWS_REGION
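The scan above prints every item, which gets noisy as the table grows. The hypothetical table_has_items function below asks DynamoDB for an item count instead, using --select COUNT, and succeeds only if the count is greater than zero. It is a bash sketch and assumes only that the POST above should leave at least one item in the tenant's table.

```shell
# Hypothetical check (not part of the guidance): succeed only if the given
# DynamoDB table holds at least one item. --select COUNT returns the item
# count instead of the items themselves.
table_has_items() {
  local table=$1 count
  count=$(aws dynamodb scan --table-name "$table" --region "$AWS_REGION" \
    --select COUNT --query Count --output text) || return 1
  [[ $count =~ ^[0-9]+$ ]] && ((count > 0))
}

# Example:
# table_has_items "$TABLE_NAME" && echo "consumer persisted the message"
```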

By following these steps, you can test the microservices for each tenant and validate the infrastructure setup by checking the persistence of data in DynamoDB. This ensures that the deployments and integrations are functioning correctly across different tenant tiers.

SECTION 3: Automating Tenant Offboarding

Step 3.1

We will remove the tenant tenant-t1d6c that we provisioned earlier in Section 1, SaaS Tier Strategy, and make sure all associated resources are cleaned up. First, send a message to the Amazon SQS offboarding queue with the required metadata, the tenant_id and tenant_tier parameters:

export ARGO_WORKFLOWS_OFFBOARDING_QUEUE_SQS_URL=$(kubectl get configmap saas-infra-outputs -n flux-system -o jsonpath='{.data.argoworkflows_offboarding_queue_url}')

aws sqs send-message \
  --queue-url $ARGO_WORKFLOWS_OFFBOARDING_QUEUE_SQS_URL \
  --message-body '{
    "tenant_id": "tenant-t1d6c",
    "tenant_tier": "advanced"
  }'

Step 3.2

To check the workflow created to offboard the tenant, run the following commands:

kubectl -n argo-workflows get workflow

ARGO_WORKFLOW_URL=$(kubectl -n argo-workflows get service/argo-workflows-server -o json | jq -r '.status.loadBalancer.ingress[0].hostname')

echo http://$ARGO_WORKFLOW_URL:2746/workflows

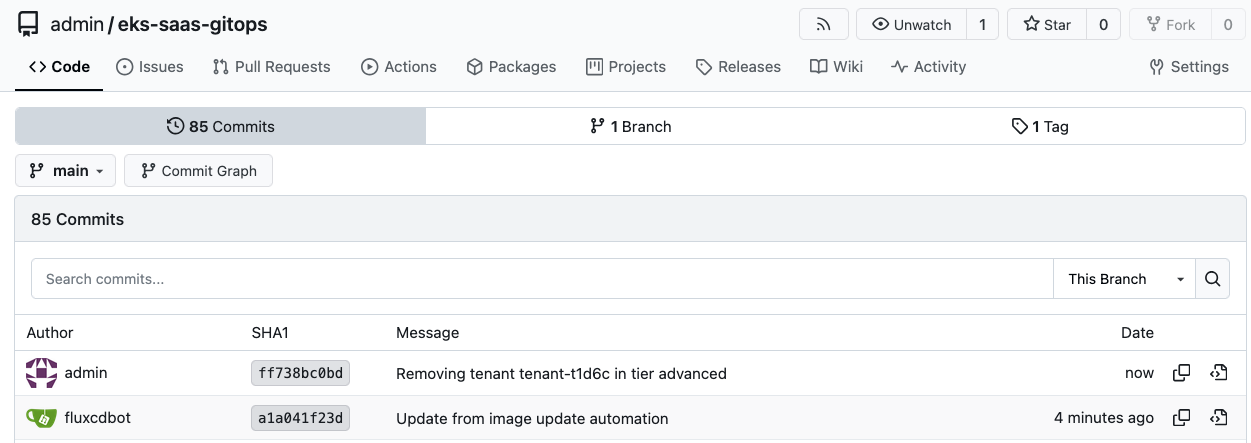

Figure 8: Offboarding Argo Workflow

To verify the GitOps changes, look in Gitea for commits that remove the tenant resources:

Figure 9: Offboarding Changes in Gitea

To speed up offboarding, force Flux reconciliation. You can then verify that the SQS queue and DynamoDB table associated with the tenant have been cleaned up:

flux reconcile source git flux-system

aws dynamodb list-tables | grep tenant-t1d6c

aws sqs list-queues | grep tenant-t1d6c

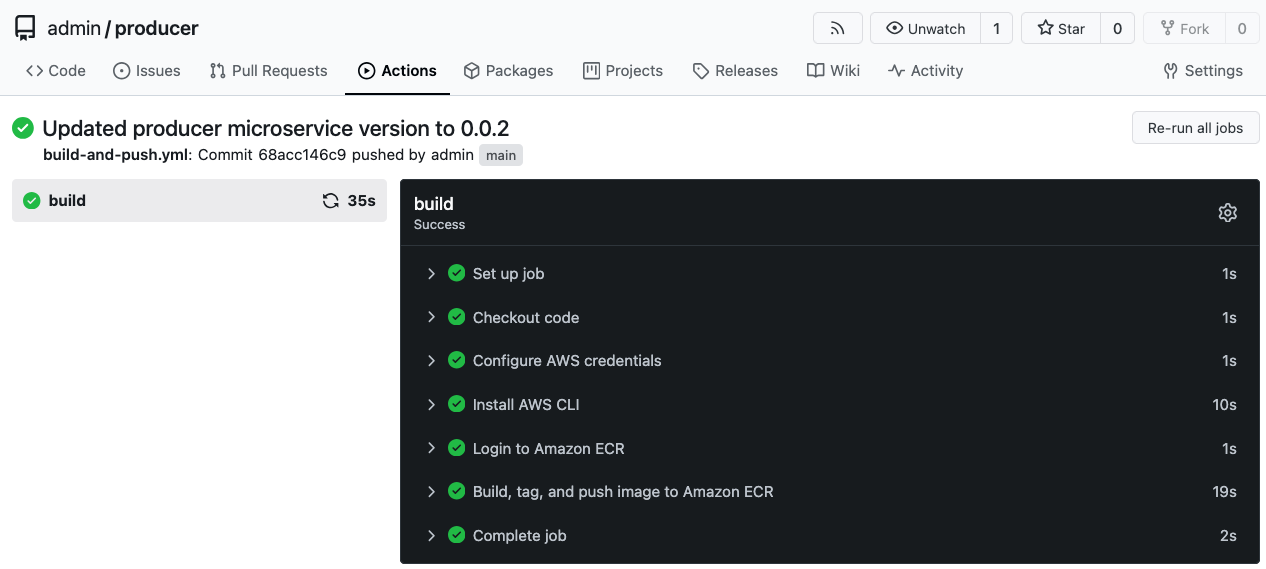
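The hypothetical bash function below turns the two grep checks into a single pass/fail result, which is useful in a cleanup script, for example. It is not part of the guidance. It lists table names and queue URLs and fails if either still mentions the tenant ID. It matches substrings, so an ID such as tenant-3 would also match tenant-30; adjust the pattern if your IDs can collide.

```shell
# Hypothetical check (not part of the guidance): succeed only when no DynamoDB
# table name and no SQS queue URL contains the tenant ID (substring match).
tenant_resources_gone() {
  local tenant=$1 status=0
  # grep without -q reads all input, avoiding SIGPIPE surprises under pipefail.
  if aws dynamodb list-tables --query 'TableNames' --output text \
      | tr '\t' '\n' | grep -F -- "$tenant" >/dev/null; then
    echo "DynamoDB table for $tenant still exists" >&2
    status=1
  fi
  if aws sqs list-queues --query 'QueueUrls' --output text \
      | tr '\t' '\n' | grep -F -- "$tenant" >/dev/null; then
    echo "SQS queue for $tenant still exists" >&2
    status=1
  fi
  return "$status"
}

# Example:
# tenant_resources_gone tenant-t1d6c && echo "offboarding cleanup complete"
```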

SECTION 4: Automating Microservice Image Deployments with Flux

Step 4.1

Flux controllers interact with your Amazon Elastic Container Registry (Amazon ECR) repositories and automate the update process. Here, you will update the producer microservice code and push the changes to trigger an automated deployment through Flux.

Open the producer microservice code in your IDE and edit the file producer/producer.py. Change the ms_version variable from ms_version = "0.0.1" to ms_version = "0.0.2". Then commit your changes to the producer microservice repository:

cd /home/ec2-user/environment/microservice-repos/producer

git status

git add .

git commit -m "Updated producer microservice version to 0.0.2"

git push origin main
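If you prefer to script the version change instead of editing the file in your IDE, the hypothetical bash helper below rewrites the assignment. It assumes that ms_version is assigned at the start of a line in producer.py, as in the step above. It writes through a temporary file because sed -i syntax differs between GNU and BSD sed.

```shell
# Hypothetical helper (not part of the guidance): replace the value of a
# top-level ms_version assignment, e.g. ms_version = "0.0.1" -> "0.0.2".
set_ms_version() {
  local file=$1 new_version=$2 tmp
  if ! grep -q '^ms_version = ' "$file"; then
    echo "no top-level ms_version assignment in $file" >&2
    return 1
  fi
  tmp=$(mktemp) || return 1
  # Write to a temp file, then copy back so the original keeps its permissions.
  sed "s/^ms_version = .*/ms_version = \"${new_version}\"/" "$file" > "$tmp" &&
    cat "$tmp" > "$file"
  local rc=$?
  rm -f "$tmp"
  return "$rc"
}

# Example (run before git add, pointing at producer.py in your clone):
# set_ms_version producer.py 0.0.2
```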

Step 4.2

Verify That the Pipeline Is Running the New Changes

The pipeline may take a few seconds to start after pushing the changes.

echo "Gitea Web Interface: $GITEA_PUBLIC_IP"

echo "Navigate to the 'producer' repository and click on the 'Actions' tab to view the pipeline execution."

Figure 10: Gitea Pipeline Changes to producer microservices

When the pipeline finishes, it pushes the new container image to the producer repository in Amazon ECR, where Flux detects it. To list the images in the repository:

aws ecr list-images --repository-name producer

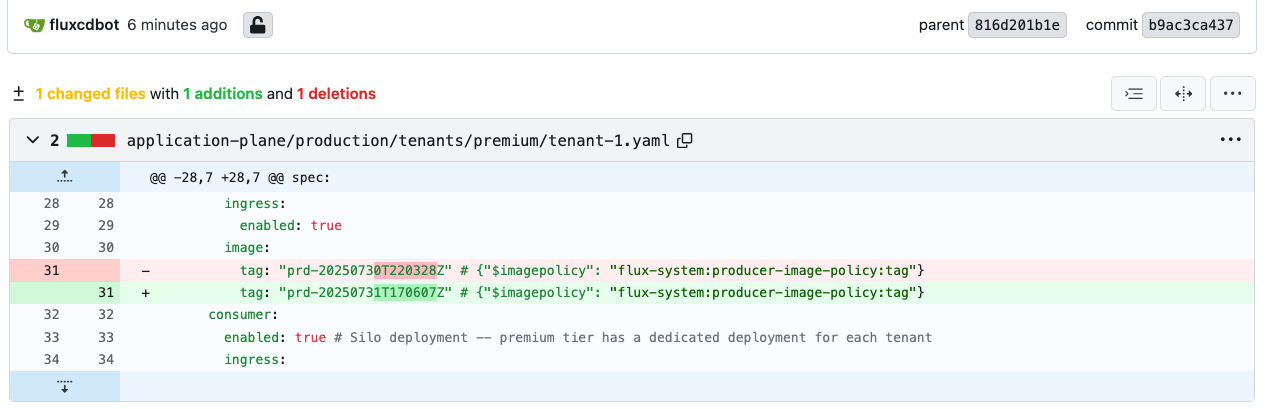

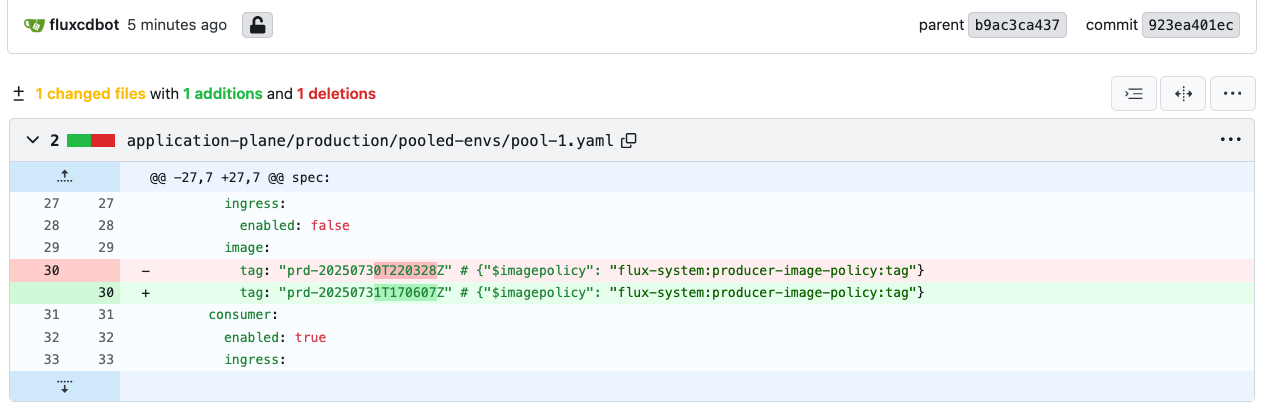

Step 4.3

As soon as Flux detects a new container image, it commits an update to the HelmRelease files in Git that carry the marker comment {"$imagepolicy": "flux-system:producer-image-policy:tag"}. You can check this directly in the Gitea web interface: look at the recent commits for changes that set the new image tag. Open the new commits made by fluxcdbot to both the tenant-1 and pool-1 files:

Figure 11: Gitea Tenant Updates

Figure 12: Gitea Pool Environment Change

Step 4.4

Get the latest image tag from ECR and the image used by the deployment, then compare them:

NAMESPACE="tenant-1"

DEPLOYMENT_NAME="tenant-1-producer"

REPO_NAME="producer"

# .tags[]? skips images that have no tags instead of failing

LATEST_IMAGE_TAG=$(aws ecr describe-images --repository-name $REPO_NAME --region $AWS_REGION --query 'imageDetails[].{pushed:imagePushedAt,tags:imageTags}' --output json | jq -r '.[] | .tags[]? | select(startswith("prd-"))' | sort | tail -1)

echo "Latest ECR Image Tag: $LATEST_IMAGE_TAG"

DEPLOYED_IMAGE=$(kubectl get deployment/$DEPLOYMENT_NAME -n $NAMESPACE -o jsonpath='{.spec.template.spec.containers[0].image}')

echo "Deployed Image: $DEPLOYED_IMAGE"

if [[ "$DEPLOYED_IMAGE" == *"$LATEST_IMAGE_TAG"* ]]; then

echo "Success: The deployed image ($DEPLOYED_IMAGE) matches the latest ECR image ($LATEST_IMAGE_TAG)."

else

echo "Failure: The deployed image ($DEPLOYED_IMAGE) does not match the latest ECR image ($LATEST_IMAGE_TAG)."

fi

You should see a message similar to this: “Success: The deployed image matches the latest ECR image.”

If the command above prints Failure, Flux is most likely still reconciling. Wait a few seconds, then run the DEPLOYED_IMAGE command and the comparison again.
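The substring test above also reports Success when LATEST_IMAGE_TAG is empty, for example if no prd- tag was found. This happens because every string contains the empty string. The stricter bash sketch below is hypothetical and is not part of the guidance. It rejects an empty tag and requires the deployed image reference to end with :<tag>. It assumes the deployment references images by tag rather than by digest.

```shell
# Hypothetical stricter comparison (not part of the guidance).
# Returns 0 on match, 1 on mismatch, 2 if no tag was supplied.
image_matches_tag() {
  local image=$1 tag=$2
  if [[ -z $tag ]]; then
    echo "no prd- tag found in ECR" >&2
    return 2
  fi
  [[ $image == *":$tag" ]]
}

# Example:
# if image_matches_tag "$DEPLOYED_IMAGE" "$LATEST_IMAGE_TAG"; then echo Success; else echo Failure; fi
```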

Step 4.5

Validate the new application version in the tenant. First, get the application load balancer endpoint and call the producer microservice:

APP_LB=http://$(kubectl get ingress -n tenant-1 -o json | jq -r .items[0].status.loadBalancer.ingress[0].hostname)

curl -s -H "tenantID: tenant-3" $APP_LB/producer | jq

The response should now show the new producer microservice version.

Step 4.6

Because Flux has updated the Git repository, pull the latest changes into your local clone:

cd /home/ec2-user/environment/gitops-gitea-repo

git pull origin main

SECTION 5: Automating Rollbacks with Flux in Helm charts

Step 5.1

Managing Helm Chart Updates

Open gitops-gitea-repo/helm-charts/helm-tenant-chart/values.yaml and edit the consumer resources as follows:

consumer:
  resources:
    limits:
      cpu: 200m
      memory: 256Mi
    requests:
      cpu: 150m
      memory: 200Mi

Then save the file.

Step 5.2

Update the Helm Chart Version

Open gitops-gitea-repo/helm-charts/helm-tenant-chart/Chart.yaml and update the version and appVersion fields from 0.0.1 to 0.0.2:

apiVersion: v2

name: helm-tenant-chart

description: A Helm chart for Kubernetes

type: application

version: 0.0.2

appVersion: "0.0.2"

Then save the file.
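If you bump chart versions often, a small helper avoids typos when computing the next version. The sketch below is a hypothetical bash function and is not part of the guidance. It increments the patch component of a MAJOR.MINOR.PATCH version and does not edit Chart.yaml itself.

```shell
# Hypothetical helper (not part of the guidance): print the next patch version.
next_patch_version() {
  local version=${1-} major minor patch
  local re='^([0-9]+)\.([0-9]+)\.([0-9]+)$'
  if [[ ! $version =~ $re ]]; then
    echo "not a MAJOR.MINOR.PATCH version: '$version'" >&2
    return 1
  fi
  major=${BASH_REMATCH[1]} minor=${BASH_REMATCH[2]} patch=${BASH_REMATCH[3]}
  # 10# forces base 10 so components such as 09 are not read as octal.
  echo "${major}.${minor}.$((10#$patch + 1))"
}

# Example: next_patch_version 0.0.1 prints 0.0.2, the value used above
# for both version and appVersion.
```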

Step 5.3

Retrieve the ECR URL from the ConfigMap and package the updated Helm chart:

export ECR_HELM_CHART_URL=$(kubectl get configmap saas-infra-outputs -n flux-system -o jsonpath='{.data.ecr_helm_chart_url}')

cd /home/ec2-user/environment/gitops-gitea-repo/helm-charts

helm package helm-tenant-chart

This should create the updated chart package, helm-tenant-chart-0.0.2.tgz, in the current directory.

Step 5.4

Push the new chart package to ECR and verify that it is uploaded:

aws ecr get-login-password --region $AWS_REGION | helm registry login --username AWS --password-stdin $ECR_HELM_CHART_URL

helm push helm-tenant-chart-0.0.2.tgz oci://$(echo $ECR_HELM_CHART_URL | sed 's|\(.*\)/.*|\1|')

aws ecr list-images --repository-name gitops-saas/helm-tenant-chart
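The sed expression in the helm push command strips the chart name from ECR_HELM_CHART_URL, because helm push appends the chart name to the OCI reference it receives. You can get the same result with bash parameter expansion. This assumes ECR_HELM_CHART_URL has the form <registry>/gitops-saas/helm-tenant-chart, which is consistent with the repository name used in aws ecr list-images above. oci_repo_base is a hypothetical helper name.

```shell
# Hypothetical helper (not part of the guidance): ${url%/*} drops the last
# path segment (the chart name), leaving the registry plus namespace.
oci_repo_base() {
  local chart_url=$1
  if [[ $chart_url != */* ]]; then
    echo "expected <registry>/<namespace>/<chart>, got: $chart_url" >&2
    return 1
  fi
  echo "oci://${chart_url%/*}"
}

# Example:
# helm push helm-tenant-chart-0.0.2.tgz "$(oci_repo_base "$ECR_HELM_CHART_URL")"
```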

Step 5.5

To speed up the process, force a reconciliation and verify:

flux reconcile source git flux-system

flux get sources chart

After a few seconds, you should see the Helm releases updated to version 0.0.2.

Step 5.6

Automating Rollbacks with Flux

Deleting the latest application image (its latest and prd- tagged versions): first, delete the newest image of the producer microservice to trigger the rollback:

LATEST_TAGS=$(aws ecr describe-images --repository-name producer --region $AWS_REGION --query 'sort_by(imageDetails,&imagePushedAt)[-1].imageTags[]' --output text)

echo "Latest image tags: $LATEST_TAGS"

for tag in $LATEST_TAGS; do

aws ecr batch-delete-image --repository-name producer --image-ids imageTag=$tag

done

aws ecr list-images --repository-name producer
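The loop above makes one batch-delete-image call per tag. --image-ids accepts several image identifiers, so the same deletion can be done in one call. The hypothetical bash helper below builds that command. When DRY_RUN=1 is set, it only prints the command, so you can review what will be deleted first.

```shell
# Hypothetical helper (not part of the guidance): delete several tags from an
# ECR repository with a single batch-delete-image call.
# Set DRY_RUN=1 to print the command instead of running it.
delete_image_tags() {
  local repo=$1 tag
  shift
  if (($# == 0)); then
    echo "no tags given" >&2
    return 1
  fi
  local -a ids=()
  for tag in "$@"; do
    ids+=("imageTag=$tag")
  done
  local -a cmd=(aws ecr batch-delete-image --repository-name "$repo" --image-ids "${ids[@]}")
  if [[ ${DRY_RUN:-0} == 1 ]]; then
    echo "${cmd[*]}"
  else
    "${cmd[@]}"
  fi
}

# Example (leave $LATEST_TAGS unquoted so each tag becomes its own argument):
# DRY_RUN=1 delete_image_tags producer $LATEST_TAGS
```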

It may take a few minutes for the rollback to complete. Use the --watch flag to follow the status of the image policies:

flux get images policy --watch

Step 5.7

Verify that the producer microservice has reverted to the previous version by running the following commands:

flux reconcile source git flux-system

APP_LB=http://$(kubectl get ingress -n tenant-1 -o json | jq -r .items[0].status.loadBalancer.ingress[0].hostname)

curl -s -H "tenantID: tenant-1" $APP_LB/producer | jq

curl -s -H "tenantID: tenant-2" $APP_LB/producer | jq

curl -s -H "tenantID: tenant-3" $APP_LB/producer | jq

As observed, the producer microservice should be successfully reverted to the previous version 0.0.1.

Step 5.8

Rolling Back the Helm Chart

Next, roll back the Helm chart by deleting its latest version. Listing the chart images should show the tags 0.0.1 and 0.0.2. The second command below deletes the 0.0.2 tag:

aws ecr list-images --repository-name gitops-saas/helm-tenant-chart

aws ecr batch-delete-image --repository-name gitops-saas/helm-tenant-chart --image-ids imageTag=0.0.2

aws ecr list-images --repository-name gitops-saas/helm-tenant-chart

Step 5.9

To complete the rollback, force Flux reconciliation for the Helm chart:

flux reconcile source git flux-system

flux get sources chart

It may take a few minutes for all tenants and environments to roll back to version 0.0.1.

In this last section you deleted the latest versions, triggered Flux to reconcile the changes, and verified that the rollbacks were successful. This exercise demonstrates the simplicity and effectiveness of managing version rollbacks in a Flux-driven GitOps environment.

Uninstall the Guidance

To avoid ongoing costs, use the provided cleanup script to remove the AWS resources created by this guidance.

Running the Destroy Script will:

- Clean up ECR repositories and their container images,

- Remove EKS node groups and cluster resources,

- Destroy VPC and related networking components,

- Clean up IAM roles and policies,

- Remove all other infrastructure components created during deployment.

cd /home/ec2-user/eks-saas-gitops/terraform

sh ./destroy.sh <AWS_REGION>

Important: The destruction process may take 15-20 minutes to complete. Make sure you have the AWS permissions needed to delete all the resources created during the initial deployment, as described in the Deployment process overview section.

Related resources

Kubernetes – Kubernetes is open-source software that allows you to deploy and manage containerized applications at scale.

Terraform – Terraform is an infrastructure as code tool that lets you build, change, and version infrastructure safely and efficiently.

Flux – Flux is a tool for keeping Kubernetes clusters in sync with sources of configuration (like Git repositories), and automating updates to configuration when there is new code to deploy.

Tofu Controller – Tofu Controller (previously known as Weave TF-Controller) is a controller for Flux to reconcile OpenTofu and Terraform resources in the GitOps way.

Argo Workflows – Argo Workflows enables users to define and execute complex, multi-step workflows as native Kubernetes objects.

Helm Charts – Helm helps you manage Kubernetes applications — Helm Charts help you define, install, and upgrade even the most complex Kubernetes application.

Git – Git is a free and open source distributed version control system designed to handle everything from small to very large projects with speed and efficiency.

Gitea – Gitea is a painless, self-hosted, all-in-one software development service.

Contributors

Lucas Soriano Alves Duarte, Sr. Specialist Solutions Architect, ISV Containers Team

Daniel Zilberman, Sr. Solutions Architect, AWS Tech Solutions Team

Tiago Reichert, Sr. Specialist Solutions Architect, GTM Containers Team

Bruno Lopes, Sr. Solutions Architect, Geo Containers Team

Pedro Oliveira, Solutions Architect, ENT Global Sales Team

Eric Anderson, Assoc. Specialist Solutions Architect, ISV Containers team

Nikita Ravi Shetty, Assoc. Specialist Solutions Architect, Telco Containers Team

Rodrigo Bersa, Sr. Specialist Solutions Architect, Containers, Specialist AppMod Team

Notices

Customers are responsible for making their own independent assessment of the information in this document. This document: (a) is for informational purposes only, (b) represents AWS current product offerings and practices, which are subject to change without notice, and (c) does not create any commitments or assurances from AWS and its affiliates, suppliers or licensors. AWS products or services are provided “as is” without warranties, representations, or conditions of any kind, whether express or implied. AWS responsibilities and liabilities to its customers are controlled by AWS agreements, and this document is not part of, nor does it modify, any agreement between AWS and its customers.