Guidance for Building Transaction Posting Systems Using Event-Driven Architecture on AWS

Overview

This implementation guide focuses on transaction posting subsystems responsible for posting payments to receiving accounts. In this phase of payment processing, inbound transactions are evaluated, have accounting rules applied to them, and are then posted to receiving accounts. The accounting rules dictate the work needed to process each transaction successfully. Inbound transactions are assumed to have been authorized by an upstream process.

In traditional architectures, the upstream system writes transactions to a log. The log is periodically picked up by a batch-based processing system, processed in bulk, then eventually posted to customer accounts. Transactions (and customers) must wait for the next batch iteration before being processed, which can take multiple days.

Instead of this traditional approach, the reference architecture in this guidance uses event-driven patterns to post transactions in near real time rather than in batches. Transaction lifecycle events are published to an Amazon EventBridge event bus. Rules forward the events to processors, which act on the events and then emit their own events, moving the transaction through its lifecycle. Processors can be added or removed as organizational needs change. Customers see a more up-to-date account balance and can dispute transactions much sooner, and processing load is offloaded from batch systems during critical hours.
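The routing pattern described above can be pictured with a small in-memory sketch. A bus matches each event's `detail-type` against rules, hands the event to the subscribed processors, and queues the follow-up events they return. The sketch does not call AWS. The detail-type names (`TransactionReceived`, `TransactionEnriched`, and so on) and the processors are hypothetical, and in the guidance itself this routing is performed by EventBridge rules, not Python.

```python
from collections import deque
from typing import Callable

Event = dict
Processor = Callable[[Event], list[Event]]


class InMemoryBus:
    """Toy stand-in for an EventBridge custom bus that routes on detail-type."""

    def __init__(self) -> None:
        self.rules: dict[str, list[Processor]] = {}
        self.history: list[str] = []  # detail-types in delivery order

    def add_rule(self, detail_type: str, processor: Processor) -> None:
        self.rules.setdefault(detail_type, []).append(processor)

    def publish(self, event: Event) -> None:
        queue = deque([event])
        while queue:
            current = queue.popleft()
            self.history.append(current["detail-type"])
            for processor in self.rules.get(current["detail-type"], []):
                # Each processor acts on the event and emits follow-up events.
                queue.extend(processor(current))


def enrich(event: Event) -> list[Event]:
    detail = {**event["detail"], "accountType": "checking"}  # hypothetical lookup
    return [{"detail-type": "TransactionEnriched", "detail": detail}]


def apply_rules(event: Event) -> list[Event]:
    outcome = "TransactionApproved" if event["detail"]["amount"] > 0 else "TransactionRejected"
    return [{"detail-type": outcome, "detail": event["detail"]}]


def post(event: Event) -> list[Event]:
    return [{"detail-type": "TransactionPosted", "detail": event["detail"]}]


bus = InMemoryBus()
bus.add_rule("TransactionReceived", enrich)
bus.add_rule("TransactionEnriched", apply_rules)
bus.add_rule("TransactionApproved", post)
bus.publish({"detail-type": "TransactionReceived", "detail": {"id": "t-1", "amount": 25}})
print(bus.history)
# ['TransactionReceived', 'TransactionEnriched', 'TransactionApproved', 'TransactionPosted']
```

Subscribing another processor takes one more `add_rule` call and leaves the existing processors untouched. That property is what makes it straightforward to add or remove processors as needs change.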

Features and benefits

Guidance for Building Payment Systems Using Event-Driven Architecture on AWS provides the following features:

- Inbound transaction source: Translates transactional writes to a stream of transaction events

- Deduplication function: Flags likely duplicate transactions for event processors to filter out

- Samples of subscribed event processors: Shows patterns for attaching event processors to different points in a transaction’s lifecycle

- Business Rule workflow: Implements sample business rules, including branching processing logic

- External posting system integration: Provides a pattern for integration with downstream (external) systems

Use cases

This guidance applies to the following use cases:

- Credit/debit card account posting: Banks and financial institutions that issue credit or debit cards can move from end-of-day (EOD) batch cycles to near real-time account posting.

- ATM transactions: All ATM financial transactions (debit and credit) can be posted directly to the cardholder's account, enabling redundancy for earmarked funds and cycle payment posting.

<!-- The following use cases mentioning specific payment schemes are commented out per issues raised by the Payments team

- Domestic near real-time payments: Real-time payment schemes like FedNow, ACH, UK-FPS, Singapore-G3, UPI-India, NPP-Australia and more can benefit from this guidance by moving to faster clearing and settlement in addition to near real-time posting.

- International near real-time payments and X-border: Similar to the domestic schemes, any international and cross-border payments reap the same benefits. -->

Although this guidance specifically addresses payment processing, the architecture patterns can be generalized to other event-driven use cases.

Architecture overview

This section provides a reference implementation architecture diagram for the components deployed with this guidance.

Architecture diagram

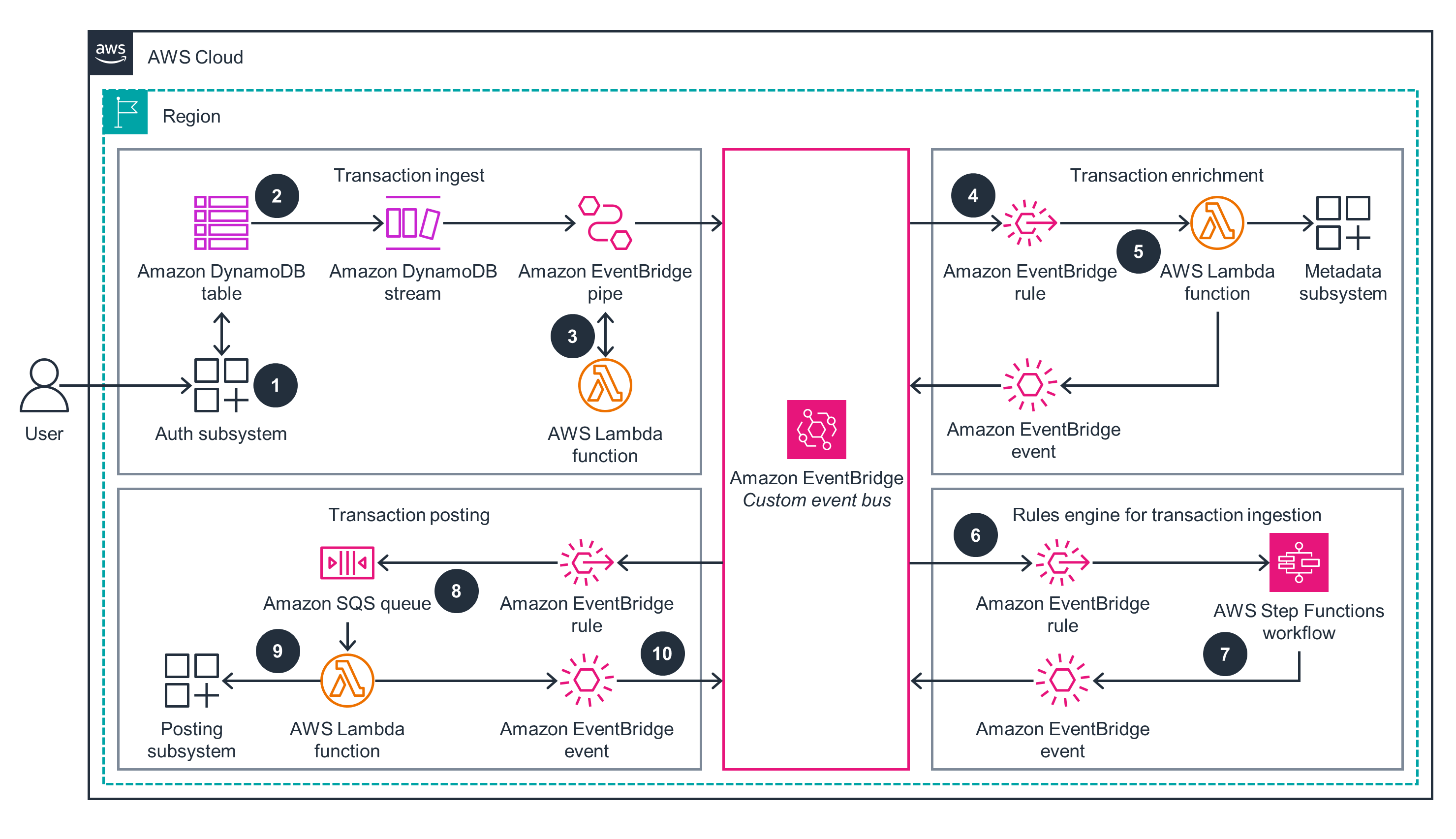

Figure 1: Building Payment Systems Using Event-Driven Architecture on AWS - Reference Architecture

Follow the instructions in the Deploy the Guidance section to build and run your own implementation of the guidance.

Architecture steps

Your user initiates a payment, which the authorization application approves and persists to an Amazon DynamoDB table.

An Amazon EventBridge pipe reads the approved authorization records from the DynamoDB table stream and publishes events to an EventBridge custom event bus.

You can add duplicate-checking logic to the EventBridge pipe through an AWS Lambda deduplication function. This guidance’s sample code uses a DynamoDB table with conditional writes to identify duplicate inbound transactions based on transaction properties and a time window.
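The following is a minimal sketch of the deduplication idea. It hashes a transaction's identifying properties together with a time-window bucket, then attempts a "put if absent" write. If the key already exists, the transaction is flagged as a likely duplicate. The chosen fields (`card`, `merchant`, `amount`, `timestamp`) and the 300-second window are hypothetical. The in-memory store stands in for the DynamoDB conditional write described above and is not the guidance's actual code.

```python
import hashlib
from typing import Callable


def dedup_key(txn: dict, window_seconds: int = 300) -> str:
    """Hash identifying properties plus the time-window bucket (fields are hypothetical)."""
    bucket = int(txn["timestamp"]) // window_seconds
    material = f'{txn["card"]}|{txn["merchant"]}|{txn["amount"]}|{bucket}'
    return hashlib.sha256(material.encode()).hexdigest()


def is_duplicate(txn: dict, put_if_absent: Callable[[str], bool]) -> bool:
    """Flag a likely duplicate when the conditional write finds the key already stored."""
    return not put_if_absent(dedup_key(txn))


# In-memory stand-in for a DynamoDB conditional write. Against a real table the
# equivalent is roughly:
#   table.put_item(Item={"pk": key}, ConditionExpression="attribute_not_exists(pk)")
# which raises ConditionalCheckFailedException when the key already exists.
_seen: set[str] = set()


def memory_put_if_absent(key: str) -> bool:
    if key in _seen:
        return False
    _seen.add(key)
    return True
```

Fixed-size buckets are simple, but two copies of a transaction that straddle a bucket boundary will not be flagged. A real table would typically also set a DynamoDB TTL attribute so that old keys expire on their own.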

For matching events, an EventBridge rule invokes an enrichment Lambda function to add context such as account type and bank details.

The Lambda function queries the relevant metadata and publishes a new event containing the added context to the EventBridge custom event bus.
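The following is a minimal sketch of the enrichment step. It merges account context into the incoming event's `detail` and publishes the result with the EventBridge `PutEvents` API. The metadata dictionary, the `accountId` field, and the source and detail-type names are hypothetical. Only the `payments` bus name comes from this guidance.

```python
import json
from typing import Any

# Hypothetical metadata source; the guidance's function queries its own store.
ACCOUNT_METADATA = {
    "acct-001": {"accountType": "credit", "bankName": "Example Bank"},
}


def build_enriched_entry(event: dict[str, Any], bus_name: str = "payments") -> dict[str, Any]:
    """Return a PutEvents entry carrying the original detail plus account context."""
    detail = dict(event["detail"])
    detail.update(ACCOUNT_METADATA.get(detail["accountId"], {}))
    return {
        "EventBusName": bus_name,
        "Source": "payments.enrichment",  # hypothetical source
        "DetailType": "TransactionEnriched",  # hypothetical detail-type
        "Detail": json.dumps(detail),
    }


def handler(event: dict[str, Any], context: Any) -> None:
    import boto3  # imported here so build_enriched_entry can be tested without AWS

    entry = build_enriched_entry(event)
    response = boto3.client("events").put_events(Entries=[entry])
    if response["FailedEntryCount"]:
        raise RuntimeError(f"PutEvents failed: {response['Entries']}")
```

When `FailedEntryCount` is nonzero, raising makes the invocation fail. The event can then be retried or routed to a dead-letter queue instead of being dropped silently.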

An EventBridge rule watching for enriched events invokes an AWS Step Functions workflow to apply business rules to the event as part of a rules engine.

When an event passes all business rules, the Step Functions workflow publishes a new event back to the EventBridge custom event bus.
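For the business rules step, a Step Functions definition can branch with a `Choice` state and publish directly to the bus through the `arn:aws:states:::events:putEvents` service integration. The following sketch builds such a definition in Python. The threshold, state names, source, and detail-type are hypothetical. The numeric comparison assumes `amount` arrives as a JSON number, not a string.

```python
import json

REVIEW_THRESHOLD = 10_000  # hypothetical limit that routes to manual review

definition = {
    "Comment": "Sketch of a business-rules workflow (not the guidance's definition)",
    "StartAt": "CheckAmount",
    "States": {
        # Branch: large amounts take an alternate path; others are approved.
        "CheckAmount": {
            "Type": "Choice",
            "Choices": [
                {
                    "Variable": "$.detail.amount",
                    "NumericGreaterThan": REVIEW_THRESHOLD,
                    "Next": "FlagForReview",
                }
            ],
            "Default": "PublishApproved",
        },
        "FlagForReview": {
            "Type": "Pass",
            "Result": "ManualReview",
            "ResultPath": "$.decision",
            "End": True,
        },
        # Publish the passing transaction back to the custom event bus.
        "PublishApproved": {
            "Type": "Task",
            "Resource": "arn:aws:states:::events:putEvents",
            "Parameters": {
                "Entries": [
                    {
                        "EventBusName": "payments",
                        "Source": "payments.rules",
                        "DetailType": "TransactionApproved",
                        "Detail.$": "$.detail",
                    }
                ]
            },
            "End": True,
        },
    },
}

print(json.dumps(definition, indent=2))
```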

An EventBridge rule adds a message to an Amazon Simple Queue Service (Amazon SQS) queue as a buffer to avoid overrunning the downstream posting subsystem.

A Lambda function reads from the Amazon SQS queue and invokes the downstream posting subsystem to post the transaction.

The Lambda function publishes the final event back to the EventBridge custom event bus.
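The following is a minimal sketch of the posting worker: the body of an SQS-triggered Lambda function. It posts each message, publishes a follow-up event on success, and reports only the failed messages using Lambda's partial batch response format. `post_transaction` and `publish_posted` are hypothetical callables that stand in for the downstream posting client and an EventBridge `PutEvents` call. The sketch assumes the EventBridge rule delivers the whole event as the SQS message body, which is the default when no input transformer is configured.

```python
import json
from typing import Any, Callable

Detail = dict[str, Any]


def process_batch(
    event: dict[str, Any],
    post_transaction: Callable[[Detail], None],
    publish_posted: Callable[[Detail], None],
) -> dict[str, list[dict[str, str]]]:
    """Post each SQS message and return Lambda's partial batch response.

    Lambda honors this response only when the event source mapping enables
    ReportBatchItemFailures; without it, a normal return marks every message
    in the batch as processed.
    """
    failures = []
    for record in event["Records"]:
        try:
            detail = json.loads(record["body"])["detail"]
            post_transaction(detail)  # call the downstream posting subsystem
            publish_posted(detail)  # emit the final lifecycle event
        except Exception:
            # Only this message goes back to the queue for redelivery; with a
            # redrive policy, it moves to the DLQ after maxReceiveCount attempts.
            failures.append({"itemIdentifier": record["messageId"]})
    return {"batchItemFailures": failures}
```

If `publish_posted` fails after `post_transaction` succeeds, the message is redelivered and the transaction could be posted twice. This is one reason the idempotency note that follows matters.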

Note: Some of the services used in this guidance provide at-least-once delivery. Where necessary, event processors should implement idempotency controls, such as a distributed lock, as mentioned in our architecture decision register.
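One common form of distributed lock is a lease. A processor claims a transaction ID for a limited time, and if the holder crashes, the lease expires. The following in-memory sketch shows the logic. The DynamoDB condition expression in the comment, including the `pk`, `expires_at`, and `lock_owner` attribute names, is an assumption about how you might back the lock with a table. It is not the guidance's implementation.

```python
import time
from typing import Callable


class LeaseLock:
    """In-memory sketch of a lease-based lock keyed by transaction ID.

    A DynamoDB-backed version might use a conditional put such as
      attribute_not_exists(pk) OR expires_at < :now OR lock_owner = :owner
    so only one processor holds an unexpired lease at a time.
    """

    def __init__(self, clock: Callable[[], float] = time.time) -> None:
        self._clock = clock
        self._leases: dict[str, tuple[str, float]] = {}  # key -> (owner, expires_at)

    def acquire(self, key: str, owner: str, ttl_seconds: float) -> bool:
        now = self._clock()
        current = self._leases.get(key)
        if current is not None and current[1] > now and current[0] != owner:
            return False  # another processor holds an unexpired lease
        self._leases[key] = (owner, now + ttl_seconds)
        return True

    def release(self, key: str, owner: str) -> None:
        # Only the current holder may release the lease.
        if self._leases.get(key, (None, 0.0))[0] == owner:
            del self._leases[key]
```

A lock only prevents two processors from working on the same transaction at once. To skip work that has already finished, processors usually also record a completion marker once posting succeeds.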

Note: Most services used in this guidance are Regional. You can use multi-Region patterns where appropriate to achieve your resilience objectives.

AWS Services in this Guidance

| AWS service | Role | Description |
|---|---|---|
| Amazon EventBridge | Core | An EventBridge custom event bus is paired with EventBridge rules to route transaction processing events to subscribed components. The emitted events describe the lifecycle of transactions as they move through the system. Additionally, an EventBridge pipe consumes the inbound transaction stream and publishes events to the event bus. |
| AWS Lambda | Core | Runs custom code in response to events. This guidance includes a sample deduplication function, a transaction enrichment function, a sample posting system integration function, and others. You could alternatively use a container platform like AWS Fargate. |
| Amazon Simple Queue Service (Amazon SQS) | Core | Used as a durable buffer when you need to capture events from rules and also need to govern scale-out. |
| Amazon Simple Storage Service (Amazon S3) | Core | Stores audit and transaction logs captured by EventBridge archives. |
| Amazon DynamoDB | Core | Acts as a possible ingest method for inbound transactions. Transactions are written to a DynamoDB table, which pushes records onto a DynamoDB stream. The stream records are published to the EventBridge event bus to start the processing lifecycle. |
| AWS Step Functions | Supporting | Implements a simple business rules system, initiating alternate processing paths for transactions with unique characteristics. You could alternatively use another business rules system, such as Drools. |
| Amazon CloudWatch | Supporting | Monitors system health through metrics and logs. |
| AWS X-Ray | Supporting | Traces transaction processing across components. |
| AWS Identity and Access Management (IAM) | Supporting | Defines roles and access policies between components in the system. |
| AWS Key Management Service (AWS KMS) | Supporting | Manages encryption of transaction data. |

Plan your deployment

Estimated cost for testing guidance

You are responsible for the cost of the AWS services used while running this guidance. As of April 2024, the cost for running this guidance with the default settings in the us-east-1 (N. Virginia) Region is approximately $1 per month, assuming 3,000 transactions per month.

This guidance uses AWS serverless services, which use a pay-as-you-go billing model, meaning you only pay for the resources you consume. Costs are incurred with usage of the deployed resources. Refer to the Sample cost table for a service-by-service cost breakdown.

We recommend creating a budget through AWS Cost Explorer to help manage costs. Prices are subject to change. For full details, refer to the pricing webpage for each AWS service used in this guidance.

Sample cost table

The following table provides a sample cost breakdown for deploying this guidance with the default parameters in the us-east-1 (N. Virginia) Region for one month, assuming a non-production level of message traffic.

| AWS service | Dimensions | Cost [USD] |
|---|---|---|
| Amazon DynamoDB | 1 GB data storage, 1 KB average item size, 3,000 DynamoDB Streams read requests per month | $0.25 |
| AWS Lambda | 3,000 requests per month with 200 ms average duration, 128 MB memory, 512 MB ephemeral storage | $0.00 |
| Amazon SQS | 0.03 million requests per month | $0.00 |
| AWS Step Functions | 3,000 state machine executions per month with 3 state transitions per execution | $0.13 |
| Amazon Simple Notification Service (Amazon SNS) | 3,000 requests and 3,000 email notifications per month | $0.04 |
| Amazon EventBridge | 3,000 custom events per month, 3,000 events replayed, and 3,000 requests in the pipes | $0.00 |
| Total estimated cost per month | | $1 |

Estimated production-scale cost

A sample cost breakdown for a production-scale load (around 20 million requests per month) is available in this AWS Pricing Calculator estimate. The estimated cost is approximately $1,811.15 USD per month.
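As a quick cross-check of the figures above, the following sketch sums the sample table's line items and converts the production estimate into a cost per 1,000 requests. It uses only numbers quoted in this section. `Decimal` avoids floating-point rounding in currency arithmetic.

```python
from decimal import Decimal

# Line items from the sample cost table (USD per month, ~3,000 transactions).
sample_items = {
    "Amazon DynamoDB": Decimal("0.25"),
    "AWS Lambda": Decimal("0.00"),
    "Amazon SQS": Decimal("0.00"),
    "AWS Step Functions": Decimal("0.13"),
    "Amazon SNS": Decimal("0.04"),
    "Amazon EventBridge": Decimal("0.00"),
}
sample_total = sum(sample_items.values())

# Production estimate: about $1,811.15 per month for about 20 million requests.
production_monthly = Decimal("1811.15")
production_requests = 20_000_000
per_thousand = production_monthly / production_requests * 1000

print(f"sample line items: ${sample_total}")
print(f"production: ${per_thousand.quantize(Decimal('0.01'))} per 1,000 requests")
```

The line items add up to $0.42, which is within the approximately $1 quoted for the test configuration. The production estimate works out to roughly $0.09 per 1,000 requests. Because each transaction touches several services, this is not necessarily a per-transaction price.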

Quotas

Service quotas, also referred to as limits, are the maximum number of service resources or operations for your AWS account.

Quotas for AWS services in this guidance

Make sure you have sufficient quota for each of the services implemented in this guidance. For more information, see AWS service quotas. Note that some services have different default quotas in different AWS Regions.

This guidance is built using AWS serverless services, which scale automatically based on the incoming load, up to your configured service quotas. Development and test workloads for this guidance will likely not reach default quota levels for the involved services. If you decide to scale into production-sized workloads, you will likely need to adjust your service quotas. If you do not, you may encounter throttling errors. You can see counts of these errors in Amazon CloudWatch metrics.

This guidance includes example patterns that protect subcomponents from being overwhelmed by bursts of traffic and that handle throttling errors. For example, an Amazon SQS queue buffers messages instead of sending them directly to an external account posting system. The messages wait in the queue while a worker sends them to the posting system at a rate it can handle. Dead-letter queues (DLQs) and destinations are also configured for asynchronously invoked services to capture messages that encounter errors.
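A common way for a worker to handle throttling errors from a rate-limited downstream system is to retry with capped exponential backoff and full jitter. The following sketch shows that general technique; it is not code from the guidance. `ThrottledError` is a hypothetical exception that your posting client would raise, and `sleep` and `rand` are injectable so the behavior can be tested. AWS SDK clients already retry throttled AWS API calls on their own, so a wrapper like this mainly matters for calls to the external posting system.

```python
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")


class ThrottledError(Exception):
    """Hypothetical error a posting client raises when it is throttled."""


def call_with_backoff(
    operation: Callable[[], T],
    max_attempts: int = 5,
    base_delay: float = 0.1,
    max_delay: float = 5.0,
    sleep: Callable[[float], None] = time.sleep,
    rand: Callable[[float, float], float] = random.uniform,
) -> T:
    """Call `operation`, retrying throttled attempts with capped exponential backoff."""
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    for attempt in range(max_attempts):
        try:
            return operation()
        except ThrottledError:
            if attempt == max_attempts - 1:
                raise  # out of attempts: surface the error so the message is redelivered
            cap = min(max_delay, base_delay * 2**attempt)
            # Full jitter: wait a random time in [0, cap] so that many workers
            # retrying at once do not hit the downstream system in lockstep.
            sleep(rand(0.0, cap))
    raise AssertionError("unreachable")
```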

We recommend reviewing the quotas for the following services when working at a higher scale:

- DynamoDB table write capacity

- Lambda function concurrent executions

- Step Functions state machine StartExecution calls, State Transitions, and open executions

To view the service quotas for all AWS services in the documentation without switching pages, view the information in the Service endpoints and quotas page in the PDF instead.

Security

When you build systems on AWS infrastructure, security responsibilities are shared between you and AWS. This shared responsibility model reduces your operational burden because AWS operates, manages, and controls the components including the host operating system, the virtualization layer, and the physical security of the facilities in which the services operate. For more information about AWS security, visit AWS Cloud Security.

Guidance for Building Payment Systems Using Event-Driven Architecture on AWS leverages AWS Identity and Access Management (IAM) as the primary governing security service. IAM establishes a strong identity foundation and implements the principle of least privilege.

Each component in the system is assigned its own IAM role, and each role has its own set of IAM policies that specify the actions the component can take. Using component-specific roles and policies allows us to define fine-grained rules that restrict each component to only the actions needed to perform its function.
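The following sketch illustrates a component-specific policy. It builds a policy document that lets one component, such as the enrichment function, publish only to the `payments` event bus. The `Sid`, account ID, and Region are placeholders, and the guidance's Terraform configuration defines its own policies.

```python
import json


def put_events_policy(region: str, account_id: str, bus_name: str = "payments") -> dict:
    """Least-privilege policy: allow PutEvents on a single event bus and nothing else."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "PublishToPaymentsBus",  # placeholder statement ID
                "Effect": "Allow",
                "Action": "events:PutEvents",
                "Resource": f"arn:aws:events:{region}:{account_id}:event-bus/{bus_name}",
            }
        ],
    }


print(json.dumps(put_events_policy("us-east-1", "111111111111"), indent=2))
```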

All resources in this guidance require IAM credentials and permissions to invoke. There are no public endpoints in this guidance. Specifically, none of the involved Lambda functions have public function URLs enabled.

The services involved in this guidance use encryption at rest and in transit to protect data. Encryption is managed by AWS KMS.

Deploy the Guidance

The following section includes information about deploying this guidance.

Prerequisites

Operating system

These deployment instructions are optimized to work on Amazon Linux 2 or macOS operating systems.

This guidance builds Lambda functions using Python. The build process currently supports Linux and macOS and was tested with Python 3.11. You need Python and pip to build and deploy.

Third-party tools

This guidance uses Terraform as an infrastructure as code (IaC) provider. You will need Terraform installed to deploy. These instructions were tested with Terraform version 1.7.1.

You can install Terraform on Linux (such as a CodeBuild build agent) with commands like this:

curl -o terraform_1.7.1_linux_amd64.zip https://releases.hashicorp.com/terraform/1.7.1/terraform_1.7.1_linux_amd64.zip

unzip -o terraform_1.7.1_linux_amd64.zip && mv terraform /usr/bin

The guidance is deployed as one Terraform configuration. The root HCL configuration file (main.tf) defines the overall flow, and each submodule is bundled in its own folder in this repository (for example, /sqs for the SQS module). Lambda code is in the /src folder.

The guidance uses a local Terraform backend for deployment simplicity. You may want to switch to a shared backend like Amazon S3 for collaboration, or when using a continuous integration, continuous deployment (CI/CD) pipeline.

AWS account requirements

These instructions require AWS credentials configured according to the Terraform AWS Provider documentation.

The credentials must have IAM permission to create and update resources in the target account.

Services include:

- EventBridge custom event bus, pipes, rules

- Lambda functions

- Amazon SQS queues

- DynamoDB tables and streams

- Step Functions workflows

- Amazon SNS topics

- Amazon S3 buckets

Deployment process overview

Before you launch the guidance, review the cost, architecture, security, and other considerations discussed in this guide. Follow the step-by-step instructions in this section to configure and deploy the guidance into your account.

Time to deploy: Approximately 30 minutes

Deploy the platform

- Log in to your AWS account on your CLI/shell through your preferred auth provider.

- Clone the code repository using the following command:

git clone https://github.com/aws-solutions-library-samples/guidance-for-building-event-driven-payment-systems-on-aws

- Change directory to the source folder inside the repository:

cd guidance-for-building-event-driven-payment-systems-on-aws/source

- Initialize Terraform using the following command:

terraform init

- To see the resources that will be deployed, run the following command. This will not deploy anything to your environment.

terraform plan -var="region=<your target region>"

- To deploy the guidance sample code, run the following command:

terraform apply -var="region=<your target region>"

Terraform will generate a plan, then prompt you to confirm that you want to deploy the listed resources. Type yes if you want to deploy.

Deployment validation

When deployment succeeds, Terraform outputs the ARN for the DynamoDB input table’s stream. It should look something like this:

Apply complete! Resources: 5 added, 7 changed, 5 destroyed.

Outputs:

stream_arn = "arn:aws:dynamodb:us-east-2:111111111111:table/visa/stream/2024-01-04T21:55:22.954"

Confirm that your resources were created by logging in to the AWS Management Console. Make sure you are in the Region you specified in the terraform apply command, then check for your resources.

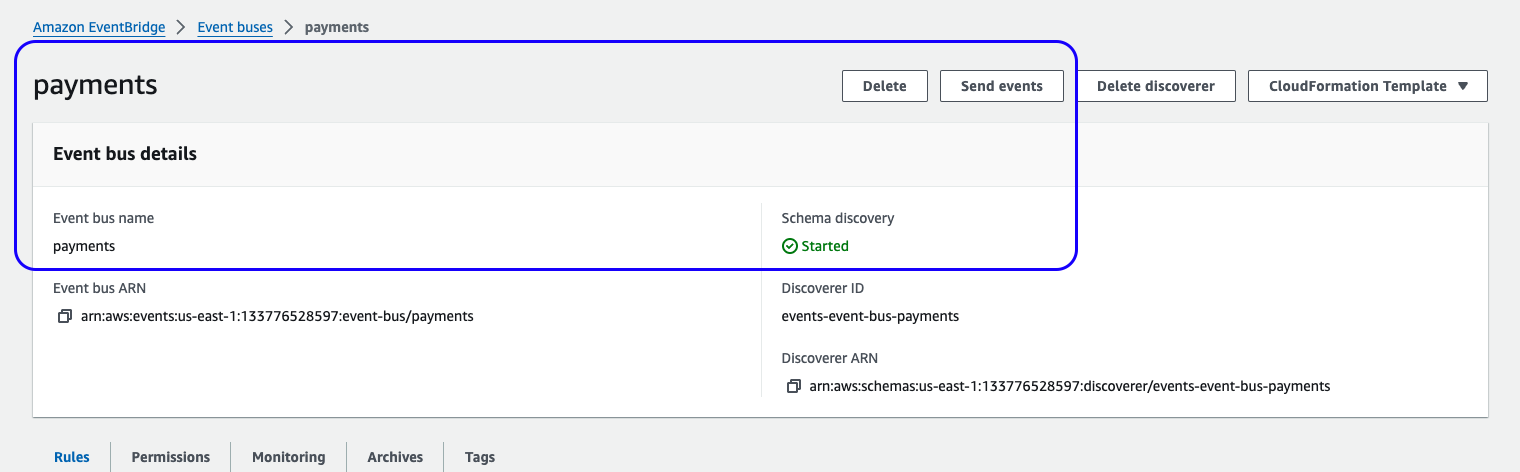

- Open the EventBridge console and verify that a payments custom event bus exists.

Figure 2: Guidance Custom EventBridge bus view in the AWS Management Console

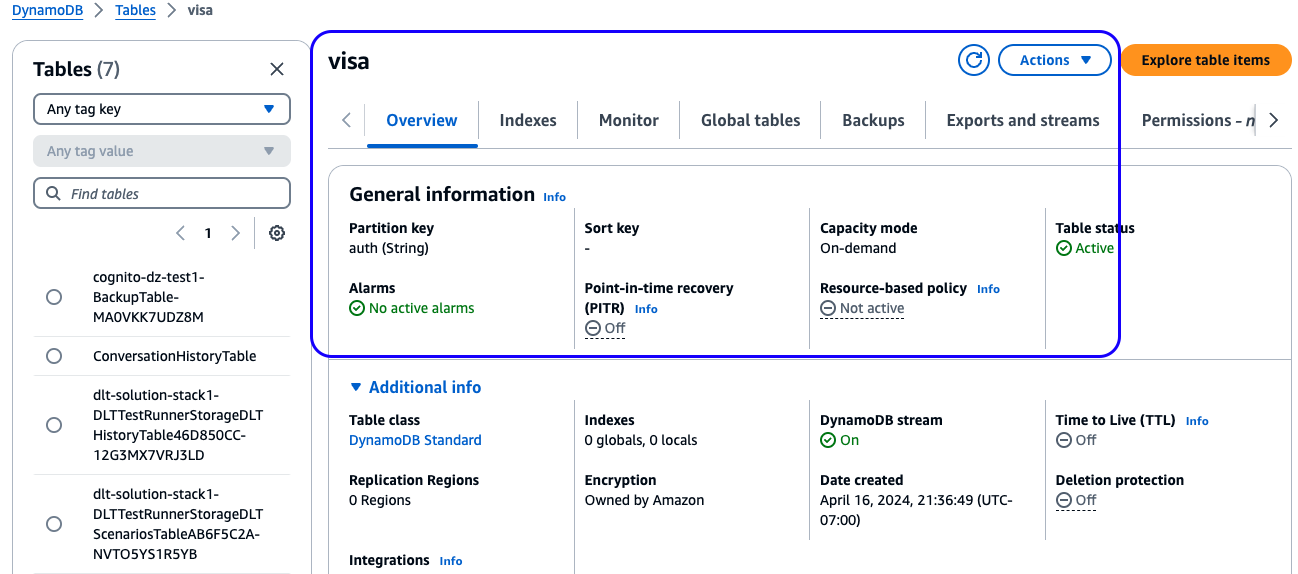

- Open the DynamoDB console and verify that a visa table exists.

Figure 3: Guidance DynamoDB table view in the AWS Management Console

Run the Guidance

You can initiate transaction processing by writing a properly formed record to the visa table in DynamoDB.

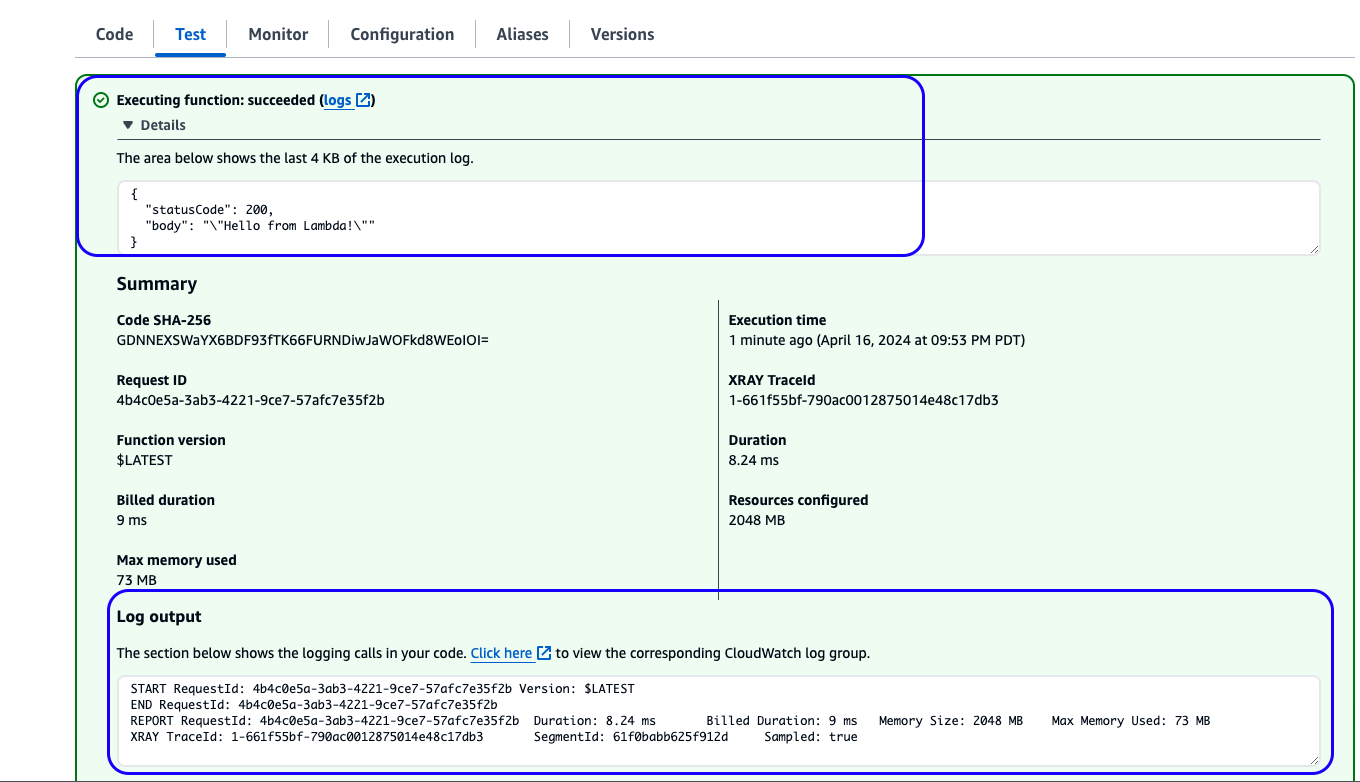

The guidance includes a Lambda function named visa-mock, which you can invoke to write sample records to DynamoDB. You can invoke the function by following these steps:

- Navigate to the Lambda service for your target Region in the AWS Management Console.

- Select the visa-mock function, and open the Test tab.

- Specify a new test event, fill out the Event name field, and change the Event JSON to an empty set of braces:

{}

- Note: The Event JSON content does not matter, as long as it is valid JSON.

- Select the Test button.

- You should see an Executing function: succeeded message and no errors in the Log output.

Figure 4: Successful sample Lambda function execution results in the AWS Management Console

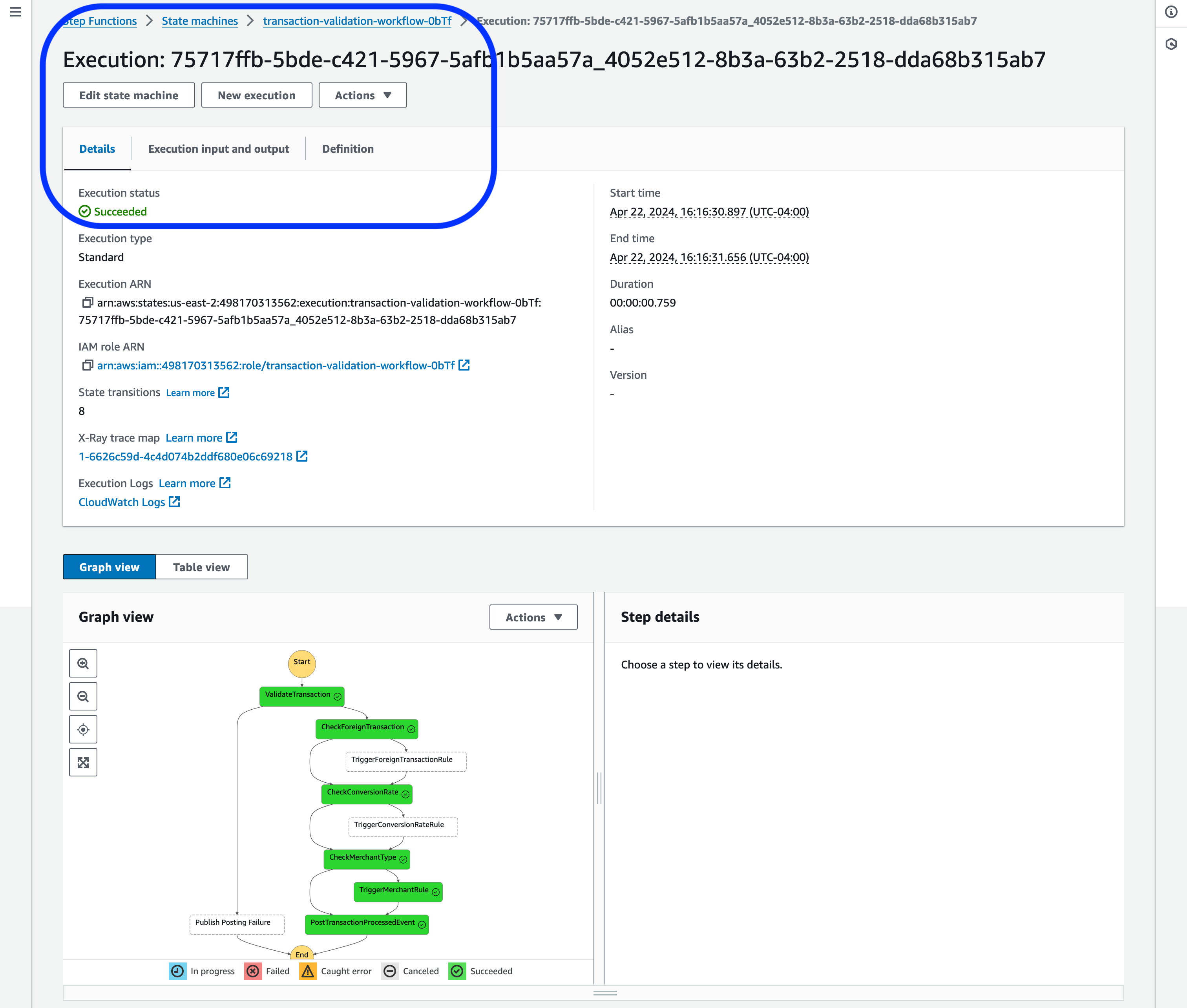

As transactions move through the system, you will see metrics published to CloudWatch, as well as invocations of the included Lambda functions and Step Functions state machine executions. The guidance includes EventBridge archives, which collect records of events that match EventBridge rules.

Figure 5: Successful Step Functions execution results in the AWS Management Console

Uninstall the Guidance

You can uninstall Guidance for Building Payment Systems Using Event-Driven Architecture on AWS manually through the AWS Management Console, or automatically with the Terraform CLI.

To manually remove the deployed resources, use the terraform show command to list the resources that were deployed. Find those resources in the AWS Management Console and delete them. Finally, if you configured an Amazon S3 backend for Terraform state, empty and delete the Terraform state-tracking S3 bucket.

To automatically remove the resources with Terraform, follow these steps:

- Empty the guidance S3 buckets in the AWS Management Console. AWS guidance implementations do not automatically delete S3 bucket content in case you have stored data to retain.

- To remove the provisioned resources, run the following command from the /source directory in the code repository:

terraform destroy -var="region=<your target region>"

Related resources

- Visit ServerlessLand for more information on building with AWS serverless services.

- Visit What is an Event-Driven Architecture? in AWS documentation for more information about event-driven systems.

Next Steps

Consider subscribing your own business rules engine to the EventBridge event bus and processing inbound transactions using your own logic.

Contributors

- Ramesh Mathikumar, Principal DevOps Consultant

- Rajdeep Banerjee, Sr. Solutions Architect

- Brian Krygsman, Sr. Solutions Architect

- Daniel Zilberman, Sr. Solutions Architect, Technical Solutions

Notices

Customers are responsible for making their own independent assessment of the information in this document. This document: (a) is for informational purposes only, (b) represents AWS current product offerings and practices, which are subject to change without notice, and (c) does not create any commitments or assurances from AWS and its affiliates, suppliers or licensors. AWS products or services are provided “as is” without warranties, representations, or conditions of any kind, whether express or implied. AWS responsibilities and liabilities to its customers are controlled by AWS agreements, and this document is not part of, nor does it modify, any agreement between AWS and its customers.