Amazon Redshift Integration Guide

This section provides instructions for configuring Amazon Redshift to query data in tables created by InsuranceLake and to enable InsuranceLake to create Amazon Redshift views of data lake data.

Contents

- Amazon Redshift integration options

- Common setup

- Create data lake views in Amazon Redshift

- Create materialized views in Amazon Redshift

- Use Amazon Redshift for Consume layer storage

Amazon Redshift integration options

You can use an Amazon Redshift provisioned cluster or an Amazon Redshift Serverless workgroup to directly query Data Catalog tables. You can optionally register databases in the Data Catalog created by InsuranceLake as Amazon Redshift external schemas, and create materialized views for improved query performance from Amazon Redshift’s optimized compute engine.

Access to the data lake data from Amazon Redshift relies on Amazon Redshift Spectrum, which requires that the workgroup or cluster be located in the same AWS Region as the data lake.

You can also use AWS Glue ETL jobs to write data directly to Amazon Redshift Managed Storage through AWS Glue connections. AWS Glue connections rely on VPC connectivity between ETL jobs and your Amazon Redshift database.

The following sections cover the setup and testing of each integration option. Some setup steps are common to multiple integration options, which will be identified in the guide.

Common setup

This section covers steps that are common to all 3 methods of integration with Amazon Redshift.

The instructions in the following sections assume you have completed the Quickstart guide and loaded the provided sample data into Data Catalog tables.

Provisioned cluster or serverless workgroup

If you need to create an Amazon Redshift Serverless workgroup or cluster, follow the respective getting started guides:

Ensure you can connect to Amazon Redshift with superuser privileges. You do not need an Amazon Redshift user associated with an IAM identity unless specifically indicated.

Ensure you have followed the CDK Instructions for local deployment setup.

Amazon Redshift permissions for AWS Glue

AWS Glue ETL jobs must have the correct permissions to access your Amazon Redshift resources and execute SQL statements. Follow the below steps to adjust the InsuranceLake AWS Glue stack so that the IAM role used by ETL jobs has these permissions.

Add the following policy document to

get_glue_rolestarting at line 371 in glue_jobs_stack.py, the InsuranceLake AWS Glue jobs stack.Modify the below code to use the correct ARN for your Amazon Redshift Serverless workgroup or cluster. ARNs for Amazon Redshift Serverless workgroups follow the convention:

arn:aws:redshift-serverless:<REGION>:<ACCOUNT ID>:workgroup/<WORKGROUP UUID>. ARNs for Amazon Redshift Provisioned clusters follow the convention:arn:aws:redshift:<REGION>:<ACCOUNT ID>:cluster:<CLUSTER ID>andarn:aws:redshift:<REGION>:<ACCOUNT ID>:dbname:<CLUSTER ID>/*.'RedshiftAccess': iam.PolicyDocument(statements=[ iam.PolicyStatement( effect=iam.Effect.ALLOW, actions=[ 'redshift-data:ExecuteStatement', 'redshift-data:BatchExecuteStatement', 'redshift-serverless:GetCredentials', 'redshift:GetClusterCredentialsWithIAM', ], resources=[ '<CLUSTER OR WORKGROUP ARN>', '<CLUSTER DATABASE>', ] ), iam.PolicyStatement( effect=iam.Effect.ALLOW, actions=[ 'redshift-data:GetStatementResult', 'redshift-data:CancelStatement', 'redshift-data:DescribeStatement', ], resources=[ '*' ], conditions={ 'StringEquals': { 'redshift-data:statement-owner-iam-userid': '${aws:userid}' } } ), ]),Using CDK, redeploy the AWS Glue jobs stack.

cdk deploy Dev-InsuranceLakeEtlPipeline/Dev/InsuranceLakeEtlGlueJobsIdentify the IAM role for the InsuranceLake AWS Glue ETL jobs, which follows the naming convention

<environment>-insurancelake-<region>-glue-role.- If you do not know the name of the role, you can find it in the CloudFormation console.

- Select the InsuranceLake AWS Glue stack,

<Environment>-InsuranceLakeEtlGlueJobs. - Select the

Outputstab. - Find the value for the export named

<Environment>InsuranceLakeGlueRole. This is the IAM role for AWS Glue ETL jobs.

Open the Amazon Redshift query editor as a superuser.

Run the following SQL to grant the InsuranceLake AWS Glue ETL jobs role access to your Amazon Redshift database and the Data Catalog through Amazon Redshift Spectrum.

In the below SQL, you will explicitly create the Amazon Redshift user for the AWS Glue job IAM role so the

GRANTstatements succeed. Normally, the first connection by the role to Amazon Redshift would create the user automatically; however, the first run will fail due to missing permissions and these instructions show you how to avoid that.Adjust the following SQL statements to use the correct AWS Glue role name and Amazon Redshift database name.

CREATE USER "IAMR:dev-insurancelake-us-east-2-glue-role" PASSWORD DISABLE; GRANT USAGE ON DATABASE "awsdatacatalog" TO "IAMR:dev-insurancelake-us-east-2-glue-role"; GRANT CREATE ON DATABASE "dev" TO "IAMR:dev-insurancelake-us-east-2-glue-role";

Create data lake views in Amazon Redshift

Data lake permissions

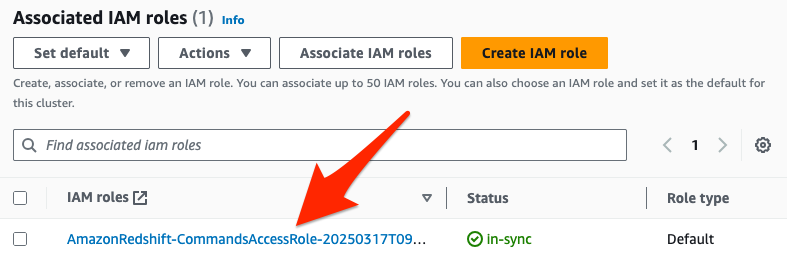

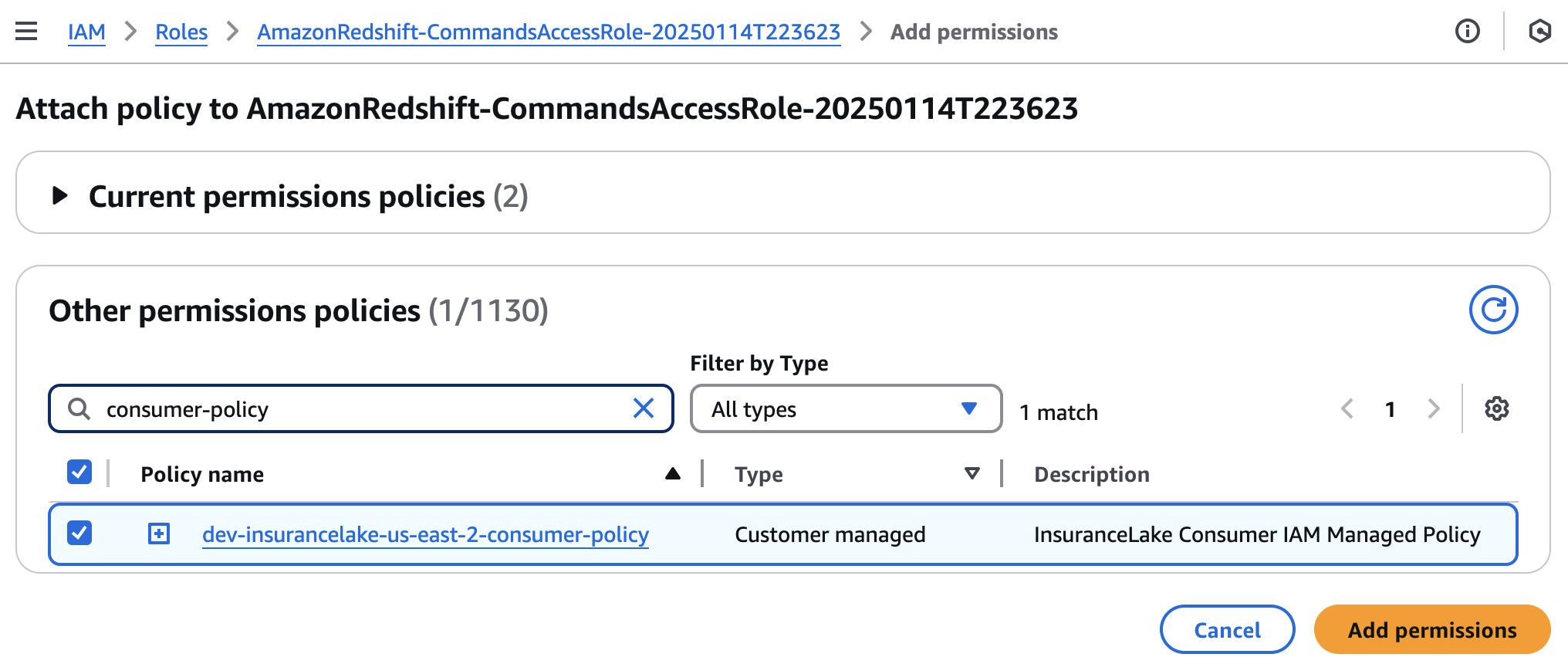

Your Amazon Redshift workgroup or cluster must have the correct permissions to access the data lake. InsuranceLake deployment creates a customer-managed IAM policy that you can use to provide this access.

Identify the default IAM role used by the cluster or the workgroup’s namespace in the Amazon Redshift management console.

Select the IAM role name to open it in AWS Console.

Select

Add permissions, thenAttach policies.Search for the InsuranceLake customer-managed policy, which follows the naming convention

<environment>-insurancelake-<region>-consumer-policy, and select the checkbox to the left of the InsuranceLake.- If you do not know the name of the policy, you can find it in the CloudFormation console.

- Select the InsuranceLake AWS Glue stack,

<Environment>-InsuranceLakeEtlDataLakeConsumer. - Select the

Outputstab. - Find the value for the export named

<Environment>InsuranceLakeConsumerPolicy. This is the customer-managed policy to attach.

Attach InsuranceLake customer-managed policy to the role by selecting

Add permissions.This policy grants the IAM role permissions to decrypt using the InsuranceLake KMS key, and read from InsuranceLake Amazon S3 buckets (Cleanse and Consume) and Data Catalog.

Database connection parameters

The Cleanse-to-Consume AWS Glue job requires extra parameters to create views in Amazon Redshift.

| Parameter Name | Type | Description |

|---|---|---|

| redshift_database | Always required | Database name |

| redshift_workgroup_name | Required for Amazon Redshift Serverless | Workgroup name |

| redshift_cluster_id | Required for Amazon Redshift Provisioned | Cluster ID |

AWS Glue job parameters can be specified in several ways: in a StartJobRun API call, SDK command, or CLI command; by modifying the AWS Glue ETL job parameters (under Job details, Advanced properties); and by modifying the task node DevInsuranceLakeCleanseGlueJobTask in the InsuranceLake Step Function State Machine definition. We recommend modifying the Step Functions State Machine and using CDK to deploy the change.

Follow the steps below to add these parameters to the InsuranceLake data pipeline.

Prepare the set of parameters. Ensure the database, workgroup name, and cluster ID match the names in your environment.

- For a serverless workgroup, use

redshift_databaseandredshift_workgroup_name. Example follows.--redshift_database=dev --redshift_workgroup_name=default - For a provisioned cluster, use

redshift_databaseandredshift_cluster_id. Example follows.--redshift_database=dev --redshift_cluster_id=redshift-cluster-1

- For a serverless workgroup, use

Add two additional arguments to

glue_cleanse_taskstarting at line 164 in step_functions_stack.py, the InsuranceLake Step Functions stack.Adjust the following code section to use the parameters you identified in the previous step.

This example is for an Amazon Redshift Serverless workgroup:

glue_cleanse_task = self.get_glue_job_task( 'Cleanse', 'Cleanse to Consume data load and transform', cleanse_to_consume_job.name, failure_function_task, arguments={ '--source_database_name.$': '$.target_database_name', '--target_database_name.$': "States.Format('{}_consume', $.target_database_name)", '--base_file_name.$': '$.base_file_name', '--redshift_database': 'dev', '--redshift_workgroup_name': 'default', }, )Using CDK, redeploy the Step Functions stack.

cdk deploy Dev-InsuranceLakeEtlPipeline/Dev/InsuranceLakeEtlStepFunctions

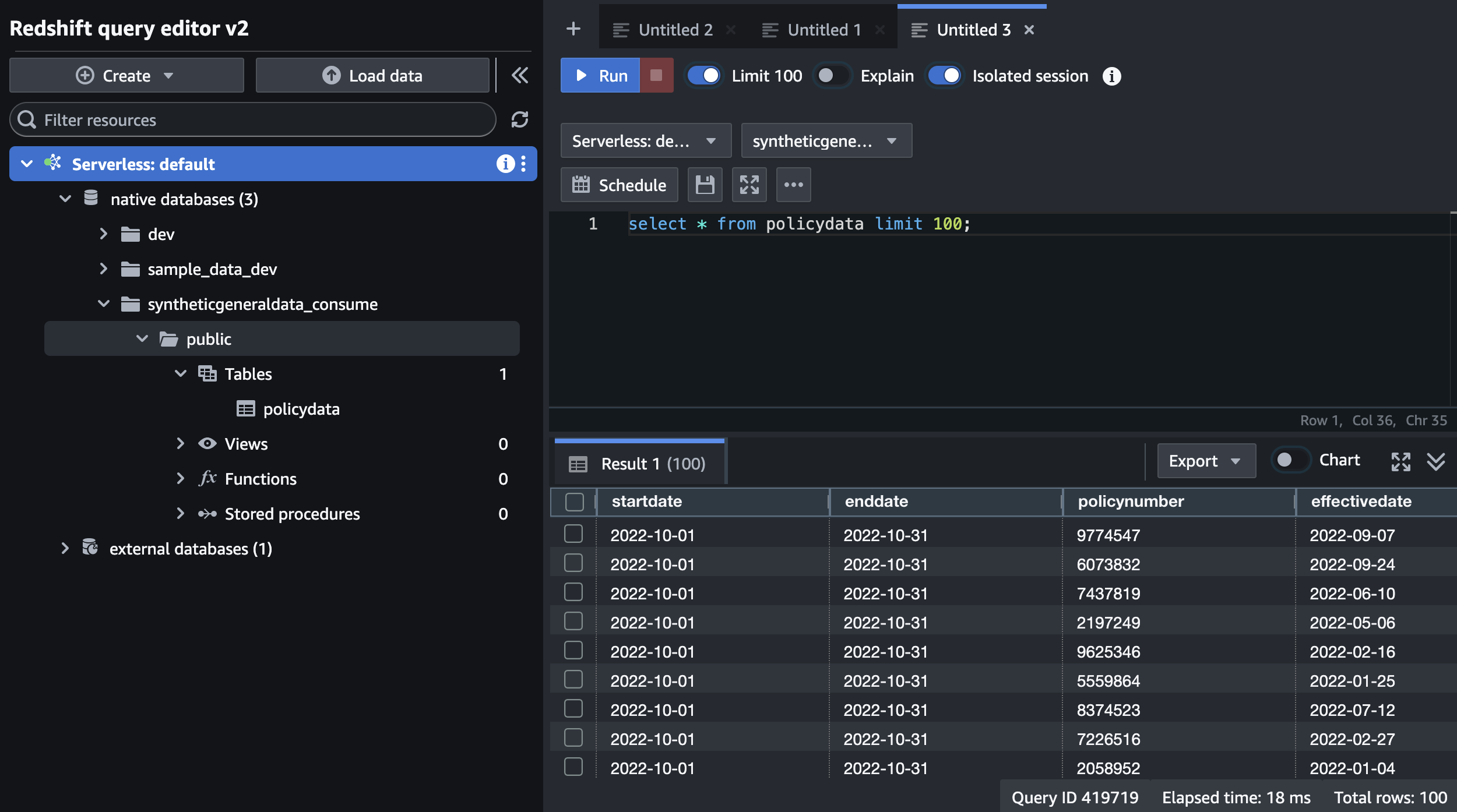

Query data lake views from Amazon Redshift

Access the Amazon Redshift query editor.

In the tree-view pane, expand

external databasesand theawsdatacatalogschema. Notice the Data Catalog databases and tables likesyntheticgeneraldataandpolicydata.If you see the error,

The current user is not authenticated with IAM credentials, edit the connection by clicking the vertical ellipsis next to the Amazon Redshift connection.- Choose

Temporary credentials using your IAM identity, orFederated userunderOther ways to connect. - Select

Create connection.

- Choose

Run the following query. Notice that the dataset is the same as what was shown in Athena.

SELECT * FROM awsdatacatalog.syntheticgeneraldata_consume.policydata LIMIT 100;

Create materialized views in Amazon Redshift

Materialized views of data lake data in Amazon Redshift require the same steps as data lake views in Amazon Redshift, as well as the steps to create an external schema and grant permissions below.

Create an external schema

If you want to create materialized views in Amazon Redshift that use data lake data, follow these steps to create an external schema. You must create an external schema for each data lake database you want to access.

Ensure you have successfully run a workflow to create each database before running these commands, or that you have manually created the databases. The Data Catalog database must exist for the commands to work.

Create an external schema based on the InsuranceLake database in the Data Catalog. This query creates an external schema

datalake_syntheticgeneraldata_consume. The name following theDATABASEidentifier must match the name of the Data Catalog database created by InsuranceLake.For a full list of parameters refer to the Create External Schema documentation.

CREATE EXTERNAL SCHEMA IF NOT EXISTS "datalake_syntheticgeneraldata_consume" FROM DATA CATALOG DATABASE 'syntheticgeneraldata_consume' IAM_ROLE DEFAULT;Grant the AWS Glue role access to the external schema.

See the steps in Amazon Redshift permissions for AWS Glue to identify the correct IAM role name and modify the below SQL accordingly.

GRANT USAGE ON SCHEMA "datalake_syntheticgeneraldata_consume" TO "IAMR:dev-insurancelake-us-east-2-glue-role"; GRANT SELECT ON ALL TABLES IN SCHEMA "datalake_syntheticgeneraldata_consume" TO "IAMR:dev-insurancelake-us-east-2-glue-role";Grant other users or groups access to the external schema as needed.

Repeat these steps for other databases as needed.

For more details and examples of creating materialized views using the external schemas created above, see the Amazon Redshift SQL section of the InsuranceLake Cleanse-to-Consume SQL Usage Documentation.

Create and query materialized views from Amazon Redshift

These instructions assume you have completed the Quickstart guide and loaded the provided sample data into Data Catalog tables.

Trigger the

CombinedDatademonstration workflow.- Copy and paste the following text into a local file,

trigger.csv(the timestamp value is not important).Description,Timestamp Demonstration run,2025-03-28 12:00:00 - Run the following Amazon S3 copy command, substituting the S3 path for your InsuranceLake Collect bucket.

aws s3 cp trigger.csv s3://<Collect S3 bucket>/SyntheticGeneralData/CombinedData/

- Copy and paste the following text into a local file,

Use the Step Functions console to monitor the progress of the workflow.

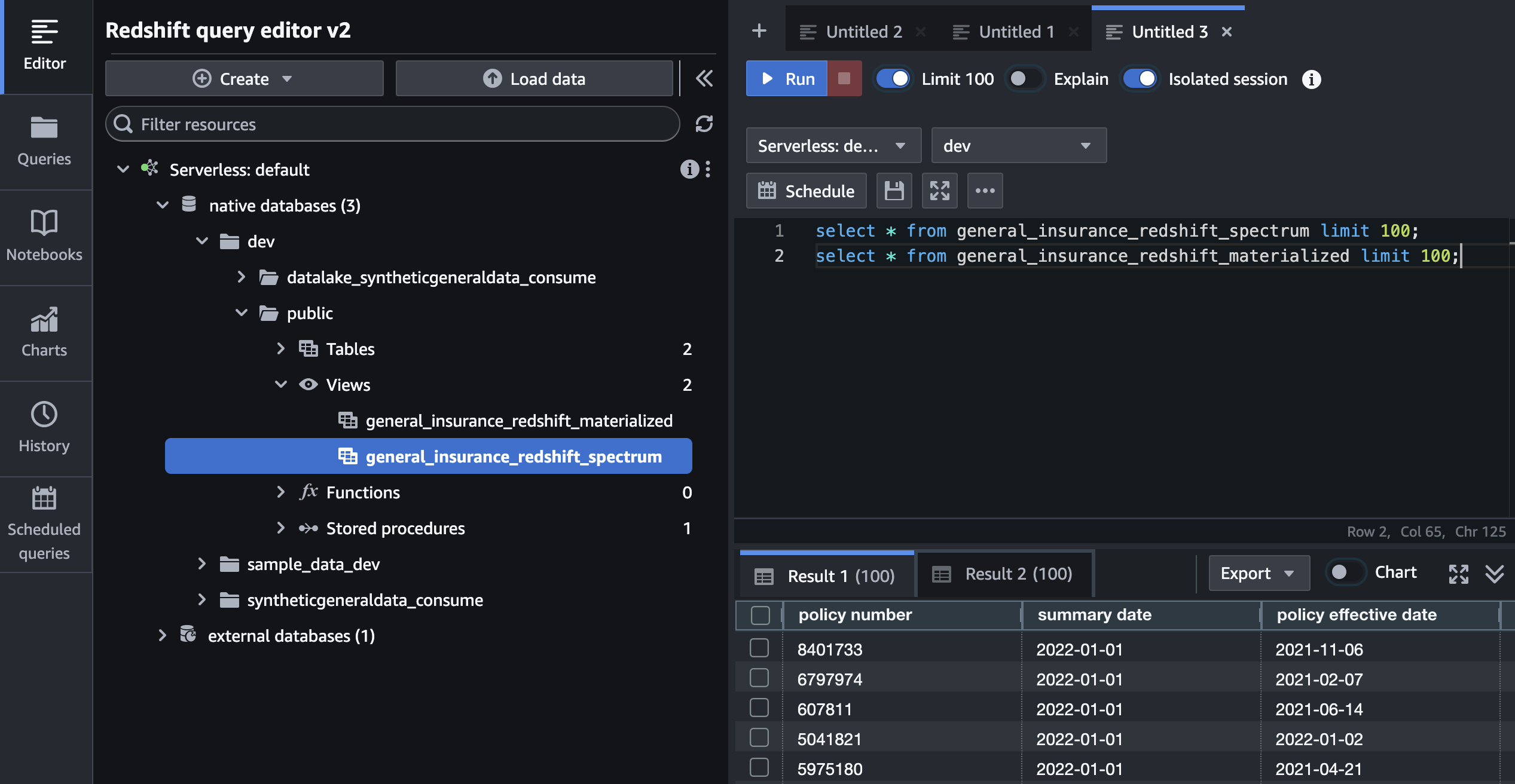

When the workflow completes, access the Amazon Redshift query editor.

Refresh the tree-view pane using the circular arrows icon, and notice that you now have 2 views in the

devdatabase,publicschema.Run the following Amazon Redshift SQL to check the contents of the 2 new views:

select * from general_insurance_redshift_spectrum limit 100; select * from general_insurance_redshift_materialized limit 100;

Other example queries using Amazon Redshift SQL:

Use Amazon Redshift for Consume layer storage

This section provides guidance for you to configure the Cleanse-to-Consume AWS Glue ETL job to write directly to Amazon Redshift Managed Storage in the Consume layer of your data lakehouse.

The following instructions use a Data Catalog Connection for the connectivity to Amazon Redshift. The Data Catalog Connection provisions the VPC automatically and retrieves Amazon Redshift credentials from AWS Secrets Manager.

Prerequisites

You have a shared VPC for AWS Glue ETL jobs and Amazon Redshift database.

You can use InsuranceLake to provision the VPC and create the Redshift workgroup or cluster in the VPC created by InsuranceLake. For more information on configuring a VPC deployment with InsuranceLake, refer to the Application Configuration section of the Full Deployment guide.

Alternatively you can bring your own VPC, create connections to each subnet under Data Catalog Connections, and connect your AWS Glue ETL jobs under

Job Details,Advanced Properties,Connections.Your VPC must have sufficient IP addresses available for service endpoints and Amazon Redshift.

InsuranceLake provisions your VPC using 3 Availability Zones, each with a public and private subnet. This divides the VPC subnet by 6, rounding down to the nearest power of 2. For example, if you specify a /24 VPC subnet in your InsuranceLake configuration, you will have 32 IP addresses in each private subnet.

AWS reserves 5 IP addresses in each subnet (1 network, 1 broadcast, 1 gateway, and 2 reserved). In addition, InsuranceLake VPCs are automatically deployed with 8 service endpoints, each of which uses an additional IP address. In the previous example, the 32 IP address subnet will have 19 available IP addresses.

If you bring your own VPC, you might have different subnet divisions and service endpoints.

Amazon Redshift Serverless workgroups and clusters have varying IP address requirements depending on the RPUs. For details refer to Considerations when using Amazon Redshift Serverless.

You have followed the Amazon Redshift permissions for AWS Glue instructions above.

The AWS Glue ETL job IAM role must have access to the Amazon Redshift cluster or workgroup. In the following steps, you will need to grant additional permissions to the AWS Glue ETL job IAM role and should know the role name. You do not need to grant access to the Data Catalog.

Connector setup

In the Amazon Redshift query editor, ensure you are connected to the correct workgroup or cluster.

Create a user for the Data Catalog Connection with a password.

CREATE USER glue_connector PASSWORD '<PASSWORD>';Create and grant access to each database the ETL job will write to.

You must create each database in Amazon Redshift and grant permissions to the AWS Glue ETL job IAM role in advance of writing any data. The ETL job will create tables automatically.

CREATE DATABASE "syntheticgeneraldata_consume"; GRANT CREATE ON DATABASE "syntheticgeneraldata_consume" TO "IAMR:dev-insurancelake-us-east-2-glue-role";Create a Secrets Manager secret with the username and password you created in the prior steps.

If you are already managing your Amazon Redshift credentials with a Secrets Manager secret, you can use the same secret for the AWS Glue Connector and skip this step.

Refer to Creating a secret for Amazon Redshift database connection credentials for specific instructions.

The AWS Glue ETL job IAM role must have access to the encryption key you choose. We recommend using the InsuranceLake KMS key,

dev-insurancelake-kms-key, because the AWS Glue ETL job IAM role already has access to decrypt using this key.Grant the AWS Glue IAM role access to the Secrets Manager secret containing your Amazon Redshift credentials.

Use the following policy statement as an inline policy for the IAM role:

{ "Effect": "Allow", "Action": "secretsmanager:GetSecretValue", "Resource": "<ARN FOR SECRETS MANAGER SECRET>" }Attach the AWS managed policy

AmazonVPCFullAccessto the AWS Glue ETL job IAM role to allow it to automatically provision the VPC.Create the Data Catalog Connection in the AWS Glue console.

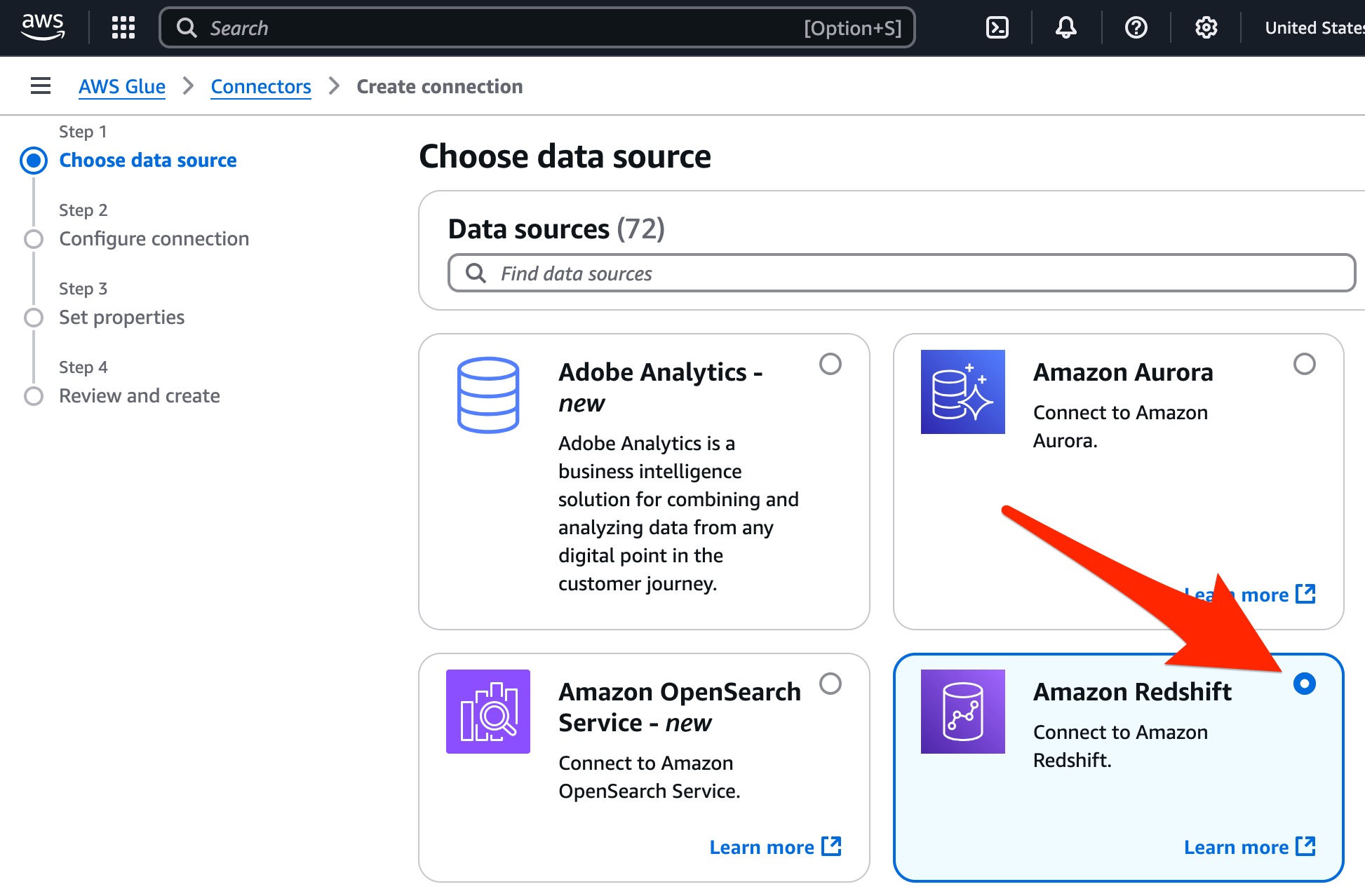

Select

Data connectionsfrom the navigation menu on the left.In the

Connectionssection, selectCreate connection.Select Amazon Redshift as the data source and click

Next. You can filter the list of data sources to help find it.

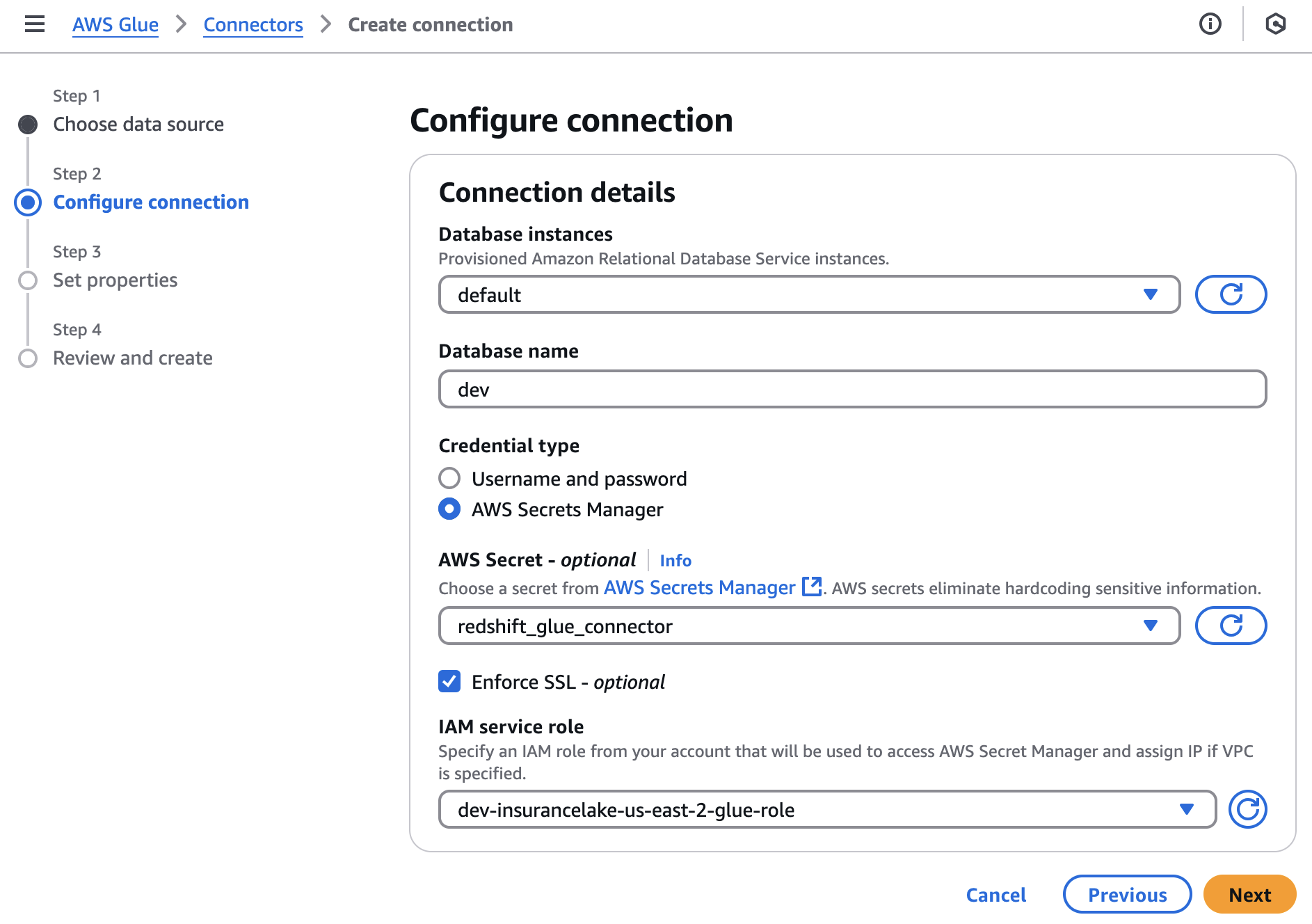

Under

Database instances, choose the workgroup or cluster to connect to.For

Database name, select a database from the list.The database name you choose in the connection details will not be used by the ETL workflow. Any valid database can be used to create the connector.

For

Credential type, select AWS Secrets Manager, then select the secret containing your Amazon Redshift credentials.Check

Enforse SSL.For

IAM service role, select the AWS Glue ETL job IAM role from the dropdown.

Select

Next,Nextagain, thenCreate connection.

Cleanse-to-Consume ETL job modification

In this section, you will modify the InsuranceLake Cleanse-to-Consume ETL job to write to Amazon Redshift Managed Storage instead of Amazon S3.

Edit the Cleanse-to-Consume AWS Glue script starting on line 162.

Comment or delete the code on lines 162 - 195. This section of the code sets the storage location, updates the Data Catalog, purges the S3 path, sets Spark write parameters, and writes the data to S3.

Add the following replacement code, which is used to write data directly to Amazon Redshift.

Change the

catalog_connectionparameter to match the Data Catalog Connection name created in the Connector setup.dyf = DynamicFrame.fromDF(filtered_df, glueContext, f"{args['execution_id']}-convertforredshift") glueContext.write_dynamic_frame.from_jdbc_conf( frame=dyf, catalog_connection='Redshift connection', connection_options={ 'database': args['target_database_name'].lower(), 'dbtable': f'{target_table}', }, redshift_tmp_dir=args['TempDir'], transformation_ctx=f"{args['execution_id']}-redshiftwrite" )For full details on connection options refer to Amazon Redshift connection option reference and JDBC connection option reference.

Add an additional import at line 9.

from awsglue.dynamicframe import DynamicFrame

Using CDK, redeploy the AWS Glue jobs stack.

cdk deploy Dev-InsuranceLakeEtlPipeline/Dev/InsuranceLakeEtlGlueJobs

Query Amazon Redshift Consume layer

Rerun the

PolicyDataworkflow to publish data to Amazon Redshift Managed Storage and wait for the job to complete.If you have recently run the

PolicyDataworkflow, you can execute the pipeline without upload.Use the following query in the Amazon Redshift query editor to check the data in the Consume layer:

select * from syntheticgeneraldata_consume.public.policydata limit 100;