Guidance for Self-Healing Code on AWS

Summary: This implementation guide provides an overview of Guidance for Self-Healing Code on AWS, its reference architecture and components, considerations for planning the deployment, and configuration steps for deploying the Guidance. This implementation guide is intended for solution architects, business decision makers, DevOps engineers, data scientists, and cloud professionals who want to implement Guidance for Self-Healing Code on AWS in their environment.

Overview

Any software inevitably has issues or bugs, but developers often have to de-prioritize fixing them because bug fixes compete with pressure to deliver new products and features. Unresolved bugs distract developers, degrade the user experience, and skew metrics about that experience. Even when bug fixing is prioritized, resolving issues often requires business investment in the form of experienced, skilled engineers who dedicate significant time and focus to understanding and fixing them.

This Guidance helps developers address these issues by combining Amazon CloudWatch, AWS Lambda, and Amazon Bedrock into a system that automatically detects and fixes bugs, improving application reliability and the overall customer experience. In this system, a Log Driven Bug Fixer hooks into an application's CloudWatch log group through a Lambda subscription. Any logs containing application errors are sent for processing, where a Lambda function creates a prompt that includes the stack trace and relevant code files, and then sends it to Amazon Bedrock (Claude v1) to generate code fixes. The modified code is then pushed to source control (git), and a pull request is created for review and deployment.
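As a rough illustration of the prompt-and-fix step, the following sketch builds a prompt from a stack trace and source files and sends it to Amazon Bedrock using the Anthropic Claude text-completions request format (a prompt field that starts with "Human:" and ends with "Assistant:", a max_tokens_to_sample limit, and a completion field in the response). The prompt wording, the anthropic.claude-v2 model ID (the cost assumptions later in this guide reference Claude v2), the token limit, and the helper names are assumptions for illustration, not the Guidance's actual code. The client is passed in so the sketch runs without AWS credentials; in practice it would be boto3.client("bedrock-runtime").

```python
import json
from typing import Any, Protocol


class BedrockRuntimeClient(Protocol):
    """Structural type for the one boto3 bedrock-runtime client method used here."""

    def invoke_model(self, **kwargs: Any) -> dict: ...


# Assumption: illustrative model ID and token limit, not taken from the Guidance's code.
MODEL_ID = "anthropic.claude-v2"
MAX_TOKENS = 1000


def build_prompt(stack_trace: str, source_files: dict[str, str]) -> str:
    """Combine the stack trace and relevant source files into a Claude text-completion prompt."""
    files = "\n\n".join(
        f'<file path="{path}">\n{code}\n</file>' for path, code in source_files.items()
    )
    return (
        "\n\nHuman: An application logged the following Python stack trace.\n"
        f"<stack_trace>\n{stack_trace}\n</stack_trace>\n"
        f"Relevant source files:\n{files}\n"
        "Return corrected versions of these files that fix the error."
        "\n\nAssistant:"
    )


def request_fix(client: BedrockRuntimeClient, prompt: str) -> str:
    """Send the prompt to Amazon Bedrock and return the generated completion text."""
    response = client.invoke_model(
        modelId=MODEL_ID,
        contentType="application/json",
        accept="application/json",
        body=json.dumps({"prompt": prompt, "max_tokens_to_sample": MAX_TOKENS}),
    )
    # response["body"] is a stream; Claude text-completion responses put the text in "completion".
    return json.loads(response["body"].read())["completion"]
```

Injecting the client keeps the prompt logic testable with a stub; swapping in a real bedrock-runtime client does not change the calling code.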

Features and benefits

Guidance for Self-Healing Code on AWS provides the following features:

- Automatic stack trace (error) detection: Implements CloudWatch log subscriptions to automatically filter and detect stack traces

- Error tracking: Automatically tracks the state of error processing in Amazon DynamoDB

- Deduplication: Deduplicates stack traces to avoid redundant processing

- Pull request creation: Integrates with source control systems to automatically create pull requests, which include the bug fixes
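CloudWatch Logs applies the subscription filter on the service side, but the matching idea behind automatic stack trace detection can be shown in a few lines of Python. This sketch assumes a message counts as a Python stack trace when it contains the standard traceback header line; the Guidance's real filter pattern may differ, and the function names are hypothetical.

```python
import re

# Assumption: a log message is treated as a Python stack trace if it contains the
# standard traceback header line. The Guidance's real subscription filter may differ.
TRACEBACK_HEADER = re.compile(r"^Traceback \(most recent call last\):", re.MULTILINE)


def is_python_stack_trace(message: str) -> bool:
    """Return True if the log message contains a Python traceback header."""
    return TRACEBACK_HEADER.search(message) is not None


def stack_trace_messages(messages: list[str]) -> list[str]:
    """Keep only the log messages that contain Python stack traces."""
    return [message for message in messages if is_python_stack_trace(message)]
```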

Use cases

Use cases for this Guidance include Python applications that are:

- Emitting error logs and stack traces to CloudWatch logs.

- Using git source control.

This Guidance will automatically detect stack traces in the CloudWatch logs, create and push a new git branch (including the modified source code), and automatically create a pull request.
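The final step, opening a pull request, goes through the GitHub REST API (POST /repos/{owner}/{repo}/pulls with title, head, base, and body fields). The following sketch only builds the request with the Python standard library; the build_pull_request helper, owner, repository, and branch names are hypothetical, and the token would be the access token collected during configuration.

```python
import json
import urllib.request


def build_pull_request(owner: str, repo: str, token: str, head: str, base: str,
                       title: str, body: str) -> urllib.request.Request:
    """Build (but do not send) a GitHub REST API request that opens a pull request."""
    payload = {"title": title, "head": head, "base": base, "body": body}
    return urllib.request.Request(
        url=f"https://api.github.com/repos/{owner}/{repo}/pulls",
        data=json.dumps(payload).encode("utf-8"),
        method="POST",
        headers={
            "Accept": "application/vnd.github+json",
            "Authorization": f"Bearer {token}",
            "X-GitHub-Api-Version": "2022-11-28",
            "Content-Type": "application/json",
        },
    )
```

Passing the returned request to urllib.request.urlopen would send it; head is the branch holding the fix and base is the branch it should merge into.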

Architecture overview

This section provides a reference implementation architecture diagram for the components deployed with this Guidance.

Architecture diagram

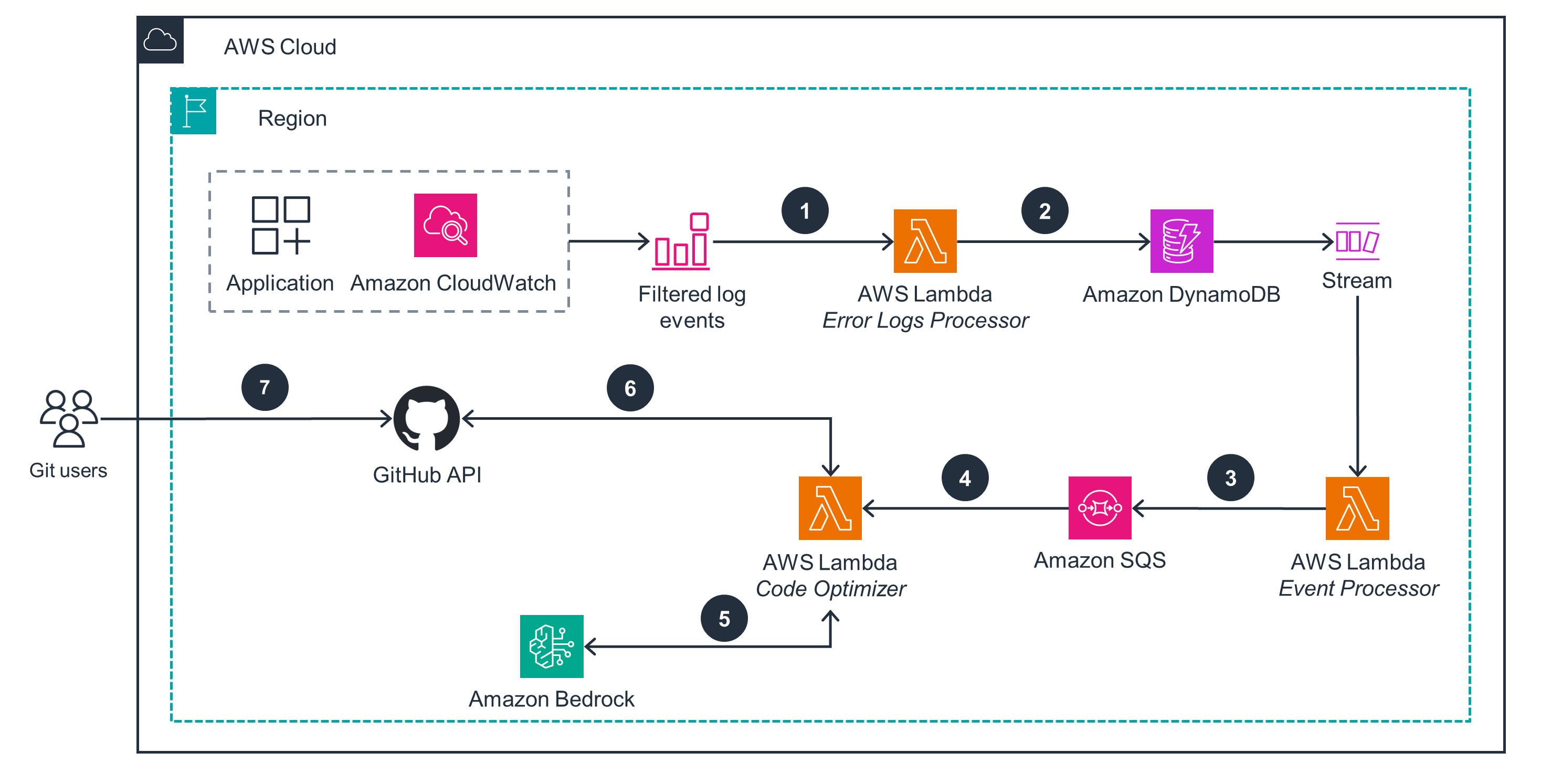

Figure 1: Guidance for Self-Healing Code on AWS architecture diagram

Architecture steps

- The Lambda Error Logs Processor function receives application error logs through a CloudWatch Logs subscription filter that matches only Python stack traces. All Lambda functions assume an AWS Identity and Access Management (IAM) role scoped with the minimum permissions needed to access the required resources.

- The stack trace in the application error log is hashed with MD5 to produce a unique key and stored in an Amazon DynamoDB table that tracks its processing state. Each item in the table represents a deduplicated error message.

- The Lambda Event Processor function obtains events from the DynamoDB stream and sends them to Amazon Simple Queue Service (Amazon SQS) for batch processing.

- Amazon SQS enqueues messages to enable batch processing and concurrency control for the Lambda Code Optimizer function.

- The Lambda Code Optimizer function retrieves the SSH key for the Git repository from Parameter Store, a capability of AWS Systems Manager, and builds a prompt that includes the source code and the relevant error message. It then invokes Amazon Bedrock with the prompt, and Amazon Bedrock returns the modified source code in its response. Amazon Bedrock is a fully managed service that offers a choice of high-performing foundation models (FMs) through a single API, along with a broad set of capabilities you need to build generative AI applications.

- The Lambda Code Optimizer function commits the modified source code to a new Git branch, pushes the branch to the source control system, and creates the corresponding pull request through the GitHub API.

- Git users review the pull request for testing and integration.
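The first two architecture steps can be sketched with the Python standard library. CloudWatch Logs delivers subscription data to Lambda as a base64-encoded, gzip-compressed JSON document under event["awslogs"]["data"], with the individual log lines in logEvents. The helper names below are hypothetical, and the sketch hashes the whole message; a real implementation might first strip values such as request IDs or timestamps so that repeats of the same error map to the same key.

```python
import base64
import gzip
import hashlib
import json


def decode_log_events(event: dict) -> list[dict]:
    """Decode the base64-encoded, gzip-compressed payload CloudWatch Logs sends to Lambda."""
    payload = json.loads(gzip.decompress(base64.b64decode(event["awslogs"]["data"])))
    return payload["logEvents"]


def dedup_key(stack_trace: str) -> str:
    """Return the MD5 hex digest of a stack trace (used for deduplication, not security)."""
    return hashlib.md5(stack_trace.encode("utf-8")).hexdigest()


def unique_stack_traces(event: dict) -> dict[str, str]:
    """Map each distinct message in the batch to its MD5 key, keeping the first occurrence."""
    traces: dict[str, str] = {}
    for log_event in decode_log_events(event):
        message = log_event["message"]
        traces.setdefault(dedup_key(message), message)
    return traces
```

Each key could then be written to the DynamoDB table with a conditional write (an attribute_not_exists condition on the key attribute), so that only the first occurrence of an error creates an item and triggers downstream processing.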

AWS Services in this Guidance

| AWS Service | Description |
|---|---|
| Amazon CloudWatch | Core service - Provides error log event data for problematic source code |
| AWS Lambda | Core service - Implements automation for prompt generation and interaction with Amazon Bedrock |
| Amazon Bedrock | Core service - Provides a broad set of capabilities to build generative AI applications; analyzes and fixes problematic source code and returns the modified code |
| Amazon Simple Queue Service (Amazon SQS) | Core service - Provides batch processing and concurrency control for the Lambda Code Optimizer function |
| Amazon DynamoDB | Core service - Stores stack traces from error logs along with their processing state, and streams changes |
| AWS Systems Manager | Auxiliary service - Stores secrets in Parameter Store |
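The hand-off between DynamoDB and Amazon SQS performed by the Lambda Event Processor function can be sketched as follows. The sketch assumes the stream is configured with a view type that includes new images (NEW_IMAGE or NEW_AND_OLD_IMAGES), that only INSERT events (newly seen errors) are forwarded, and that items carry string attributes; these choices and the function names are illustrative. Each returned batch is shaped for boto3's sqs.send_message_batch(QueueUrl=..., Entries=batch), which accepts at most 10 entries per call.

```python
import json


def new_error_messages(stream_event: dict) -> list[dict]:
    """Extract newly inserted items from a DynamoDB stream event (string attributes only)."""
    messages = []
    for record in stream_event["Records"]:
        if record["eventName"] != "INSERT":
            continue  # Assumption: only newly seen errors are forwarded.
        image = record["dynamodb"]["NewImage"]
        messages.append({name: value["S"] for name, value in image.items() if "S" in value})
    return messages


def to_sqs_batches(messages: list[dict], batch_size: int = 10) -> list[list[dict]]:
    """Group messages into SendMessageBatch entry lists (SQS allows at most 10 per call)."""
    entries = [{"Id": str(i), "MessageBody": json.dumps(m)} for i, m in enumerate(messages)]
    return [entries[i:i + batch_size] for i in range(0, len(entries), batch_size)]
```

Queuing the work rather than calling the Code Optimizer directly is what lets SQS control how many optimizer invocations run at once.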

Cost of Deployment

You are responsible for the cost of the AWS services used while running this Guidance. As of February 2024, the cost for running this Guidance with the default settings in the US East (N. Virginia) Region is approximately $<n.nn> an hour.

We recommend creating a budget through AWS Budgets to help manage costs. Prices are subject to change. For full details, refer to the pricing webpage for each AWS service used in this Guidance.

Sample cost table

The following table provides a sample cost breakdown for deploying this Guidance with the default parameters in the US East (N. Virginia) Region for one month.

| AWS service | Dimensions | Monthly cost [USD] |
|---|---|---|
| Amazon DynamoDB | Average item size 0.5 KB, 0.5 RCU and 1 WCU per message | $17.08 |
| Amazon CloudWatch | 33 KB of logs written and stored per message | $8.77 |
| AWS Lambda | 45 seconds of execution time per unique error message | $2.43 |
| Amazon SQS | 3 requests per message, 1 KB message size | $0.01 |
| Amazon Bedrock | 1,000 input tokens and 1,000 output tokens per unique error message | $32.00 |
| Total | | $60.29 |

Cost assumptions

- 1,000,000 error messages processed per month (1,000 unique errors).

- Free Tier not included in costs.

- 1,000 Claude v2 input tokens and 1,000 output tokens required per message processed.

- DynamoDB table uses the Standard table class and on-demand capacity.

- Lambda functions provisioned with 128 MB of memory and x86 architecture.
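A quick arithmetic check of the sample cost table under the assumptions above: the line items sum to the stated total, which works out to roughly six cents per unique error, and Amazon Bedrock accounts for just over half of the monthly cost. The figures are copied from the table; nothing here is a new price.

```python
# Monthly costs (USD) from the sample cost table.
monthly_costs = {
    "Amazon DynamoDB": 17.08,
    "Amazon CloudWatch": 8.77,
    "AWS Lambda": 2.43,
    "Amazon SQS": 0.01,
    "Amazon Bedrock": 32.00,
}

TOTAL_MESSAGES = 1_000_000  # error messages per month (cost assumption)
UNIQUE_ERRORS = 1_000       # unique errors per month (cost assumption)

total = round(sum(monthly_costs.values()), 2)
per_unique_error = total / UNIQUE_ERRORS
per_message = total / TOTAL_MESSAGES
bedrock_share = monthly_costs["Amazon Bedrock"] / total

print(f"Total: ${total:.2f}")                         # Total: $60.29
print(f"Per unique error: ${per_unique_error:.4f}")   # Per unique error: $0.0603
print(f"Per message: ${per_message:.8f}")             # Per message: $0.00006029
print(f"Bedrock share: {bedrock_share:.1%}")          # Bedrock share: 53.1%
```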

Security

When you build systems on AWS infrastructure, security responsibilities are shared between you and AWS. This shared responsibility model reduces your operational burden because AWS operates, manages, and controls the components including the host operating system, the virtualization layer, and the physical security of the facilities in which the services operate. For more information about AWS security, visit AWS Cloud Security.

This Guidance includes the following security implementations:

- Least-privilege IAM policies are used for the Lambda function roles.

- An AWS Key Management Service (AWS KMS) customer managed key (CMK) is used to encrypt DynamoDB data and Lambda function environment variables.

- Parameter Store parameters are encrypted and stored as SecureString parameters.
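As an illustration of what least privilege can look like for a role such as the Code Optimizer's, the sketch below builds an IAM policy document that allows reading only the parameters under PARAMETER_STORE_PREFIX, decrypting with the customer managed key, and reading and writing a single DynamoDB table. The function name, table name, key ARN, account ID, and exact action list are hypothetical; the policies the Guidance actually deploys are defined in its CloudFormation template.

```python
import json


def least_privilege_policy(region: str, account_id: str, parameter_prefix: str,
                           kms_key_arn: str, table_name: str) -> dict:
    """Build an illustrative IAM policy scoped to one parameter path, one key, and one table."""
    # Parameter ARNs omit the leading "/" of hierarchical parameter names.
    prefix = parameter_prefix.strip("/")
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Action": ["ssm:GetParameter", "ssm:GetParametersByPath"],
                "Resource": [
                    f"arn:aws:ssm:{region}:{account_id}:parameter/{prefix}",
                    f"arn:aws:ssm:{region}:{account_id}:parameter/{prefix}/*",
                ],
            },
            {
                # Needed to read SecureString parameters encrypted with the customer managed key.
                "Effect": "Allow",
                "Action": ["kms:Decrypt"],
                "Resource": [kms_key_arn],
            },
            {
                "Effect": "Allow",
                "Action": ["dynamodb:GetItem", "dynamodb:PutItem", "dynamodb:UpdateItem"],
                "Resource": [f"arn:aws:dynamodb:{region}:{account_id}:table/{table_name}"],
            },
        ],
    }
```

json.dumps on the result yields a document that could be attached as an inline role policy.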

Supported AWS Regions

This Guidance uses Amazon Bedrock, which is not currently available in all AWS Regions. You must launch this Guidance in an AWS Region where Amazon Bedrock is available. For the most current availability of AWS services by Region, refer to the AWS Regional Services List.

Guidance for Self-Healing Code on AWS is supported in the following AWS Regions:

- US East (N. Virginia)

- US West (Oregon)

- Asia Pacific (Singapore)

- Asia Pacific (Tokyo)

- Europe (Frankfurt)

Quotas

Service quotas, also referred to as limits, are the maximum number of service resources or operations for your AWS account.

Quotas for AWS Services in this Guidance

Make sure you have sufficient quota for each of the services implemented in this Guidance. For more information, see AWS service quotas.

To view the service quotas for all AWS services in the documentation without switching pages, view the information in the Service endpoints and quotas page in the PDF instead.

Deploy the Guidance

Prerequisites

You must have the following prerequisites to deploy this Guidance:

- Python 3.9+

- AWS CLI

Deployment process overview

Before you launch the Guidance, review the cost, architecture, security, and other considerations discussed in this guide. Follow the step-by-step instructions in this section to configure and deploy the Guidance into your account.

Time to deploy: Approximately 10 minutes

These deployment instructions are optimized to work best on macOS or Amazon Linux 2023. Deployment on another operating system may require additional steps.

- Clone the sample code repo:

git clone https://github.com/aws-solutions-library-samples/guidance-for-self-healing-code-on-aws.git

- Navigate to the repo folder:

cd guidance-for-self-healing-code-on-aws

- Install the required packages:

pip install -r requirements.txt

- Export the required environment variables:

# CloudFormation stack name.
export STACK_NAME=self-healing-code

# S3 bucket to store zipped Lambda function code for deployments.
# Note: the S3 bucket must be in the same Region as the CloudFormation stack.
export DEPLOYMENT_S3_BUCKET=<NAME OF YOUR S3 BUCKET>

# S3 key prefix under which the zipped Lambda function code is stored (e.g. artifacts/).
export DEPLOYMENT_S3_BUCKET_PREFIX=<NAME OF YOUR S3 BUCKET PREFIX>

# All variables and secrets for this project will be stored under this prefix.
# You can define a different value if this one is already in use.
export PARAMETER_STORE_PREFIX=/${STACK_NAME}/

- Install Python dependencies and run the configuration wizard to securely store variables and secrets in Parameter Store:

pip install -r requirements.txt

python3 bin/configure.py

Example prompt responses for running python3 bin/configure.py:

Enter the target repository's SSH URL (i.e. git@github.com:foo/bar.git). This is the code repo for your application:

git@github.com:antkawam/sample_app.git

Enter the target repository's API key/access token (i.e. ghp_xxxxx). For github repos, please refer this link for creating an access token. https://docs.github.com/en/authentication/keeping-your-account-and-data-secure/managing-your-personal-access-tokens:

ghp_secret_access_token

Enter an SSH private key that has write permissions to the target repository (i.e.
-----BEGIN OPENSSH PRIVATE KEY-----
b3BlbnN...em9uLmNvbQECAw==
-----END OPENSSH PRIVATE KEY-----
) (Enter multiple lines. End with a blank line):

-----BEGIN OPENSSH PRIVATE KEY-----
b3BlbnNzsdfAASDFAAAABG5vbmUAASDFDEAAABAAAAaAAAABNlY2RzYS
...
IAAAAgNXPtnVTIB+dnkInRPgqiATAG5WveCNLg4fnlxma621YAAAADfashjYUAzYzA2
MzAyZjE5YWMa231fasdfaasd20BAgMEBQ==
-----END OPENSSH PRIVATE KEY-----

Enter the CloudWatch log group name for your existing application:

sample_app_log_group

Re-run the above script if you need to make any changes. Alternatively, you can directly modify the Parameter Store values, which are stored under the ${PARAMETER_STORE_PREFIX} prefix.


- Deploy the AWS resources with AWS CloudFormation:

# Create a deployment package (Lambda function source code)
cloudformation/package.sh

# Deploy the CloudFormation template
cloudformation/deploy.sh

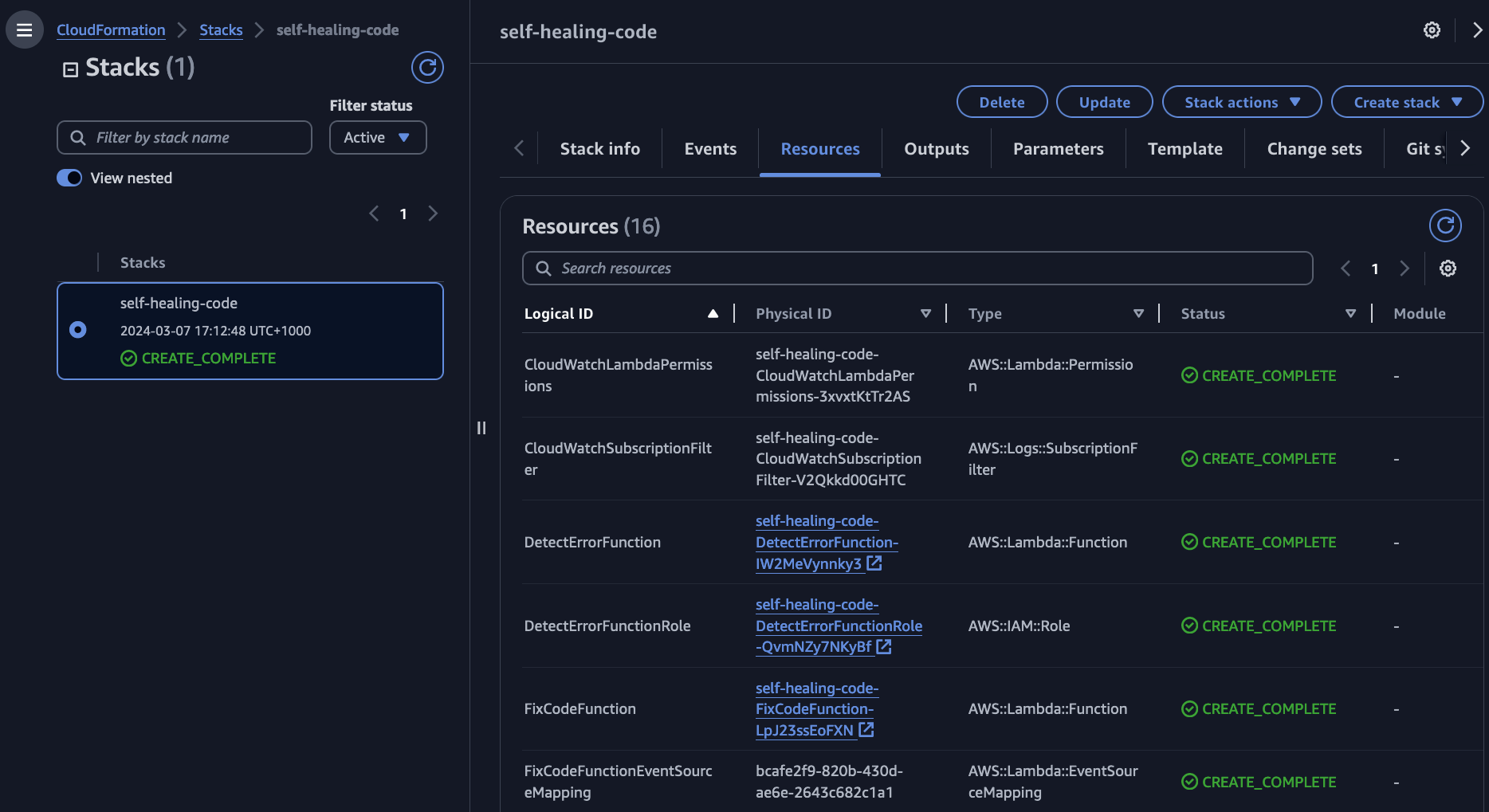

Upon successful deploy of the CloudFormation stack, the AWS console should display similar output to the below image:

Figure 2: CloudFormation deployment output
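If cloudformation/package.sh or cloudformation/deploy.sh fails, one thing worth checking is that the variables exported earlier are set and no longer contain the <...> placeholders. The following check is a hypothetical helper, not part of the repository; the rule that PARAMETER_STORE_PREFIX starts and ends with "/" is an assumption based on the default value /${STACK_NAME}/.

```python
import os
import sys

# Variables exported in the deployment steps.
REQUIRED_VARS = [
    "STACK_NAME",
    "DEPLOYMENT_S3_BUCKET",
    "DEPLOYMENT_S3_BUCKET_PREFIX",
    "PARAMETER_STORE_PREFIX",
]


def check_environment(env: dict) -> list[str]:
    """Return a list of problems with the deployment variables (empty when all look valid)."""
    problems = []
    for name in REQUIRED_VARS:
        value = env.get(name, "")
        if not value:
            problems.append(f"{name} is not set")
        elif "<" in value or ">" in value:
            problems.append(f"{name} still contains a <...> placeholder")
    prefix = env.get("PARAMETER_STORE_PREFIX", "")
    # Assumption: the prefix should look like the default /${STACK_NAME}/.
    if prefix and not (prefix.startswith("/") and prefix.endswith("/")):
        problems.append("PARAMETER_STORE_PREFIX should start and end with '/'")
    return problems


def main() -> int:
    """Print any problems to stderr and return a shell-style exit code."""
    problems = check_environment(dict(os.environ))
    for problem in problems:
        print(f"error: {problem}", file=sys.stderr)
    return 1 if problems else 0
```

Saved as a script (for example, a hypothetical check_env.py ending in sys.exit(main())), it can be run with python3 in the same shell session as the export commands.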

Example usage

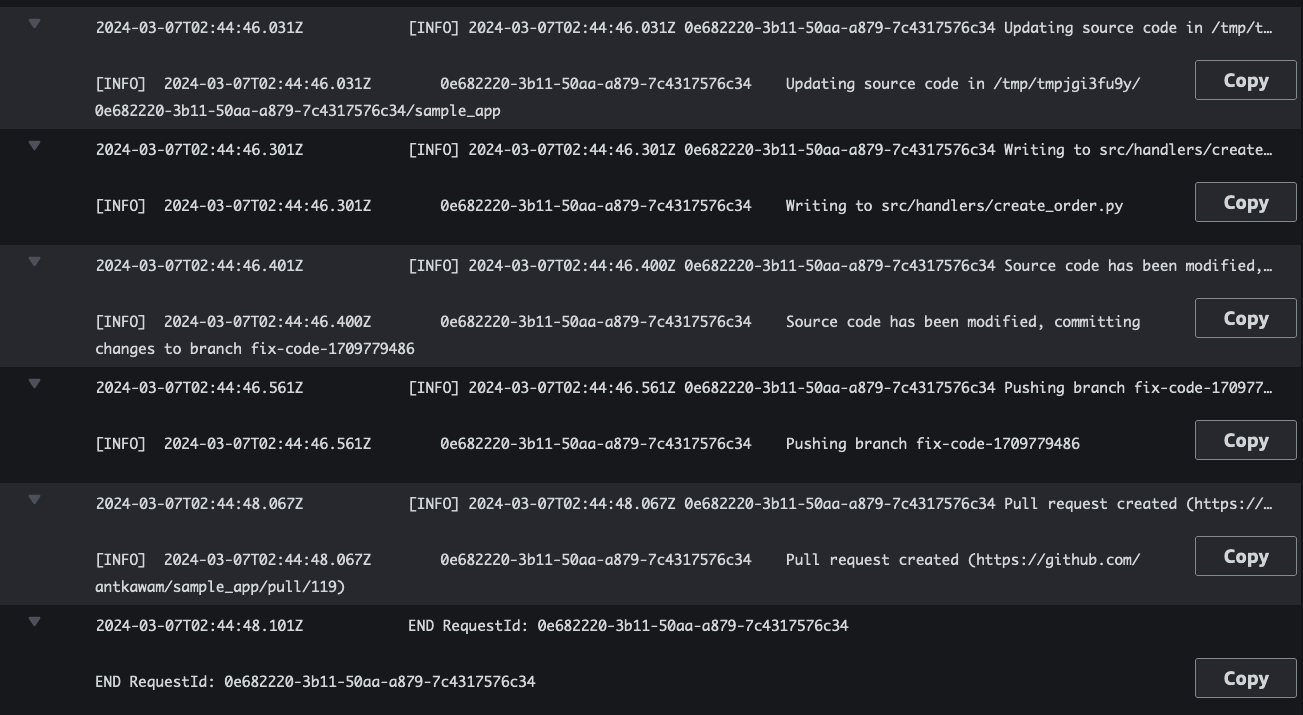

Suppose the target CloudWatch Log Group emits a log event with the following Python stack trace:

[ERROR] KeyError: 'order_items'

Traceback (most recent call last)

File "/var/task/handlers/create_order.py", line 14, in handler

order_items = body["order_items"]

The Self-Healing Code system will detect the error log and trigger its code refactoring logic. The output can be viewed in the FixCodeFunction Lambda function CloudWatch logs:

Figure 3: FixCodeFunction output

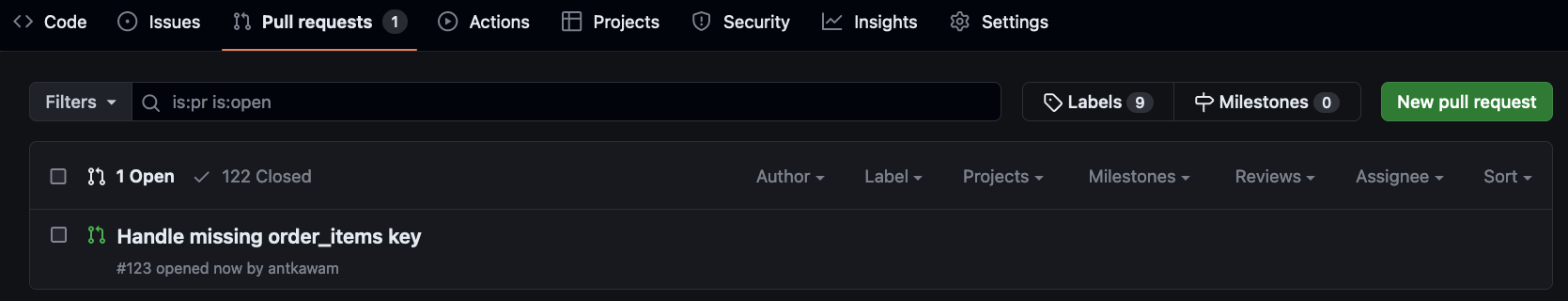

The resulting pull request will then appear in GitHub:

Figure 4: GitHub Pull request
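To include the relevant code files in the prompt, the system needs to know which files the stack trace points at. One way to do this, sketched here under the assumption that the function code in /var/task mirrors the repository root, is to parse the File "...", line N, in function frames from a traceback like the example above. The regular expression, the Frame type, and the function name are illustrative, not the Guidance's actual implementation.

```python
import re
from typing import NamedTuple

# Matches traceback frame lines such as:
#   File "/var/task/handlers/create_order.py", line 14, in handler
FRAME = re.compile(r'^\s*File "(?P<path>[^"]+)", line (?P<line>\d+), in (?P<func>\S+)', re.MULTILINE)

# Assumption: Lambda's /var/task directory mirrors the repository root.
TASK_ROOT = "/var/task/"


class Frame(NamedTuple):
    repo_path: str
    line: int
    function: str


def application_frames(stack_trace: str) -> list[Frame]:
    """Return traceback frames inside TASK_ROOT, with paths made relative to the repository."""
    frames = []
    for match in FRAME.finditer(stack_trace):
        path = match["path"]
        if not path.startswith(TASK_ROOT):
            continue  # Skip frames outside the function code, e.g. Lambda runtime frames.
        frames.append(Frame(path[len(TASK_ROOT):], int(match["line"]), match["func"]))
    return frames
```

For the example stack trace, this yields a single frame for handlers/create_order.py at line 14 in handler, which identifies the file to read from the cloned repository and include in the prompt.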

Uninstall the Guidance

You can uninstall Guidance for Self-Healing Code on AWS by using the AWS Command Line Interface (AWS CLI).

- Confirm that you have exported the same STACK_NAME environment variable used in the deployment steps:

export STACK_NAME=self-healing-code

- Delete the CloudFormation stack:

aws cloudformation delete-stack --stack-name=${STACK_NAME}

- Delete the Parameter Store parameters:

bin/delete_parameters.sh

Contributors

Anthony Kawamoto

Yogesh Pillai

Notices

Customers are responsible for making their own independent assessment of the information in this document. This document: (a) is for informational purposes only, (b) represents AWS current product offerings and practices, which are subject to change without notice, and (c) does not create any commitments or assurances from AWS and its affiliates, suppliers or licensors. AWS products or services are provided “as is” without warranties, representations, or conditions of any kind, whether express or implied. AWS responsibilities and liabilities to its customers are controlled by AWS agreements, and this document is not part of, nor does it modify, any agreement between AWS and its customers.