Guidance for American Sign Language (ASL) 3D Avatar Translator on AWS

Summary: This implementation guide provides an overview of the American Sign Language (ASL) 3D Avatar Translator on AWS, its reference architecture and components, considerations for planning the deployment, and configuration steps for deploying the Guidance to Amazon Web Services (AWS). This guide is intended for Solution Architects, Business Decision Makers, DevOps Engineers, Data Scientists, and Cloud Professionals, who want to implement American Sign Language (ASL) 3D Avatar Translator on AWS in their environment.

Overview

This sample code/prototype demonstrates a novel way to pre-process/transform multilingual phrases into an equivalent literal (or direct) form for translation into American Sign Language (ASL). This pre-processing step improves sign language translation fidelity by expressing user-provided (input) phrases more clearly than they were initially expressed. GenAI is applied to re-interpret these multilingual input phrases into simpler, more explicit English phrases across multiple iterations/passes. The resulting phrases have a different (more charitable) kind of interpretation than the phrases produced by traditional non-GenAI-based translation tools. Finally, this prototype animates a realistic avatar in Unreal Engine (via the MetaHuman plugin) to visually depict the ASL translation of those resulting phrases. ASL translation in this prototype is based on a very loose/naïve interpretation of ASL rules and grammar, primarily involving hand and arm movements, all of which end users can refine. The main goals of this project are to improve the output of existing, robust ASL translation engines (via GenAI) and to provide an engaging multimodal interface for viewing ASL translations.

Features and Benefits

The American Sign Language (ASL) 3D Avatar Translator on AWS provides the following features:

Convert speech to text.

Multilingual comprehension.

Use Generative AI to translate literal-form phrases into ASL.

Animate individual ASL signs via 3D avatar.

Generate contextual image and display it as a background.

Use Cases

Use Case: Digital Real-Time ASL Interpreter on AWS

The Digital Real-Time ASL Interpreter can bridge the gap between spoken languages and American Sign Language (ASL). This innovative application leverages the power of AWS services to provide real-time translation from any spoken language into ASL, enabling seamless communication for individuals with hearing disabilities.

Architecture Overview

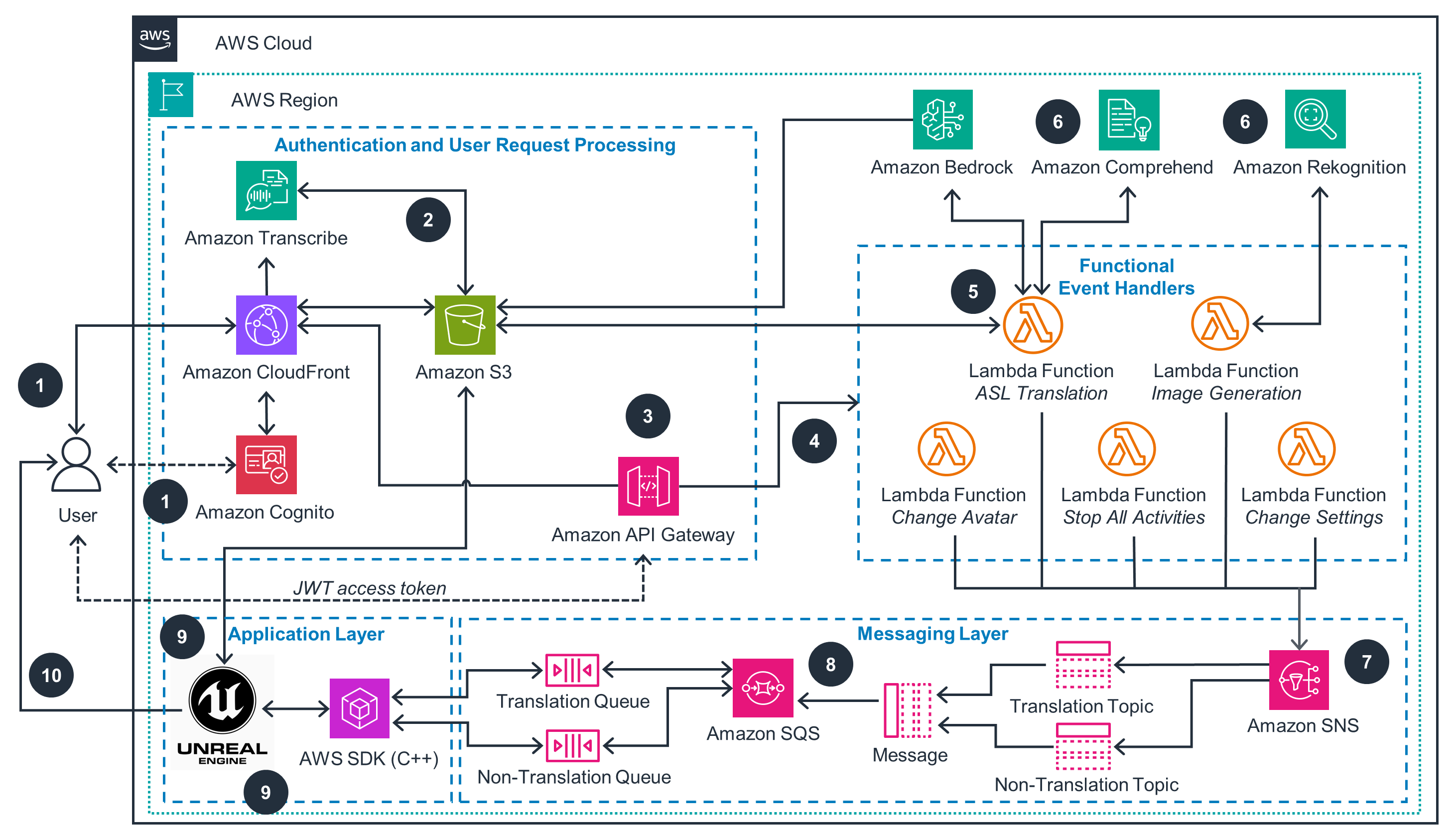

This section provides a reference implementation architecture diagram for the components deployed with this Guidance. It leverages several AWS AI services to enable real-time translation of spoken phrases into animated sign language gestures. The process flow is as follows:

The end-user speaks (or types) a phrase in their preferred spoken language. This voice input is captured and transcribed into text using Amazon Transcribe, a speech-to-text service that supports multiple languages.

The transcribed text is then translated into English using Amazon Translate. This step ensures that the subsequent processing is done in a common language (English).

The translated English text is iteratively simplified using carefully crafted prompts and the Generative AI capabilities of Amazon Bedrock. This step ensures that the final text is concise and suitable for sign language translation.

The simplified English text is then used to animate corresponding American Sign Language (ASL) gestures using an Avatar in Unreal Engine. The Avatar is a 3D character model that can perform realistic sign language animations based on the input text.
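The iterative simplification step can be pictured as a small loop that feeds each pass's output into the next. The sketch below is illustrative only: `simplify_once` is a hypothetical stand-in for the prompt-driven Amazon Bedrock call (not shown here), `drop_filler_words` is a toy replacement for a model, and stopping early when a pass changes nothing is an assumption of this sketch rather than documented behavior of the Guidance.

```python
from typing import Callable


def simplify_iteratively(
    phrase: str,
    simplify_once: Callable[[str], str],
    iterations: int,
) -> str:
    """Apply simplify_once up to `iterations` times, feeding each result back in."""
    if iterations < 0:
        raise ValueError("iterations must be non-negative")
    current = phrase
    for _ in range(iterations):
        simplified = simplify_once(current)
        if simplified == current:
            # Assumption of this sketch: stop once a pass no longer changes the text.
            break
        current = simplified
    return current


def drop_filler_words(text: str) -> str:
    """Toy stand-in for a model call: removes the first filler word it finds."""
    fillers = ("really", "basically", "just")
    words = text.split()
    for index, word in enumerate(words):
        if word.lower() in fillers:
            return " ".join(words[:index] + words[index + 1:])
    return text


result = simplify_iteratively("I really just want coffee", drop_filler_words, iterations=3)
print(result)
```

In a real deployment, the callable would wrap a model invocation with the carefully crafted prompts mentioned above; the loop structure stays the same.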

Architecture Diagram

Figure 1: Guidance for American Sign Language (ASL) 3D Avatar Translator Reference Architecture

Architecture Steps

- User authenticates to Amazon Cognito using an Amazon CloudFront-hosted website or web API (through Amazon Cognito-based JWT access token).

- User types or speaks an input phrase in a chosen language, which Amazon Transcribe transcribes. Transcription is stored in an Amazon Simple Storage Service (Amazon S3) bucket.

- User requests an action (like ASL translate, change avatar, or change background image) through the website or web API (Amazon API Gateway endpoint).

- Based on the user-requested action, API Gateway routes its request to a corresponding AWS Lambda function for processing of that action.

- For ASL Translation requests, a matching AWS Lambda function invokes Amazon Bedrock API to form an ASL phrase for the provided input phrase and obtain a contextual 2D image (to be stored in an S3 bucket).

- Amazon Comprehend and Amazon Bedrock perform multilingual toxicity checks on the input phrase. Amazon Rekognition performs visual toxicity checks on 2D-generated images. Toxicity check results are returned to respective Lambda functions.

- All Lambda functions generate a JSON-based payload to capture a user-requested action for Epic Games Unreal Engine. Each payload is sent to a corresponding Amazon Simple Notification Service (Amazon SNS) topic: Translation or Non-Translation.

- Each Amazon SNS-based payload is transmitted to its corresponding Amazon Simple Queue Service (Amazon SQS) queue for later consumption by Unreal Engine.

- Using the AWS SDK, the Unreal Engine application polls and dequeues Amazon SQS action-based payloads from its queues. Background images are fetched from an S3 bucket for translation requests.

- Based on each payload received, the Unreal Engine application performs a user-requested action and displays resulting video output on that user’s system. This output provides an ASL-equivalent interpretation of an input phrase by displaying a MetaHuman 3D avatar animation with ASL-transformed text displayed.
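The payload routing described in the steps above (a JSON payload per action, sent to either the Translation or the Non-Translation topic) can be sketched as follows. This is a minimal illustration, not the Guidance's actual Lambda code: the `action` and `body` field names and the set of action identifiers are hypothetical, chosen only to show the shape of the idea.

```python
import json

# Hypothetical action identifiers; the guide only names the two topic categories.
TRANSLATION_ACTIONS = frozenset({"translate"})


def topic_category(action: str) -> str:
    """Return which of the two SNS topic categories an action belongs to."""
    return "Translation" if action in TRANSLATION_ACTIONS else "Non-Translation"


def build_message(action: str, body: dict) -> str:
    """Serialize an action as a JSON message body (field names are illustrative)."""
    return json.dumps({"action": action, "body": body}, sort_keys=True)


for action, body in [
    ("translate", {"message": "la vie en rose."}),
    ("change_avatar", {"avatar": "Ada"}),
]:
    print(topic_category(action), build_message(action, body))
```

Keeping translation requests on their own topic and queue lets the Unreal Engine application treat them separately from control actions such as avatar or background changes.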

AWS Services in this Guidance

| AWS Service | Role | Description |
|---|---|---|
| Amazon Transcribe | Core | Convert user speech to text. |
| Amazon Bedrock | Core | Invoke foundation model to translate natural language to ASL. |
| Amazon API Gateway | Core | Create API to invoke Lambda function from user interface. |
| AWS Lambda | Core | Run custom code to generate ASL for simplified text. |
| Amazon Cognito | Core | Authenticate user to access ASL translator. |
| Amazon Comprehend | Core | Run moderation to detect toxicity on generated text. |
| Amazon Rekognition | Core | Run moderation to detect toxicity on generated image. |
| Amazon CloudFront | Core | Fast and secure web-hosted user experience. |
| Amazon Simple Storage Service (S3) | Core | Host user interface code, store generated images. |
| Amazon Simple Notification Service (SNS) | Core | Send the notification to Unreal Engine. |
| Amazon Simple Queue Service (SQS) | Core | Queue notifications for Unreal Engine to consume. |

Cost

You are responsible for the cost of the AWS services used while running this Guidance.

Sample Cost Table

The following table provides a sample cost breakdown for deploying this Guidance with the default parameters in the us-east-1 (N. Virginia) Region for one month. This estimate is based on the AWS Pricing Calculator output for the full deployment of this Guidance. As of February 2025, the average cost of running this Guidance in us-east-1 is approximately $1718/month:

| AWS Service | Dimensions | Cost [USD] |
|---|---|---|
| Amazon Transcribe | 5,000 requests, 34 KB request size | <$1/month |
| Amazon Bedrock | 10 users | <$1/month |
| Amazon API Gateway | 5,000 requests, 128 MB memory allocation, 25 s duration | <$1/month |
| AWS Lambda (event trigger) | 5,000 requests, 128 MB memory allocation, 5 s duration | <$1/month |
| Amazon Cognito | 1 GB storage | <$2/month |
| Amazon Comprehend | 10 GB standard storage | <$1/month |
| Amazon Rekognition | 200 input / 300 output tokens per request (5,000 requests) | $44/month |
| Amazon S3 | 200 input / 300 output tokens per request (5,000 requests) | $26/month |
| Amazon SNS | 730 hours x 1.125 USD/hour | $821/month |
| Amazon SQS | 730 hours x 1.125 USD/hour | $821/month |
| TOTAL | | $1718/month |
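As a quick arithmetic cross-check of the table, the short script below recomputes the hourly line item and brackets the total. It treats the rows listed as "<$1/month" or "<$2/month" as upper bounds only, which is an assumption of this sketch; the figures themselves are copied from the table.

```python
# Monthly figures copied from the sample cost table (USD).
exact_rows = {
    "Amazon Rekognition": 44,
    "Amazon S3": 26,
    "Amazon SNS": 821,
    "Amazon SQS": 821,
}
# Rows listed as "<$N/month": only an upper bound is known.
bounded_rows = {
    "Amazon Transcribe": 1,
    "Amazon Bedrock": 1,
    "Amazon API Gateway": 1,
    "AWS Lambda": 1,
    "Amazon Cognito": 2,
    "Amazon Comprehend": 1,
}

hourly_line = 730 * 1.125          # the "730 hours x 1.125 USD/hour" dimension
lower = sum(exact_rows.values())   # total if every bounded row cost nothing
upper = lower + sum(bounded_rows.values())

print(f"730 h x 1.125 USD/h = {hourly_line:.2f} USD")
print(f"total lies between {lower} and {upper} USD/month")
```

The stated total of $1718/month falls inside that bracket, so the table is internally consistent.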

Security

When you build systems on AWS infrastructure, security responsibilities are shared between you and AWS. This shared responsibility model reduces your operational burden because AWS operates, manages, and controls the components including the host operating system, the virtualization layer, and the physical security of the facilities in which the services operate. For more information about AWS security, visit AWS Cloud Security.

If you grant a user access to the account where this sample is deployed, that user may access information stored by the construct (Amazon Simple Storage Service bucket, Amazon CloudWatch logs). To help secure your AWS resources, follow the best practices for AWS Identity and Access Management (IAM). For Amazon CloudFront, follow the service's best practices for a secure and fast website.

Supported AWS Regions

This Guidance uses the Amazon Bedrock service, which is not currently available in all AWS regions. You must launch this guidance in an AWS region where Amazon Bedrock is available. For the most current availability of AWS services by region, refer to the AWS Regional Services List.

American Sign Language (ASL) 3D Avatar Translator on AWS is supported in the following AWS regions:

| Region Name | Region Code |
|---|---|
| US East (Ohio) | us-east-2 |
| Asia Pacific (Seoul) | ap-northeast-2 |
| US East (N. Virginia) | us-east-1 |
| Europe (Paris) | eu-west-3 |

Quotas

Service quotas, also referred to as limits, are the maximum number of service resources or operations for your AWS account.

Quotas for AWS services in this Guidance

Make sure you have sufficient quota for each of the services implemented in this solution. For more information, see AWS service quotas.

To view the service quotas for all AWS services in the documentation without switching pages, view the information in the Service endpoints and quotas page in the PDF instead.

Deploy the Guidance

Prerequisites

An AWS account. We recommend deploying this solution in a new AWS account.

AWS CLI: configure your credentials

aws configure --profile [your-profile]

AWS Access Key ID [None]: xxxxxx

AWS Secret Access Key [None]: yyyyyyyyyy

Default region name [None]: us-east-1

Default output format [None]: json

Node.js: v18.12.1

AWS CDK: 2.102.0

npm install -g npm aws-cdk

Deployment Process Overview

Time to deploy: Approximately 2 minutes

This project is built using the AWS Cloud Development Kit (AWS CDK). See Getting Started With the AWS CDK for additional details and prerequisites.

Clone this repository.

$ git clone <REPO_ADDRESS>

Enter the code sample directory.

$ cd aws-asl-metahuman

Install dependencies.

$ npm install

Bootstrap AWS CDK resources on the AWS account.

$ cdk bootstrap

Build frontend codebase. These commands will create a build in /frontend/dist folder.

$ cd frontend

$ npm install

$ npm run-script build

Deploy the sample in your account.

$ cdk deploy

This will deploy the frontend and backend components. The UI code is stored in an S3 bucket and served through CloudFront.

Update Config File

- Update the config.js file with the following CDK outputs. This is required to connect the UI to the backend services.

- "IdentityPoolId": "AwsAslCdkStack.IdentityPoolId",

- "userPoolId": "AwsAslCdkStack.UserPoolId",

- "userPoolWebClientId": "AwsAslCdkStack.ClientId",

- "apipath": "AwsAslCdkStack.apigwurl"
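If you deploy with `cdk deploy --outputs-file cdk-outputs.json`, the CDK writes the stack outputs to a JSON file keyed by stack name, and the mapping above can be derived mechanically. The sketch below shows one way to do that. The output key names follow the list above and should be checked against your own `cdk deploy` output; in particular, treating `IdentityPoolId` as an output key is an assumption, and the sample values are placeholders.

```python
import json

STACK_NAME = "AwsAslCdkStack"

# config.js key -> CDK output key, following the list above.
# "IdentityPoolId" as an output key name is an assumption of this sketch.
CONFIG_TO_OUTPUT = {
    "IdentityPoolId": "IdentityPoolId",
    "userPoolId": "UserPoolId",
    "userPoolWebClientId": "ClientId",
    "apipath": "apigwurl",
}


def config_from_outputs(outputs: dict, stack_name: str = STACK_NAME) -> dict:
    """Pick the values config.js needs out of a parsed CDK outputs file."""
    stack_outputs = outputs[stack_name]
    missing = [key for key in CONFIG_TO_OUTPUT.values() if key not in stack_outputs]
    if missing:
        raise KeyError(f"missing CDK outputs: {missing}")
    return {cfg: stack_outputs[out] for cfg, out in CONFIG_TO_OUTPUT.items()}


# Placeholder data shaped like a CDK outputs file; real values come from your deployment.
sample_outputs = {
    "AwsAslCdkStack": {
        "IdentityPoolId": "us-east-1:00000000-example",
        "UserPoolId": "us-east-1_EXAMPLE",
        "ClientId": "exampleclientid",
        "apigwurl": "https://example.execute-api.us-east-1.amazonaws.com/prod/",
        "CfnOutCloudFrontUrl": "https://example.cloudfront.net",
    }
}
config = config_from_outputs(sample_outputs)
print(json.dumps(config, indent=2))
```

The printed values can then be pasted into config.js; the exact layout of that file is not shown in this guide, so the script stops at producing the key/value pairs.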

Model Access

This application uses the following models. Please make sure that your AWS account (Bedrock settings) has access to these models.

- stability.stable-diffusion-xl-v1

- anthropic.claude-v2

Setup ASL 3D Avatar

This application uses the Unreal Engine application with the MetaHuman plugin to display an Avatar. At present, it only works on a Windows machine. You can use either an EC2 instance or a local Windows laptop to drive the avatar.

Please follow these steps to initialize your Avatar and interact with your deployed code on AWS account.

- Build the Unreal Engine project: ASLMetaHuman.uproject (in source/ue folder). Alternatively, you can use the source/ue/bin directory batch files to build, package, cook shaders, run builds after configuring the user.bat file located in this directory.

- Set up AWS_ACCESS_KEY_ID, AWS_SECRET_ACCESS_KEY and AWS_SESSION_TOKEN environment variables.

set AWS_ACCESS_KEY_ID=""

set AWS_SECRET_ACCESS_KEY=""

set AWS_SESSION_TOKEN=""

- Run the built ASLMetaHuman.exe executable file from the command line. Note: this executable filename may be suffixed with the build type information depending on the type of build you performed, for example ASLMetaHuman-Win64-Shipping.exe

Run Application

- Create a user in the Cognito user pool. Go to the Amazon Cognito page in the AWS console and select the created user pool. Under users, select Create user and fill in the form.

- Access the web app (either locally or cloud hosted) and sign in using the user credentials that you just created. For the hosted UI, follow these steps: Click on App Integration. Select App client name. Click View Hosted UI. Sign in with the username and password.

- Copy the CloudFront URL from the CDK output of your stack and paste it into the browser. AwsAslCdkStack.CfnOutCloudFrontUrl = "https://<cloudfrontdomainname>.cloudfront.net"

- Enter the username and password that were created in the Cognito pool.

- Click on Live transcription.

- Send text or change avatar from the UI.

Troubleshoot

- Verify that the user is able to log in and invoke TranslationLambda.

- Check Dev Tools on browser for errors.

- Check TranslationLambda logs for errors.

- Verify that the UI is able to send message on SQS to Unreal Engine.

- The Unreal Engine avatar polls its SQS queues for messages before performing activities. Check whether there are any messages on the outputactivity.fifo and translationactitivity.fifo queues.

- If there are messages on those queues and the avatar is not able to consume them, verify that your AWS credentials (AWS_ACCESS_KEY_ID, AWS_SECRET_ACCESS_KEY and AWS_SESSION_TOKEN) are correct and have not expired.
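The queue check in the last two items can also be scripted. The sketch below reads the approximate number of waiting messages for each queue through the SQS `GetQueueUrl` and `GetQueueAttributes` operations. It assumes boto3 is installed and credentials are configured, and it uses the queue names exactly as spelled in this guide; the names in your account may differ (for example, if the stack adds a prefix), so adjust `QUEUE_NAMES` accordingly.

```python
QUEUE_NAMES = ("outputactivity.fifo", "translationactitivity.fifo")  # spelled as in this guide


def queue_depths(sqs_client, queue_names=QUEUE_NAMES) -> dict:
    """Return the approximate number of visible messages per queue."""
    depths = {}
    for name in queue_names:
        queue_url = sqs_client.get_queue_url(QueueName=name)["QueueUrl"]
        attributes = sqs_client.get_queue_attributes(
            QueueUrl=queue_url,
            AttributeNames=["ApproximateNumberOfMessages"],
        )["Attributes"]
        # SQS returns attribute values as strings.
        depths[name] = int(attributes["ApproximateNumberOfMessages"])
    return depths


def main() -> None:
    # Imported lazily so queue_depths can be exercised without AWS access.
    import boto3

    for name, depth in queue_depths(boto3.client("sqs")).items():
        print(f"{name}: ~{depth} message(s) waiting")
```

Call `main()` from a shell that has the same credentials as the Unreal Engine application. A count that keeps growing suggests the avatar is not consuming messages.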

Test Amazon API Gateway Endpoints Directly

For testing purposes, you can trigger different actions in Unreal Engine directly through the provisioned Amazon API Gateway. This API uses Amazon Cognito as an authorizer to restrict calls only to authorized users, thus you first need to generate an access token.

- (If not already done) Create a user in the Cognito user pool. Go to the Amazon Cognito page in the AWS console, then select the created user pool. Under users, select Create user and fill in the form.

- Once created, the user will need their password updated. You can do that through the CLI.

Note: In the invocations below, replace example_user with your username, example_password with your password, example_app_client_id with your app client ID, and example_user_pool_id with your user pool ID.

For instance:

aws cognito-idp admin-set-user-password --user-pool-id example_user_pool_id --username example_user --password example_password --permanent

- Generate an authorization token for your request:

aws cognito-idp initiate-auth --auth-flow USER_PASSWORD_AUTH --auth-parameters USERNAME=example_user,PASSWORD=example_password --client-id example_app_client_id

Example: initiate-auth AWS CLI command response:

{

"AuthenticationResult": {

"AccessToken": "abCde1example",

"IdToken": "abCde2example",

"RefreshToken": "abCde3example",

"TokenType": "Bearer",

"ExpiresIn": 3600

},

"ChallengeParameters": {}

}

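Take the value in "AccessToken" and store it (you can export it as an environment variable). Note that on Windows, setx makes the variable available only in command windows opened afterwards, not in the current one.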

Linux

export TOKEN=AccessTokenValue

Windows

setx TOKEN "AccessTokenValue"
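If you prefer not to copy the token by hand, the response can be parsed programmatically. The helper below is a small sketch that takes the JSON text printed by the initiate-auth command and returns the access token. The function name is hypothetical, and the sample string simply repeats the example response shown above.

```python
import json


def extract_access_token(cli_output: str) -> str:
    """Return AuthenticationResult.AccessToken from initiate-auth JSON output."""
    response = json.loads(cli_output)
    result = response.get("AuthenticationResult")
    if not result:
        # Cognito answers with a challenge instead of tokens in some cases,
        # for example when the user must still set a new password.
        challenge = response.get("ChallengeName", "unknown")
        raise ValueError(f"no tokens returned (challenge: {challenge})")
    return result["AccessToken"]


sample = """
{
  "AuthenticationResult": {
    "AccessToken": "abCde1example",
    "IdToken": "abCde2example",
    "RefreshToken": "abCde3example",
    "TokenType": "Bearer",
    "ExpiresIn": 3600
  },
  "ChallengeParameters": {}
}
"""
print(extract_access_token(sample))
```

The explicit error for the challenge case is a design choice of this sketch: it makes a missing password reset easier to spot than a bare KeyError would.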

- Finally, invoke the Amazon API Gateway endpoint.

Note: replace example_url with your Amazon API Gateway URL.

CHANGE_AVATAR

Payload:

{

"avatar": string representing the avatar id

}

Linux

curl -d '{"avatar":"Ada"}' -X POST example_url/change_avatar -H 'Content-Type: application/json' -H "Authorization:$TOKEN"

Windows

curl -d "{\"avatar\":\"Ada\"}" -X POST "example_url/change_avatar" --header "Content-Type: application/json" --header "Authorization:%TOKEN%"

CHANGE_BACKGROUND

Payload:

{
  "imageInputDetails": {
    "imagename": string representing the name of the image file generated and uploaded to the Amazon S3 bucket,
    "inputText": string input prompt used to generate the image,
    "bedrockParameters": {
      "cfg_scale": float parameter that controls how closely the image generation process follows the text prompt. The higher the value, the more closely the image follows the given text input,
      "seed": int number used to initialize the generation,
      "steps": int number of steps
    }
  }
}

Linux

curl -d '{"imageInputDetails": {"imagename": "aslimage22", "inputText": "american sign language translator 3d avatar, photorealistic, painted, 8k quality, unreal engine", "bedrockParameters": {"cfg_scale": 4.4, "seed": 416330048, "steps": 34}}}' -X POST example_url/change_background -H 'Content-Type: application/json' -H "Authorization:$TOKEN"

Windows

curl -d "{\"imageInputDetails\": {\"imagename\": \"aslimage22\", \"inputText\": \"american sign language translator 3d avatar, photorealistic, painted, 8k quality, unreal engine\", \"bedrockParameters\": {\"cfg_scale\": 4.4, \"seed\": 416330048, \"steps\": 34}}}" -X POST "example_url/change_background" --header "Content-Type: application/json" --header "Authorization:%TOKEN%"
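Because the CHANGE_BACKGROUND payload is nested, it is easy to misplace a key when writing it by hand. The helper below is a sketch that assembles the structure described above from plain arguments. The function name and the validation rules (non-empty strings, integer seed, positive integer step count) are assumptions of this example, not constraints documented by the API.

```python
import json


def change_background_payload(
    imagename: str,
    input_text: str,
    cfg_scale: float,
    seed: int,
    steps: int,
) -> dict:
    """Build the nested request body for the change_background endpoint."""
    if not imagename or not input_text:
        raise ValueError("imagename and input_text must be non-empty")
    # bool is a subclass of int in Python, so it is rejected explicitly.
    if isinstance(seed, bool) or not isinstance(seed, int):
        raise ValueError("seed must be an int")
    if isinstance(steps, bool) or not isinstance(steps, int) or steps <= 0:
        raise ValueError("steps must be a positive int")
    return {
        "imageInputDetails": {
            "imagename": imagename,
            "inputText": input_text,
            "bedrockParameters": {
                "cfg_scale": float(cfg_scale),
                "seed": seed,
                "steps": steps,
            },
        }
    }


payload = change_background_payload(
    imagename="aslimage22",
    input_text="american sign language translator 3d avatar, photorealistic",
    cfg_scale=4.4,
    seed=416330048,
    steps=34,
)
print(json.dumps(payload))
```

The printed JSON can be passed to curl with `-d` in place of the hand-written string.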

TRANSLATION

Payload:

{
  "iterations": int number of Amazon Bedrock simplification iterations over the input sentence,
  "message": string input sentence to translate
}

Linux

curl -d '{"iterations":3, "message":"la vie en rose."}' -X POST example_url/translate -H 'Content-Type: application/json' -H "Authorization:$TOKEN"

Windows

curl -d "{\"iterations\":3, \"message\":\"la vie en rose.\"}" -X POST "example_url/translate" --header "Content-Type: application/json" --header "Authorization:%TOKEN%"

STOP_ALL_ACTIVITIES

Payload:

None

Linux

curl -X POST example_url/stop_all -H 'Content-Type: application/json' -H "Authorization:$TOKEN"

Windows

curl -X POST "example_url/stop_all" --header "Content-Type: application/json" --header "Authorization:%TOKEN%"

CHANGE_SETTINGS

Payload:

{

"sign_rate": float rate at which signs are displayed

}

Linux

curl -d '{"sign_rate":3.5}' -X POST example_url/change_settings -H 'Content-Type: application/json' -H "Authorization:$TOKEN"

Windows

curl -d "{\"sign_rate\":3.5}" -X POST "example_url/change_settings" --header "Content-Type: application/json" --header "Authorization:%TOKEN%"

You can invoke similar HTTP requests to test the other parts of the Guidance API.
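The curl calls above all follow one pattern: a POST to `<API URL>/<endpoint>` with a JSON body and the access token in the Authorization header. The sketch below captures that pattern with the Python standard library. The function name, the base URL, and the token value are placeholders; the endpoint names are taken from the examples above.

```python
import json
import urllib.request
from typing import Optional

ENDPOINTS = ("change_avatar", "change_background", "translate", "stop_all", "change_settings")


def build_request(
    base_url: str,
    endpoint: str,
    token: str,
    payload: Optional[dict] = None,
) -> urllib.request.Request:
    """Prepare (but do not send) a POST request for one of the API endpoints."""
    if endpoint not in ENDPOINTS:
        raise ValueError(f"unknown endpoint: {endpoint}")
    data = json.dumps(payload).encode("utf-8") if payload is not None else None
    return urllib.request.Request(
        url=f"{base_url.rstrip('/')}/{endpoint}",
        data=data,
        headers={"Content-Type": "application/json", "Authorization": token},
        method="POST",
    )


# Placeholder URL and token; substitute your API Gateway URL and access token.
request = build_request(
    "https://api.example.com/prod",
    "translate",
    "AccessTokenValue",
    {"iterations": 3, "message": "la vie en rose."},
)
print(request.get_method(), request.full_url)
# To actually send it: urllib.request.urlopen(request).read()
```

Separating request construction from sending keeps the example safe to run without network access and makes the headers easy to inspect before calling the live API.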

Uninstall the Guidance

Users are responsible for deleting the Guidance stack to avoid unexpected charges. A sample command for initiation of deletion is shown below:

$ cdk destroy AwsAslCdkStack

Then, using the AWS Management Console, delete the related Amazon CloudWatch logs, and empty and delete the S3 buckets.

Contributors

We would like to acknowledge the contributions of these editors and reviewers.

- Alexa Perlov, Prototyping Architect

- Alain Krok, Sr Prototyping Architect

- Daniel Zilberman, Sr Solutions Architect - Tech Solutions

- David Israel, Sr Spatial Architect

- Dinesh Sajwan, Sr Prototyping Architect

- Michael Tran, Sr Prototyping Architect

Notices

Customers are responsible for making their own independent assessment of the information in this document. This document: (a) is for informational purposes only, (b) represents AWS current product offerings and practices, which are subject to change without notice, and (c) does not create any commitments or assurances from AWS and its affiliates, suppliers or licensors. AWS products or services are provided “as is” without warranties, representations, or conditions of any kind, whether express or implied. AWS responsibilities and liabilities to its customers are controlled by AWS agreements, and this document is not part of, nor does it modify, any agreement between AWS and its customers.